I have a problem with the “Ansel Adams Fallacy” and “Telephoto Compression” being treated as a function of the distance from which you view the 2D image. There are gaps between prediction and observation, and problems with trying to make the maths cover what are human perceptual effects.

There are aspects of this subject I don’t mention, but that doesn’t mean that, for example, the angle of view of the image doesn’t affect our perception of perspective. It just means that I’m not scrabbling about for a mathematical answer to perceptual issues.

What I’m trying to do is keep it simple, and so highlight the limits of the maths and the nature of perception. And before some quote the title and use it as absolute verified proof that it’s wrong, I may be joking here. Besides, the “I said/he said” method is not really scientific proof…

Ok, so we stand at a point in the landscape and take two photos, one with a 24mm lens and one with a 200mm lens.

If we look entirely at the maths we find that the geometry of image formation predicts that the perspective in both should be identical. And sure enough, if we scale one and do a pixel overlay on top of the other, we see that they are indeed identical (though the telephoto shot doesn’t contain any part of the image we say has wide angle distortion).

Compression/elongation of objects is entirely a function of camera position/distance.
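The geometry here can be sketched in a few lines of Python. This is only an illustration under a simple pinhole model, and the function names, the 10 m object height and the 100 m / 200 m distances are my own assumptions, not measurements. It compares how big a far object is drawn relative to an identical near one, first for both lenses from the same spot, then for the 24mm lens after walking 90 m closer.

```python
def projected_height_mm(focal_length_mm, object_height_m, distance_m):
    """Height of an object's image on the sensor under a pinhole model."""
    return focal_length_mm * object_height_m / distance_m


def far_to_near_ratio(focal_length_mm, near_m, far_m, object_height_m=10.0):
    """Drawn size of the far object relative to an identical near object."""
    near = projected_height_mm(focal_length_mm, object_height_m, near_m)
    far = projected_height_mm(focal_length_mm, object_height_m, far_m)
    return far / near


# Same camera position, two focal lengths (assumed distances: 100 m and 200 m).
same_spot_24 = far_to_near_ratio(24, near_m=100, far_m=200)
same_spot_200 = far_to_near_ratio(200, near_m=100, far_m=200)

# Same 24mm lens, but the camera has moved 90 m closer to both objects.
moved_closer_24 = far_to_near_ratio(24, near_m=10, far_m=110)

print(same_spot_24, same_spot_200, moved_closer_24)
```

The focal length cancels out of the ratio, so both lenses give 0.5 from the same spot. Only moving the camera changes it (to roughly 0.09 in this made-up case).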

But if we include the human visual system, by looking at the two images side by side and both at the same dimensions, we find that they do not look the same at all.

Why does what we see differ from the maths? We need to look at two things here: the maths, and, because we have introduced the human visual system, any possible effect that it has.

So we may say, “hang on a minute. The maths says that the image was formed by ray tracing from the actual objects in the scene through the centre of the lens on to the 2D sensor. So you need to define a point in 3D space from which to view the image in order for the maths to be reversed!”

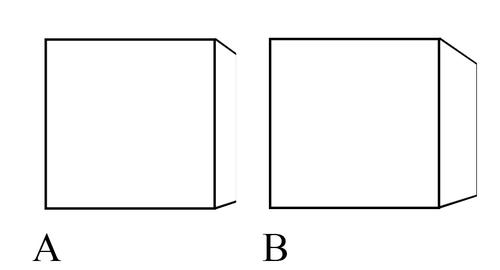

So we go from the formation of the image here:

There is an element of human perception that renders this very difficult to show by visual example. So you’ll have to take it on faith that if you tested this observation in a controlled manner you would find it to be true. There is a theoretical point from which we can view an image (measured from the lens in the eye) and see the perspective as natural, or closest to what we saw from the camera position. Further to this, there is a direct mathematical relationship between this point and the centre point of the camera lens when taking the photo.
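For reference, the relationship usually quoted for that viewing point is the focal length multiplied by the enlargement of the print. The sketch below assumes a subject at or near infinity, an uncropped frame and a 36 mm wide sensor. The function name and the 360 mm print width are purely illustrative.

```python
def centre_of_perspective_mm(focal_length_mm, print_width_mm, sensor_width_mm=36.0):
    """Viewing distance at which the print subtends the same angles as the scene.

    Assumes focus near infinity (image distance ~ focal length) and no cropping.
    """
    enlargement = print_width_mm / sensor_width_mm
    return focal_length_mm * enlargement


# A hypothetical 360 mm wide print from each lens.
wide_view_mm = centre_of_perspective_mm(24, print_width_mm=360)
tele_view_mm = centre_of_perspective_mm(200, print_width_mm=360)

print(wide_view_mm, tele_view_mm)
```

Under those assumptions the 24mm print would be viewed from 240 mm and the 200mm print from 2 m. That is the geometric prediction only, and it says nothing yet about what we actually perceive from those positions.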

Now it can be very tempting to relate this entirely to the maths of image formation because it gives the neatly symmetrical and logical order people desire. But let’s see why this doesn’t work.

So let's see the actual, and mathematically correct representation of mapping 2D images with a lens, one without the assumptions of perception:

In our modified view we see that the mathematical reality of mapping a 2D image to a 2D image, whether via camera, retina, or photocopier, is the same: the 2D image gets mapped exactly how it is.

There is no projection of a 3D understanding predicted by the maths. That is entirely the desire of the human mind to form that understanding, or to relate it to our understanding of the real world.

So the absolute mathematically correct perspective predicted by the geometry of image formation and contained within our image of that distant barn is foreshortened. So maths predicts that we see “telephoto compression” when we view an image at the centre of perspective, but we don’t.

And it’s worth seeing the simple point here:

When we view an image from the centre of perspective we don’t see the perspective as it’s contained in the image.

“Whoa!” I hear you all gasp (not), “but you said that if we viewed an image from the centre of perspective we would see the same perspective as we would if we stood on the same spot as the camera.”

Yes.

“So we don’t see the mathematically correct perspective of the real 3D scene either?”

No, you don’t.

Human vision is empirical, which means we learn by trial and error, memory and experience. Our binocular vision and ability to move within a space mean we form a very accurate understanding of our immediate surroundings, but with one important subtraction.

Mathematical perspective dictates that the perception of shape changes with position: close objects elongate, distant ones foreshorten. You can prove this by taking a photo. But we don’t see it as we move through a room (unless you’re wearing glasses for the first time, perhaps…).

So let’s say it would be confusing to see absolute perspective, where objects change shape and the distance between them increases or decreases as we move about. So to make the world less confusing the brain has learnt to subtract that effect and present us with a view where objects maintain a consistent shape and spatial understanding.

In short, what if we have learnt to subtract the foreshortening of distant objects? How would this affect how we perceive perspective?

Well it would mean that in the real world our understanding of relative shape and distance would remain consistent as we approached objects.

But what would this mean when we view an image? Now in the real world one building only looks half the size of another when it stands twice as far away, and as you move closer that relationship changes. In an image those apparent relationships are fixed: one building is still half the size of the other even when you stand in front of the centre of perspective.
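To put rough numbers on that, here is a small sketch. It assumes two identical buildings and that apparent size falls off as one over distance, and the 100 m, 200 m and 50 m figures are made up for illustration.

```python
def apparent_ratio(near_m, far_m, steps_forward_m=0.0):
    """Apparent size of the far building relative to the near one.

    Apparent size is taken as proportional to 1 / distance, so the ratio
    is simply near distance divided by far distance after moving forward.
    """
    return (near_m - steps_forward_m) / (far_m - steps_forward_m)


# In the real scene the ratio changes as we walk 50 m towards the buildings.
scene_at_camera = apparent_ratio(100, 200)
scene_after_walking = apparent_ratio(100, 200, steps_forward_m=50)

# In the photograph the ratio was fixed at the moment of exposure,
# so walking towards the print does not change it.
image_at_any_viewing_distance = scene_at_camera

print(scene_at_camera, scene_after_walking, image_at_any_viewing_distance)
```

In the scene the far building drops from half the apparent size of the near one to a third as we approach. In the print it stays at half wherever we stand.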

(Now this is a perceptual shift, especially if you are still trying to relate this to a mathematical model)

If we view the image of our barn from the centre of perspective then we subtract the same foreshortening as we would from the real scene. That foreshortening is fixed in the 2D image, it doesn’t change, so as we move closer and subtract less (because we’ve learnt to subtract relative to distance) the barn appears to shrink in depth.

As mentioned before, there are problems with demonstrating this, simply because the whole reason our brains do this is to render our understanding consistent. So it follows that we would also try to form a consistent understanding of perspective in images. Which we do.

Let’s also be fair here: if we take an image of our barn with an 800mm lens and move further and further away from it, it never takes on a wide angle perspective, it always looks like a distant object. We simply make incorrect assumptions about its depth, in the same way that when we view it too close we make an incorrect assumption, which is the whole definition of “telephoto compression”. Similarly with a wide angle shot: as you get closer to the image there isn’t a point where a close object looks like a distant one.

Telephoto compression and wide angle distortion are a function of camera position alone, because you can never create them by viewing position alone, even if you viewed your telephoto shot from a country mile away.

Of course the way we see and perceive the world still relates to the mathematical model of perspective. Our understanding of the space we occupy would be fairly inaccurate if it didn’t! But our understanding is empirical: we learn through trial and error, memory and experience, not maths.

Perspective is assumed by the viewer, and we don’t always make correct assumptions with more distant objects. The very nature of a 2D object with its fixed relationships means that our assumptions of perspective in images generate different errors, which is why it’s incredibly difficult to determine the exact point from which an image was taken by holding that image up to the landscape. There is no exact.

We often assume our understanding is correct and mathematically provable simply because we assume vision is absolute, even though there is no evidence to suggest that it is, or even that it would be advantageous to us for it to be that way.

Hopefully the above gives some insight.