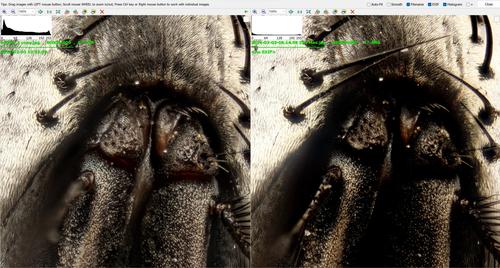

Couldn't resist, so I did some tests. I turned up the contrast and the sharpening a fair bit and, guess what, I gained some detail and lost some detail. The best part is where you see the large hairs disappear against the fine hair: the large hairs are stacked without being chopped off, so that's a win for sure. Fine detail was a little better, but I lost some highlight detail. No biggie there; tomorrow I will make a compromise somewhere in the middle.

-

Thank you for the excellent clarification!

-

@GreatBustard has written:

This is very interesting! So, assuming the same number of stacks with the same lens and same settings (which you said was the case) and also assuming both are equally well focused, then I would argue that the difference comes not from the pixel size, but from differences in the AA filter and/or height of the sensor stack.

Had the AA filter situation been reversed, things would be quite different. I actually assumed that was how it was, as many MFRs don't drop AA filters on FF sensors unless they have more than 30MP, and I assumed that only Canon was using AA filters on APS-C sensors with 20 or more MP.

An AA filter, of course, will not allow full luminance contrast between neighboring pixels. So where the brighter hairs on this fly lie over a dark background, the one-pixel-wide black lines between them come out dark grey. That is the problem with comparing pixel densities that are fairly close, especially with AA variability: there will often be subject matter at a scale that seems to benefit from one pixel density over the other.
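A toy 1-D sketch of that contrast loss. It assumes the AA filter can be modeled as a symmetric kernel that moves a fraction `strength` of each pixel's light onto its two neighbours; real optical low-pass filters split light into four points in 2-D, and both the 0.4 strength and the `scene` values are hypothetical.

```python
def aa_blur(row, strength=0.4):
    """Toy AA filter: keep (1 - strength) of each pixel's light and share the
    rest equally with its two neighbours (edge pixels are clamped)."""
    n = len(row)
    out = []
    for i in range(n):
        left = row[max(i - 1, 0)]
        right = row[min(i + 1, n - 1)]
        out.append((1 - strength) * row[i] + 0.5 * strength * (left + right))
    return out


# Hypothetical row across the fly: bright hairs (1.0) with one-pixel black gaps (0.0).
scene = [1.0, 1.0, 0.0, 1.0, 1.0, 0.0, 1.0, 1.0]

no_aa = scene               # an AA-less sensor records the gaps as black
with_aa = aa_blur(scene)    # the AA-filtered sensor records them as grey

gap_positions = [i for i, v in enumerate(scene) if v == 0.0]
print("gaps without AA:", [no_aa[i] for i in gap_positions])
print("gaps with AA:   ", [round(with_aa[i], 3) for i in gap_positions])
```

With these assumed numbers the gaps come out at 0.4 instead of 0.0; a weaker filter would leave them darker.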

Had Donald used an AA-less 20MP m43 instead of the AA-filtered 6300, the black lines between the hairs would have been a bit darker, and if they weren't already showing as narrow black crevices, it would take minimal USM to blacken them, if that's what you want to see.
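The "minimal USM" step can be sketched with the textbook unsharp mask, sharpened = original + amount × (original − blurred). The 3-pixel box blur, `amount=1.0`, and the `softened` row (grey 0.4 gaps, as an AA filter might leave them) are assumptions for illustration, not settings from any particular raw converter.

```python
def box_blur(row):
    """3-pixel box blur with clamped edges (the 'blurred copy' in an unsharp mask)."""
    n = len(row)
    return [(row[max(i - 1, 0)] + row[i] + row[min(i + 1, n - 1)]) / 3.0
            for i in range(n)]


def unsharp_mask(row, amount=1.0):
    """sharpened = original + amount * (original - blurred), clipped to [0, 1]."""
    blurred = box_blur(row)
    return [min(1.0, max(0.0, v + amount * (v - b))) for v, b in zip(row, blurred)]


# Hypothetical AA-softened row: hairs around 0.8-1.0, one-pixel gaps recorded as grey 0.4.
softened = [1.0, 0.8, 0.4, 0.8, 0.8, 0.4, 0.8, 1.0]
sharpened = unsharp_mask(softened, amount=1.0)
print([round(v, 3) for v in sharpened])
```

Here the gaps drop from 0.4 to about 0.13. The clip to 1.0 matters too: the overshoot on the brightest pixels is thrown away, which is one way heavy sharpening can cost highlight detail.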

So it is frustrating to be an advocate of MUCH HIGHER pixel densities than we currently have, and to watch people compare pixel densities that differ by only 1.2:1 in a linear dimension, when there are so many larger wildcards (conversion styles, AA filters and other glass on the sensors, microlenses) that can obliterate much of the benefit of the 1:1.2 smaller pixel pitch. Change that to a 1:3 ratio and the higher density will pull way ahead: any AA filter on the denser sensor will have a tiny PSF in absolute terms (microns), and that sensor may not even have the filter.
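To put numbers on the 1.2:1 versus 1:3 comparison, a small sketch that computes pixel pitch and the grid's Nyquist frequency. It assumes the 6300 is Sony's a6300 (6000 pixels across a roughly 23.5 mm APS-C sensor) and a 20 MP Four Thirds sensor with 5184 pixels across 17.3 mm; the "1/3 pitch" sensor is hypothetical.

```python
def pixel_pitch_um(sensor_width_mm, pixels_across):
    """Centre-to-centre pixel spacing in microns (square pixels assumed)."""
    return sensor_width_mm * 1000.0 / pixels_across


def nyquist_lp_per_mm(pitch_um):
    """Highest line-pair frequency the pixel grid can represent: 1 / (2 * pitch)."""
    return 1000.0 / (2.0 * pitch_um)


aps_c_24mp = pixel_pitch_um(23.5, 6000)   # assumed a6300-class sensor, ~3.92 um
m43_20mp = pixel_pitch_um(17.3, 5184)     # assumed 20 MP Four Thirds sensor, ~3.34 um
hypothetical = aps_c_24mp / 3.0           # hypothetical sensor at 1/3 the pitch

linear_ratio = aps_c_24mp / m43_20mp      # ~1.17, i.e. the "1.2:1" case

for label, pitch in [("APS-C 24 MP", aps_c_24mp),
                     ("m43 20 MP", m43_20mp),
                     ("1/3 pitch", hypothetical)]:
    print(f"{label:12s} pitch {pitch:5.2f} um  Nyquist {nyquist_lp_per_mm(pitch):6.1f} lp/mm")
print(f"linear ratio APS-C:m43 = {linear_ratio:.2f}")
```

If, as assumed here, an AA filter tuned to a sensor blurs on roughly the scale of that sensor's pitch, its blur on the 1/3-pitch sensor would also be about a third as wide in microns.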

I don't think focus stacking, with all the extra wildcards it presents, is the best way to demonstrate pixel density ramifications in general. We saw early on that the software was choking on the smaller, AA-filtered pixels, probably because it was written by someone who liked to input under-sampled images, and it relied on high neighbor-pixel contrast to see what it was doing, or to decide what is "in focus". There's no question in my mind that, despite higher density being better on average, AOTBE, for maximum IQ (NOT PQ!), there are certain functional tasks where higher density is more of a challenge when neighbor-pixel contrast is relied upon. One is the software Don used, which needed more specific control to stack properly with the softer pixels. Another is very-low-light AF sensitivity, which is somewhat proportional to pixel size, as with Canon R bodies. That creates a situation where it is easy to get focus and more detail with smaller pixels in good light, but in very low light you need to use faster, shorter lenses that put no extra pixels on-subject to maintain focus ability.
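One common way stacking software decides what is "in focus" is to measure local contrast between neighbouring pixels and, at each position, keep the frame where that contrast is highest. The sketch below does this in 1-D with the absolute discrete Laplacian as the focus measure. It is a generic illustration, not a description of what the program Don used actually does, and all frame values are made up.

```python
def local_contrast(row, i):
    """Focus measure: absolute discrete Laplacian |2*x[i] - x[i-1] - x[i+1]| (edges clamped)."""
    n = len(row)
    left = row[max(i - 1, 0)]
    right = row[min(i + 1, n - 1)]
    return abs(2 * row[i] - left - right)


def stack(frames):
    """For each pixel, take the value from the frame with the highest local contrast."""
    n = len(frames[0])
    result, choice = [], []
    for i in range(n):
        best = max(range(len(frames)), key=lambda k: local_contrast(frames[k], i))
        choice.append(best)
        result.append(frames[best][i])
    return result, choice


# Two hypothetical frames: frame 0 is sharp on the left, frame 1 on the right.
frame0 = [1.0, 0.0, 1.0, 0.6, 0.6, 0.6]
frame1 = [0.6, 0.6, 0.6, 1.0, 0.0, 1.0]
merged, choice = stack([frame0, frame1])
print(merged, choice)

# The same one-pixel gap, crisp versus AA-softened: the softer version
# scores lower on this focus measure.
crisp = [1.0, 1.0, 0.0, 1.0, 1.0]
softened = [1.0, 0.8, 0.4, 0.8, 1.0]
print(local_contrast(crisp, 2), local_contrast(softened, 2))
```

Any fixed contrast threshold tuned on crisp, under-sampled pixels would therefore see softer pixels as less "in focus", which is consistent with the extra manual control the stack needed.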

If it is truly easier to align images that are under-sampled, or to determine which frame is most in focus for a given point in the scene, then software could conceivably meet its own demands by working on pixelated (downsampled) versions of the images, determining everything that needs to be determined, and then applying that information to the full-res images. But software that doesn't rely on high neighbor-pixel contrast, which might be a little slower, might be a better solution, just as AF in low light may be slower with smaller pixels, but there is a potential payoff if or when you do get accurate focus.
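The "work on pixelated copies, then apply to full res" idea is essentially coarse-to-fine (pyramid) alignment. A minimal sketch, assuming frames differ only by a 1-D shift (real stacks also need scale and rotation): estimate the shift on 2x-binned copies, then refine within ±1 pixel on the full-resolution data. The frame values are hypothetical.

```python
def downsample2(row):
    """2x binning: average adjacent pairs (a trailing odd pixel is dropped)."""
    return [(row[i] + row[i + 1]) / 2.0 for i in range(0, len(row) - 1, 2)]


def mean_abs_diff(a, b, shift):
    """Mean |a[i] - b[i + shift]| over the overlapping pixels."""
    total, count = 0.0, 0
    for i in range(len(a)):
        j = i + shift
        if 0 <= j < len(b):
            total += abs(a[i] - b[j])
            count += 1
    return total / count if count else float("inf")


def best_shift(a, b, candidates):
    """Candidate shift with the lowest mean absolute difference."""
    return min(candidates, key=lambda s: mean_abs_diff(a, b, s))


def coarse_to_fine_shift(ref, moved, max_coarse=4):
    """Estimate the shift on 2x-binned copies, then refine within +/-1 pixel at full res."""
    coarse = best_shift(downsample2(ref), downsample2(moved),
                        range(-max_coarse, max_coarse + 1))
    guess = 2 * coarse
    return best_shift(ref, moved, range(guess - 1, guess + 2))


# Hypothetical frames: a feature, and the same feature displaced 3 pixels to the right.
ref = [0.0] * 6 + [2.0, 8.0, 5.0, 9.0, 3.0, 7.0, 1.0] + [0.0] * 7
moved = [0.0] * 3 + ref[:-3]
print(coarse_to_fine_shift(ref, moved))  # estimated displacement in full-res pixels
```

The coarse pass does the wide search cheaply; the full-resolution pass only checks three candidates, so the fine pixels are used for precision rather than for the search.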

-

@JohnSheehyRev has written:@GreatBustard has written:

<snip>

Thanks for the explanation. Can you now explain how smaller pixels are supposed to capture finer detail given the actual size of the CoC? This is why larger pixels are used for microscope photography. I have read many papers on this; I just can't find them at the moment.

There is no AA filter on m43 sensors.

"Raw capture on the E-M1 II shows good detail, though not a huge advantage over the original E-M1 (note, the E-M1 suffers from shutter shock until you reach ISO 800 in our comparison as earlier firmware versions did not offer zero-second anti-shock). The E-M1 II is just slightly behind the X-T2 in general, and shows some false color and severe aliasing on white-on-black text due to the lack of an AA filter. Noise performance in low light doesn't show much of an advantage over the E-M1, "

-

@DonaldB has written:@JohnSheehyRev has written:@GreatBustard has written:

<snip>

Thanks for the explanation. Can you now explain how smaller pixels are supposed to capture finer detail given the actual size of the CoC? This is why larger pixels are used for microscope photography. I have read many papers on this; I just can't find them at the moment.

The problem with the question is that the "actual size of the CoC" is an arbitrary value chosen by different sources in order to gauge depth of field.

Some sources base CoC on a given number of pixels, others base it on lens data from Zeiss, others choose a fraction of the sensor diagonal, others just use a common value for everything. That makes any response to the question moot, I reckon.

Head to an AI facility and ask "what is the CoC of a camera?" (kidding).
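To show how much the answer depends on the convention, a short sketch computing a CoC three ways. The divisors 1500 and 1730 (the latter often called the "Zeiss formula") are conventions in common use; the 2-pixel rule and the pixel pitches are hypothetical examples.

```python
import math


def diagonal_mm(width_mm, height_mm):
    """Sensor diagonal in mm."""
    return math.hypot(width_mm, height_mm)


def coc_diagonal_rule(width_mm, height_mm, divisor):
    """CoC as a fraction of the sensor diagonal (d/1500 and d/1730 are both in use)."""
    return diagonal_mm(width_mm, height_mm) / divisor


def coc_pixel_rule(pixel_pitch_um, pixels=2):
    """CoC tied to the pixel grid; the choice of 2 pixels is a hypothetical example."""
    return pixels * pixel_pitch_um / 1000.0


# width, height (mm), assumed pixel pitch (um)
sensors = {"FF": (36.0, 24.0, 5.9), "m43": (17.3, 13.0, 3.34)}
for name, (w, h, pitch) in sensors.items():
    print(f"{name}: d/1500 = {coc_diagonal_rule(w, h, 1500):.4f} mm, "
          f"d/1730 = {coc_diagonal_rule(w, h, 1730):.4f} mm, "
          f"2 px = {coc_pixel_rule(pitch):.4f} mm")
```

For the same m43 camera these rules range from roughly 0.007 mm to 0.014 mm, about a factor of two, so there is no single "actual" CoC to reason from.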

-

@xpatUSA has written:@DonaldB has written:@JohnSheehyRev has written:@GreatBustard has written:

<snip>

We are talking about the visible light that can pass through the CoC (Airy disk) at high magnifications.

nif.hms.harvard.edu/sites/nif.hms.harvard.edu/files/education-files/Fundamentals%20in%20Optics_0.pdf

-

@DonaldB has written:

Thanks for the explanation. Can you now explain how smaller pixels are supposed to capture finer detail given the actual size of the CoC? This is why larger pixels are used for microscope photography. I have read many papers on this; I just can't find them at the moment.

You frequently quoted this page at DPReview as evidence of an ideal pixel size:

amscope.com/pages/camera-resolution

"It is arguable that a 1:1 ratio is sufficient to resolve the image, but a 1:2 ratio would be preferable. This means that the pixel-size should be no larger than half the magnified-resolution."

It's advising you not to go larger than that. The pixel size can of course be smaller in order to retain better detail (or at least the same detail) because any diffraction that occurs will still only occupy the same percentage of the image area. The claim that there is some 'ideal' pixel size for this purpose was disputed at DPReview the last time it came up:

www.dpreview.com/forums/thread/4734289?page=5#forum-post-67341358
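The quoted rule sets a ceiling on pixel size, not a target. As a sketch, assuming the page's "resolution" means the Rayleigh limit 0.61 λ/NA at the specimen (the page may use a different constant), green light, and a hypothetical 10x objective with NA 0.25:

```python
def rayleigh_resolution_um(wavelength_um, numerical_aperture):
    """Smallest resolvable separation at the specimen (Rayleigh criterion)."""
    return 0.61 * wavelength_um / numerical_aperture


def max_pixel_um(wavelength_um, numerical_aperture, magnification, pixels_per_resolution=2):
    """Largest pixel that still gives `pixels_per_resolution` samples across the
    magnified resolution (2 = the 1:2 ratio the quoted page prefers)."""
    magnified = rayleigh_resolution_um(wavelength_um, numerical_aperture) * magnification
    return magnified / pixels_per_resolution


limit = max_pixel_um(0.55, 0.25, 10)   # hypothetical 10x objective, NA 0.25, green light
for pitch in (3.34, 5.9, 8.0):         # example pixel pitches in microns
    verdict = "within the limit" if pitch <= limit else "too large"
    print(f"{pitch:.2f} um pixel: {verdict} (max {limit:.2f} um)")
```

Any pixel at or below the ceiling satisfies the rule; nothing in it says that a smaller pixel loses detail.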

-

@sybersitizen has written:@DonaldB has written:

<snip>

It's advising you not to go larger than that. The pixel size can of course be smaller in order to retain better detail (or at least the same detail) because any diffraction that occurs will still only occupy the same percentage of the image area. The claim that there is some 'ideal' pixel size for this purpose was disputed at DPReview the last time it came up:

www.dpreview.com/forums/thread/4734289?page=5#forum-post-67341358

It's always disputed, because no one can take a 10x image to prove anything except for me.

Thanks for the link.

-

@DonaldB has written:@sybersitizen has written:@DonaldB has written:

<snip>

It's always disputed, because no one can take a 10x image to prove anything except for me.

Just about anybody can, though hardly anybody chooses to.

If you could take a 10x image with a much higher resolution full-frame camera, then we could see a meaningful comparison between that and your present camera. There's no reason to expect the effects of diffraction to be any worse if both were viewed at the same size, and the higher resolution version might be visibly better.

Quoted message: Thanks for the link.

You're welcome.

-

@sybersitizen has written:@DonaldB has written:@sybersitizen has written:@DonaldB has written:

<snip>

If you could take a 10x image with a much higher resolution full-frame camera, then we could see a meaningful comparison between that and your present camera. There's no reason to expect the effects of diffraction to be any worse if both were viewed at the same size, and the higher resolution version might be visibly better.

I posted some images on here. Don't ask how we got into the discussion on that thread 🤔🤨

-

@DonaldB has written:@xpatUSA has written:@DonaldB has written:@JohnSheehyRev has written:@GreatBustard has written:

<snip>

We are talking about the visible light that can pass through the CoC (Airy disk) at high magnifications.

nif.hms.harvard.edu/sites/nif.hms.harvard.edu/files/education-files/Fundamentals%20in%20Optics_0.pdf

Neither "CoC" nor "Airy disk" appears in the above link, so I was forced to ask my new buddy:

Me

"is an Airy disk or disc the same size as a circle of confusion?"

ChatGPT

"The Airy disk and the circle of confusion are related optical concepts, but they refer to different phenomena and are not the same in size."

In other words, your "CoC (airy disk)" is incorrect, and I am beginning to wonder if you know what either of them means.

It appears that you have found a new meaning of "CoC", which everybody except you knows stands for "Circle of Confusion".

I'm hoping that you are not being deliberately obtuse and are just confused by specific technicalities ...

-

@DonaldB has written:@sybersitizen has written:

If you could take a 10x image with a much higher resolution full-frame camera, then we could see a meaningful comparison between that and your present camera. There's no reason to expect the effects of diffraction to be any worse if both were viewed at the same size, and the higher resolution version might be visibly better.

I posted some images on here. Don't ask how we got into the discussion on that thread 🤔🤨

Those were taken with two different sensor sizes, and I can't tell what you did there. One of them doesn't even seem to be in focus. Also, they're stacks made from multiple images, aren't they? If so, that adds a variable that voids the strict analysis of pixel size comparisons. Comparisons have to be rigorously controlled to be meaningful.

-

@sybersitizen has written:@DonaldB has written:@sybersitizen has written:

<snip>

Those were taken with two different sensor sizes, and I can't tell what you did there. One of them doesn't even seem to be in focus. Also, they're stacks made from multiple images, aren't they? If so, that adds a variable that voids the strict analysis of pixel size comparisons. Comparisons have to be rigorously controlled to be meaningful.

Show me a better setup anywhere in the world for shooting live 10x subjects in a controlled environment 🙄😊😂

Please download for ultimate viewing pleasure. The black border is 1 mm.

-

@DonaldB has written:

We are talking about the visible light that can pass through the CoC (Airy disk) at high magnifications.

Just so you know, that doesn't make any sense. No light passes through the CoC or the Airy disk; neither is a physical object, and the CoC and the Airy disk are two totally different things anyway.
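To make the difference concrete: the Airy disk size follows from the f-number and the wavelength alone, while the CoC is whatever blur tolerance one chooses. A sketch, assuming green light (0.55 µm) and the diagonal/1500 CoC convention for a 17.3 × 13 mm sensor:

```python
import math


def airy_diameter_um(f_number, wavelength_um=0.55):
    """Diameter of the Airy disk to its first dark ring: 2.44 * wavelength * N.
    Set by the lens's f-number and the wavelength, not by the sensor."""
    return 2.44 * wavelength_um * f_number


def coc_um(width_mm, height_mm, divisor=1500):
    """A CoC by one common convention (diagonal / 1500): a chosen blur tolerance."""
    return math.hypot(width_mm, height_mm) / divisor * 1000.0


m43_coc = coc_um(17.3, 13.0)
for n in (4, 8, 16, 45):
    airy = airy_diameter_um(n)
    print(f"f/{n}: Airy disk {airy:5.1f} um vs m43 CoC {m43_coc:.1f} um")
```

With these assumptions the Airy disk outgrows that CoC at about f/11, but where that crossing falls depends entirely on which CoC convention was picked.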

Quoted message: nif.hms.harvard.edu/sites/nif.hms.harvard.edu/files/education-files/Fundamentals%20in%20Optics_0.pdf

What part of that 40-page document are you referring to? Can you copy and paste it? Let me give a quote from a link (feel free to read the whole page, though!):

www.scantips.com/lights/diffraction.html

The lens image was created containing the added diffraction detail, and it is what it is, just another image. The sensor does not care what the image is, it's all simply detail, colors and intensities actually. The sensor adds a grid of pixels onto that image. The digital sensor reproduces the image by sampling colors of many areas (the pixels), the more pixels, the better for resolving finer detail. Diffraction is not possibly aligned centered on pixels anyway, but if the detail spills into neighboring pixels, then those pixels will simply reproduce the color of whatever they see there. If some specific detail is already big, the sampling will not make it bigger. The role of more pixels is to simply better reproduce the finer detail in that image. More smaller pixels show the existing detail better, but pixels do not create any detail (all detail is already in the lens image, each pixel simply records the color it sees in its area). Regardless of what the detail is, more and smaller pixels are always good for better reproduction of that detail. All detail is created by the lens. Recording that detail with more pixels certainly DOES NOT limit detail, more smaller pixels simply reproduce the existing detail better, with greater precision, showing finer detail within it (detail within the detail, so to speak). That's pretty basic. *Yes, larger diffraction is a problem, because it's larger, but growing into adjacent pixels is not an additional problem. It was already larger. Anything that can be resolved is larger than one pixel. Don't worry about pixel size affecting diffraction resolution. Be glad to have the pixels, and worry about the diffraction instead.
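The "more smaller pixels reproduce the existing detail better" argument can be checked numerically. The sketch assumes the lens image of an edge is blurred by a Gaussian with σ = 10 µm (a stand-in for diffraction, not an exact Airy profile), treats pixels as point samples, rebuilds the image by linear interpolation, and reports the worst error against the true lens image. The blur is identical for every pitch; only the fidelity of the recording changes.

```python
import math

SIGMA_UM = 10.0  # assumed lens blur (Gaussian stand-in for diffraction), in microns


def lens_image(x_um):
    """Brightness across a dark-to-bright edge in the (continuous) lens image."""
    return 0.5 * (1.0 + math.erf(x_um / (SIGMA_UM * math.sqrt(2.0))))


def max_reconstruction_error(pitch_um, span_um=60.0, probes_per_pixel=20):
    """Sample the lens image every pitch_um (point samples), rebuild it by linear
    interpolation, and return the worst difference from the true lens image."""
    n = int(2 * span_um / pitch_um) + 1
    xs = [-span_um + k * pitch_um for k in range(n)]
    samples = [lens_image(x) for x in xs]
    worst = 0.0
    for k in range(n - 1):
        for j in range(probes_per_pixel + 1):
            t = j / probes_per_pixel
            x = xs[k] + t * pitch_um
            rebuilt = (1.0 - t) * samples[k] + t * samples[k + 1]
            worst = max(worst, abs(rebuilt - lens_image(x)))
    return worst


for pitch in (8.0, 4.0, 2.0):
    print(f"{pitch:.0f} um pixels: worst error {max_reconstruction_error(pitch):.4f}")
```

Halving the pitch cuts the worst-case error to roughly a quarter, as expected for linear interpolation, while the blur itself, set by `SIGMA_UM`, never changes.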

-

@GreatBustard has written:@DonaldB has written:

<snip>

Don't worry about pixel size affecting diffraction resolution. Be glad to have the pixels, and worry about the diffraction instead.

So shooting at f/45 on an m43 sensor is great, with no diffraction? 🤔

-

@DonaldB has written:@GreatBustard has written:@DonaldB has written:

<snip>

So shooting at f/45 on an m43 sensor is great, with no diffraction? 🤔

So which image was shot with m43? Both are 14 MP images.

-

@GreatBustard has written:

Anything that can be resolved is larger than one pixel. Don't worry about pixel size affecting diffraction resolution. Be glad to have the pixels, and worry about the diffraction instead.

I feel it all comes down to how well we can resolve the actual blur spot, and because of how we create an image, this would take several photosites.

Most people think of the limit as the AD (Airy disk) being about the size of a pixel.

But the reality is that we are recording color, so it takes more than just one site.
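On the colour point, a rough sketch of how many photosites fall across an Airy disk, counting all sites and then only red (or blue) sites, which in an RGGB Bayer mosaic repeat every second pixel along a row or column. It assumes green light (0.55 µm) and an example 3.34 µm pitch, and it ignores demosaicing, which interpolates each colour from its neighbours.

```python
def airy_diameter_um(f_number, wavelength_um=0.55):
    """Airy disk diameter to the first dark ring: 2.44 * wavelength * N."""
    return 2.44 * wavelength_um * f_number


def sites_across(diameter_um, pixel_pitch_um):
    """Photosites spanned by a spot along one row: all sites, and red-or-blue sites.
    In an RGGB mosaic red and blue each repeat every 2 pixels in both directions;
    green fills the remaining half of the sites in a checkerboard."""
    return {
        "all": diameter_um / pixel_pitch_um,
        "red_or_blue": diameter_um / (2.0 * pixel_pitch_um),
    }


PITCH_UM = 3.34  # example pitch, roughly a 20 MP Four Thirds sensor
for n in (2.8, 8, 22):
    counts = sites_across(airy_diameter_um(n), PITCH_UM)
    print(f"f/{n}: {counts['all']:.1f} sites across, {counts['red_or_blue']:.1f} red sites")
```

At f/2.8 the disk spans only about one photosite and about half a red site; by f/8 it spans about three photosites and one and a half red sites.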

Is this better? I would think not, as we still are not really mapping the AD.

How about now? Probably not, as ADs do not just align with the sites that record our image; many ADs overlap throughout the image.

-

@DonaldB has written:@GreatBustard has written:

<snip>

So shooting at f/45 on an m43 sensor is great, with no diffraction? 🤔

No one said, or implied, any such thing. What was said is that whatever detail there is comes from the lens, and that more, smaller pixels simply record that detail, whatever it may be, more accurately.

Quoted message: So which image was shot with m43 (smaller pixels)? Both are 14 MP images.

We're not saying that the FF photo is less detailed. We're saying the reason isn't larger (or fewer) pixels; the reason is something else (e.g. the AA filter, the depth of the filter stack, the way the software processes the photos, etc.). More, smaller pixels will always result in more detail, all else equal. Since they don't appear to be doing so in the examples you're posting, the cause lies elsewhere, and it would be informative to find out what is causing the discrepancy.
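The "all else equal" claim can be sketched with the standard MTF model: system response = diffraction MTF × pixel-aperture MTF. Assumptions: an aberration-free lens, green light, square pixels with 100% fill factor, and no AA filter, demosaicing, or noise (exactly the wildcards discussed in this thread), so the model isolates pixel size only. Frequencies are line pairs per mm on the sensor.

```python
import math

WAVELENGTH_MM = 0.00055  # assumed green light, 550 nm


def diffraction_mtf(freq_lp_mm, f_number):
    """MTF of an ideal (aberration-free) circular aperture under incoherent light."""
    cutoff = 1.0 / (WAVELENGTH_MM * f_number)
    s = freq_lp_mm / cutoff
    if s >= 1.0:
        return 0.0
    return (2.0 / math.pi) * (math.acos(s) - s * math.sqrt(1.0 - s * s))


def pixel_mtf(freq_lp_mm, pitch_um):
    """MTF of a square pixel aperture with 100% fill factor, along one axis."""
    x = math.pi * freq_lp_mm * pitch_um / 1000.0
    return 1.0 if x == 0 else abs(math.sin(x) / x)


def system_mtf(freq_lp_mm, f_number, pitch_um):
    """Product of the two; ignores aberrations, AA filters, demosaicing and noise."""
    return diffraction_mtf(freq_lp_mm, f_number) * pixel_mtf(freq_lp_mm, pitch_um)


for f_number, freq in ((8, 50), (45, 20)):
    for pitch in (6.0, 3.34, 2.0):
        print(f"f/{f_number}, {freq} lp/mm, {pitch} um pixels: "
              f"MTF {system_mtf(freq, f_number, pitch):.3f}")
```

Below the larger pixel's Nyquist frequency, a smaller pitch only moves the pixel term closer to 1, so it never lowers the product in this model; at f/45 the diffraction term dominates whatever the pitch.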

Basically, Don, it's like this: your taxes went up and you're saying it's because of illegals crossing the border, which is absolutely not true, as opposed to any number of actual reasons that caused your taxes to increase.