But that's the whole point: my microscope objectives are eq to f16 FF, which is equal to f45 m43. All the microscope sites say the same. Large pixels are better; there's no argument unless you want to post some of your 10x shots to prove me wrong 😜

-


@DonaldB has written:

But that's the whole point: my microscope objectives are eq to f16 FF, which is equal to f45 m43.

First you have to understand why FF f16 is equal to f45 on m43? Here is a hint: it has nothing to do with the pixel size.
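A minimal sketch of the usual equivalence argument, under stated assumptions: 550 nm light and nominal sensor sizes of 36 × 24 mm (FF) and 17.3 × 13.0 mm (m43). The Airy disk diameter on the sensor depends only on wavelength and f-number, so two formats show the same relative diffraction blur when their f-numbers scale with the ratio of the sensor diagonals. Pixel pitch never enters the calculation. The function names are illustrative, not from any library.

```python
import math

WAVELENGTH_UM = 0.55  # assumption: green light, 550 nm

# Assumed nominal sensor diagonals, in millimetres
FF_DIAGONAL_MM = math.hypot(36.0, 24.0)   # full frame, about 43.3 mm
M43_DIAGONAL_MM = math.hypot(17.3, 13.0)  # Micro Four Thirds, about 21.6 mm


def airy_diameter_um(f_number: float, wavelength_um: float = WAVELENGTH_UM) -> float:
    """Airy disk diameter (to the first dark ring) on the sensor, in micrometres."""
    return 2.44 * wavelength_um * f_number


def relative_blur(f_number: float, diagonal_mm: float) -> float:
    """Airy disk diameter as a fraction of the sensor diagonal.

    This is the quantity that matters when both images are viewed at the same size.
    """
    return airy_diameter_um(f_number) / (diagonal_mm * 1000.0)


def equivalent_f_number(f_number: float, from_diag_mm: float, to_diag_mm: float) -> float:
    """f-number on the target format that gives the same relative Airy disk size."""
    return f_number * to_diag_mm / from_diag_mm


crop = FF_DIAGONAL_MM / M43_DIAGONAL_MM
n_m43 = equivalent_f_number(16.0, FF_DIAGONAL_MM, M43_DIAGONAL_MM)
print(f"crop factor: {crop:.2f}")
print(f"FF f/16 matches m43 f/{n_m43:.1f}")
print(f"relative blur, FF f/16: {relative_blur(16.0, FF_DIAGONAL_MM):.3e}")
print(f"relative blur, m43 f/{n_m43:.1f}: {relative_blur(n_m43, M43_DIAGONAL_MM):.3e}")
```

Going from m43 to FF instead, swap the two diagonals so the f-number is multiplied by the same ratio rather than divided.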

-

@IanSForsyth has written:@DonaldB has written:

But that's the whole point: my microscope objectives are eq to f16 FF, which is equal to f45 m43.

First you have to understand why FF f16 is equal to f45 on m43? Here is a hint: it has nothing to do with the pixel size.

So are you saying that the OM-1 26 meg sensor has the same diffraction limit as the GH5S 10 meg? 🤔

-

@DonaldB has written:

So are you saying that the OM-1 26 meg sensor has the same diffraction limit as the GH5S 10 meg?

What is causing the diffraction: is it the sensor, or is it the lens and how far it has been stopped down?

If it is the lens, then why would the sensor behind it cause more diffraction?

-

@IanSForsyth has written:@DonaldB has written:

So are you saying that the OM-1 26 meg sensor has the same diffraction limit as the GH5S 10 meg?

What is causing the diffraction: is it the sensor, or is it the lens and how far it has been stopped down?

If it is the lens, then why would the sensor behind it cause more diffraction?

Last word on the subject:

“Diffraction is related to pixel size, not sensor size. The smaller the pixels, the sooner diffraction effects will be noticed.”

blog.kasson.com/the-last-word/diffraction-and-sensors/#:~:text=%E2%80%9CDiffraction%20is%20related%20to%20pixel,of%20the%20same%20sensor%20dimensions.%E2%80%9D

-

@DonaldB has written:

“Diffraction is related to pixel size, not sensor size. The smaller the pixels, the sooner diffraction effects will be noticed.”

Do you even read what you link to?

blog.kasson.com/the-last-word/diffraction-and-sensors/#

"The first conflation is the lack of distinction between the effects of diffraction and the visibility of those effects. The size of the Airy disk is not a function of sensor size, pixel pitch, or pixel aperture. The size of the Airy disk on the sensor is a function of wavelength and f-stop. That’s all. The size of the Airy disk on the print is a function of both those, plus the ratio of sensor size to print size."

-

@IanSForsyth has written:@DonaldB has written:

“Diffraction is related to pixel size, not sensor size. The smaller the pixels, the sooner diffraction effects will be noticed.”

Do you even read what you link to?

blog.kasson.com/the-last-word/diffraction-and-sensors/#

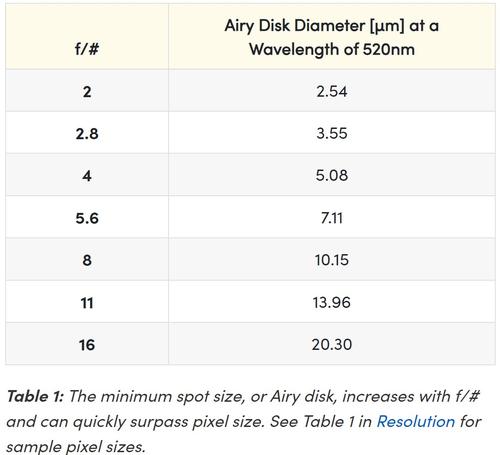

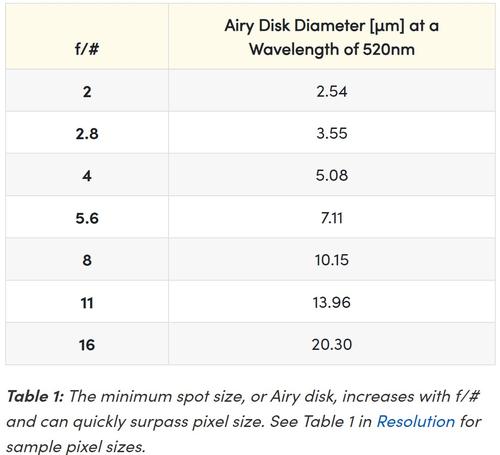

"The first conflation is the lack of distinction between the effects of diffraction and the visibility of those effects. The size of the Airy disk is not a function of sensor size, pixel pitch, or pixel aperture. The size of the Airy disk on the sensor is a function of wavelength and f-stop. That’s all. The size of the Airy disk on the print is a function of both those, plus the ratio of sensor size to print size."edmond optics site

f4 to 5 µm
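For reference, a short sketch of the standard Airy disk formula, d ≈ 2.44 λN, the relationship behind this kind of f-number-to-spot-size figure. The three wavelengths (450, 550 and 650 nm) are illustrative choices, and the only inputs are wavelength and f-number; there is no sensor or pixel parameter anywhere. At f/4 with 550 nm light it gives a little over 5 µm.

```python
def airy_diameter_um(f_number: float, wavelength_nm: float) -> float:
    """Airy disk diameter to the first dark ring, in micrometres.

    Depends only on the wavelength and the f-number of the lens.
    """
    return 2.44 * (wavelength_nm / 1000.0) * f_number


WAVELENGTHS_NM = (450, 550, 650)  # assumed blue, green and red samples
F_NUMBERS = (2.8, 4, 5.6, 8, 11, 16, 22, 45)

# Header and rows use matching column widths so the table lines up
print("f-number  " + "  ".join(f"{w} nm" for w in WAVELENGTHS_NM))
for n in F_NUMBERS:
    row = "  ".join(f"{airy_diameter_um(n, w):6.2f}" for w in WAVELENGTHS_NM)
    print(f"f/{n:<8}{row}")
```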

-

@GreatBustard has written:@DonaldB has written:@GreatBustard has written:

www.scantips.com/lights/diffraction.html

The lens image was created containing the added diffraction detail, and it is what it is, just another image. The sensor does not care what the image is, it's all simply detail, colors and intensities actually. The sensor adds a grid of pixels onto that image. The digital sensor reproduces the image by sampling colors of many areas (the pixels), the more pixels, the better for resolving finer detail. Diffraction is not possibly aligned centered on pixels anyway, but if the detail spills into neighboring pixels, then those pixels will simply reproduce the color of whatever they see there. If some specific detail is already big, the sampling will not make it bigger. The role of more pixels is to simply better reproduce the finer detail in that image. More smaller pixels show the existing detail better, but pixels do not create any detail (all detail is already in the lens image, each pixel simply records the color it sees in its area).

Regardless of what the detail is, more and smaller pixels are always good for better reproduction of that detail. All detail is created by the lens. Recording that detail with more pixels certainly DOES NOT limit detail, more smaller pixels simply reproduce the existing detail better, with greater precision, showing finer detail within it (detail within the detail, so to speak). That's pretty basic.

Yes, larger diffraction is a problem, because it's larger, but growing into adjacent pixels is not an additional problem. It was already larger. Anything that can be resolved is larger than one pixel. Don't worry about pixel size affecting diffraction resolution. Be glad to have the pixels, and worry about the diffraction instead.

So shooting at f45 on an m43 sensor is great and there's no diffraction? 🤔

No one said, or implied, any such thing. What was said is that whatever detail there is comes from the lens, and that more smaller pixels simply record that detail, whatever that detail may be, more accurately.

Quoted message: So which image is shot with m43? Smaller pixels, both 14 meg images.

We're not saying that the FF photo is less detailed. We're saying the reason isn't larger (or fewer) pixels -- the reason is something else (e.g. AA filter, the depth of the filter stack, the way the software processes the photos, etc.). More smaller pixels will always result in more detail, all else being equal. Since they don't appear to be doing so in the examples you're posting, the cause lies elsewhere, and it would be informative to find out what is resulting in the discrepancy.
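A rough sketch of the "all else equal" point, using a common rule of thumb rather than a rigorous model: treat the lens blur (here just the Airy disk, assuming 550 nm light) and the pixel aperture as independent blurs that combine approximately in quadrature. That combination rule is an assumption; real diffraction and pixel responses are not Gaussian, and it ignores the AA filter, filter stack and processing mentioned above. Under that approximation, shrinking the pixel pitch can only reduce the total blur, with diminishing returns once the pixels are much smaller than the Airy disk.

```python
import math


def airy_diameter_um(f_number: float, wavelength_um: float = 0.55) -> float:
    """Airy disk diameter on the sensor; set by the lens, not the sensor."""
    return 2.44 * wavelength_um * f_number


def combined_blur_um(f_number: float, pixel_pitch_um: float) -> float:
    """Approximate total blur, assuming lens and pixel blurs add in quadrature."""
    return math.hypot(airy_diameter_um(f_number), pixel_pitch_um)


# Illustrative f-numbers and pixel pitches, not measurements from the thread
for n in (4, 16):
    for pitch in (5.0, 3.3, 2.0):
        print(f"f/{n}, {pitch} um pixels: ~{combined_blur_um(n, pitch):.2f} um total blur")
```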

Basically, Don, it's like this -- your taxes went up and you're saying it's because of illegals crossing the border, which is absolutely not true, as opposed to any number of actual reasons that caused your taxes to increase.

This is my tax guide:

My pixels are 5 µm, my objectives are f4: I have balanced my books 🤨😎😜

www.edmundoptics.es/knowledge-center/application-notes/imaging/limitations-on-resolution-and-contrast-the-airy-disk/#:~:text=Every%20lens%20has%20an%20upper,will%20still%20be%20diffraction%20limited.
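A minimal sketch that puts the two numbers in this post side by side, assuming 550 nm light and treating "f4" as the working f-number of the light reaching the sensor (an assumption; for a microscope objective the image-side f-number depends on magnification and NA). It compares the Airy disk with the 5 µm pixel pitch, and also compares the diffraction cutoff frequency of an ideal lens, 1/(λN), with the sensor's Nyquist frequency, 1/(2p).

```python
def airy_diameter_um(f_number: float, wavelength_um: float = 0.55) -> float:
    """Airy disk diameter to the first dark ring, in micrometres."""
    return 2.44 * wavelength_um * f_number


def diffraction_cutoff_lp_mm(f_number: float, wavelength_um: float = 0.55) -> float:
    """Spatial frequency at which an ideal, aberration-free lens passes no contrast."""
    return 1000.0 / (wavelength_um * f_number)


def nyquist_lp_mm(pixel_pitch_um: float) -> float:
    """Highest spatial frequency a sensor with this pitch can sample without aliasing."""
    return 1000.0 / (2.0 * pixel_pitch_um)


F_NUMBER = 4.0        # from the post; assumed to be the working f-number
PIXEL_PITCH_UM = 5.0  # from the post

airy = airy_diameter_um(F_NUMBER)
print(f"Airy disk: {airy:.2f} um, about {airy / PIXEL_PITCH_UM:.2f} pixels across")
print(f"diffraction cutoff: {diffraction_cutoff_lp_mm(F_NUMBER):.0f} lp/mm")
print(f"sensor Nyquist:     {nyquist_lp_mm(PIXEL_PITCH_UM):.0f} lp/mm")
```

The first line is the Airy-disk-to-pixel comparison; the last two show how fine a detail the optics could pass versus how fine a detail the pixel grid can sample.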

-


@IanSForsyth has written:@DonaldB has written:

But that's the whole point: my microscope objectives are eq to f16 FF, which is equal to f45 m43.

First you have to understand why FF f16 is equal to f45 on m43?

Nope.

-

@xpatUSA has written:@IanSForsyth has written:@DonaldB has written:

But that's the whole point: my microscope objectives are eq to f16 FF, which is equal to f45 m43.

First you have to understand why FF f16 is equal to f45 on m43?

Nope.

Did I miss something? I didn't see any reference to microscope objectives.

-

@DonaldB has written:@xpatUSA has written:@IanSForsyth has written:@DonaldB has written:

But that's the whole point: my microscope objectives are eq to f16 FF, which is equal to f45 m43.

First you have to understand why FF f16 is equal to f45 on m43?

Nope.

Did I miss something? I didn't see any reference to microscope objectives.

microscopecentral.com/pages/choosing-a-microscope-camera-what-to-look-for

But I would read the whole page. 😁

Quote: Although it seems counter-intuitive, higher magnification objectives actually require fewer pixels. In fact, if you are working at a higher magnification, the optical system is limited to about 3–5 Megapixels that can be transferred to the sensor of a camera. Therefore, if you go out and purchase that 20 Megapixel camera hoping to maximize clarity, know that the “extra pixels” will have NO EFFECT on the resolution of the image, while negatively impacting speed, capacity and sensitivity. However, for lower magnification, a higher pixel count will capture more detail from your microscope.
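The reasoning behind that quote can be sketched with the Rayleigh criterion. This is a simplified model under stated assumptions: 550 nm light, resolution r = 0.61λ/NA at the specimen, the objective's image projected straight onto a 17.3 × 13.0 mm m43 sensor with no relay or reduction optics, and two pixels per resolved distance (a Nyquist-style choice; some guides use 2.3 or 3). The objective/NA pairs are typical values chosen for illustration, and the function names are hypothetical.

```python
WAVELENGTH_UM = 0.55                   # assumed green light
SENSOR_W_MM, SENSOR_H_MM = 17.3, 13.0  # assumed m43 active area


def rayleigh_resolution_um(na: float, wavelength_um: float = WAVELENGTH_UM) -> float:
    """Smallest resolvable separation at the specimen (Rayleigh criterion)."""
    return 0.61 * wavelength_um / na


def required_pixel_pitch_um(magnification: float, na: float, samples_per_resel: float = 2.0) -> float:
    """Pixel pitch that samples the magnified resolution element samples_per_resel times."""
    return rayleigh_resolution_um(na) * magnification / samples_per_resel


def useful_megapixels(magnification: float, na: float) -> float:
    """Pixel count beyond which extra pixels add no optical detail, under these assumptions."""
    pitch_mm = required_pixel_pitch_um(magnification, na) / 1000.0
    return (SENSOR_W_MM / pitch_mm) * (SENSOR_H_MM / pitch_mm) / 1e6


for mag, na in ((4, 0.10), (10, 0.25), (40, 0.65), (100, 1.25)):
    print(f"{mag}x / NA {na}: pitch ~{required_pixel_pitch_um(mag, na):.1f} um, "
          f"~{useful_megapixels(mag, na):.1f} MP")
```

With these assumptions the 4x and 10x examples land at about 5 MP, and the 40x and 100x examples come out lower still. A camera adapter that reduces the image onto the sensor would shift all of these numbers.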

-

@DonaldB has written:@sybersitizen has written:@DonaldB has written:@sybersitizen has written:

If you could take a 10x image with a much higher resolution full frame camera, then we could see a meaningful comparison between that and your present camera. There's no reason to doubt that the effects of diffraction would be no worse if both were viewed at the same size, and the higher resolution version might be visibly better.

I posted some images on here; don't ask how we got into the discussion on that thread 🤔🤨

Those were taken with two different sensor sizes, and I can't tell what you did there. One of them doesn't even seem to be in focus. Also, they're stacks made from multiple images, aren't they? If so, that adds a variable that voids the strict analysis of pixel size comparisons. Comparisons have to be rigorously controlled to be meaningful.

Show me a better setup in the world for a controlled environment for shooting live 10x subjects 🙄😊😂

Having a controlled environment is not the same as controlling all the variables in a testing methodology. You clearly did not eliminate extraneous variables so that only pixel size varied. The exposures look different, the focus points look inconsistent, and you introduced variables associated with image stacking.

-

@DonaldB has written:

But that's the whole point: my microscope objectives are eq to f16 FF, which is equal to f45 m43.

I thought it was the other way around...

-

@sybersitizen has written:@DonaldB has written:@sybersitizen has written:@DonaldB has written:@sybersitizen has written:

If you could take a 10x image with a much higher resolution full frame camera, then we could see a meaningful comparison between that and your present camera. There's no reason to doubt that the effects of diffraction would be no worse if both were viewed at the same size, and the higher resolution version might be visibly better.

I posted some images on here; don't ask how we got into the discussion on that thread 🤔🤨

Those were taken with two different sensor sizes, and I can't tell what you did there. One of them doesn't even seem to be in focus. Also, they're stacks made from multiple images, aren't they? If so, that adds a variable that voids the strict analysis of pixel size comparisons. Comparisons have to be rigorously controlled to be meaningful.

Show me a better setup in the world for a controlled environment for shooting live 10x subjects 🙄😊😂

Having a controlled environment is not the same as controlling all the variables in a testing methodology. You clearly did not eliminate extraneous variables so that only pixel size varied. The exposures look different, the focus points look inconsistent, and you introduced variables associated with image stacking.

Yeah, I have no idea what I'm doing 🙄

-

@DonaldB has written:

So are you saying that the OM-1 26 meg sensor has the same diffraction limit as the GH5S 10 meg? 🤔

Where did you get the idea that the OM-1 has a 26MP sensor, and where did you get the idea that the GH5 has a 10MP sensor???

They are both 20MP...

-

@Bryan has written:@DonaldB has written:

So are you saying that the OM-1 26 meg sensor has the same diffraction limit as the GH5S 10 meg? 🤔

Where did you get the idea that the OM-1 has a 26MP sensor, and where did you get the idea that the GH5 has a 10MP sensor???

They are both 20MP...

The Panasonic GH5S is a video-focused Micro Four Thirds camera built around what the company markets as a 10.2MP sensor.

GH6 26 meg, been a while.
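To put the pixel counts in this exchange on a common footing, here is a minimal sketch that estimates pixel pitch from a megapixel figure, assuming square pixels tiling a nominal 17.3 × 13.0 mm m43 area (multi-aspect sensors such as the GH5S's don't fit this exactly). It also prints the f-number at which the Airy disk (550 nm assumed) spans two pixels, a common rule of thumb for when diffraction softening starts to show at a 100% view. Per the Kasson quote earlier in the thread, that is about visibility at the pixel level, not a change in the blur the lens produces.

```python
import math

M43_AREA_MM2 = 17.3 * 13.0  # assumed nominal Four Thirds active area
WAVELENGTH_UM = 0.55        # assumed green light


def pixel_pitch_um(megapixels: float, area_mm2: float = M43_AREA_MM2) -> float:
    """Approximate pitch of square pixels tiling the given area."""
    # area in um^2 divided by the pixel count gives the area of one pixel
    return math.sqrt(area_mm2 * 1e6 / (megapixels * 1e6))


def two_pixel_airy_f_number(pitch_um: float, wavelength_um: float = WAVELENGTH_UM) -> float:
    """f-number at which the Airy disk diameter equals two pixel pitches (rule of thumb)."""
    return 2.0 * pitch_um / (2.44 * wavelength_um)


# Pixel counts as quoted in the thread; labels are only for display
for label, mp in (("20 MP", 20.0), ("10.2 MP", 10.2), ("26 MP", 26.0)):
    pitch = pixel_pitch_um(mp)
    print(f"{label}: ~{pitch:.2f} um pitch, Airy disk reaches 2 px near f/{two_pixel_airy_f_number(pitch):.1f}")
```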

-

@DonaldB has written:@Bryan has written:@DonaldB has written:

So are you saying that the OM-1 26 meg sensor has the same diffraction limit as the GH5S 10 meg? 🤔

Where did you get the idea that the OM-1 has a 26MP sensor, and where did you get the idea that the GH5 has a 10MP sensor???

They are both 20MP...

The Panasonic GH5S is a video-focused Micro Four Thirds camera built around what the company markets as a 10.2MP sensor.

GH6 26 meg, been a while.

OK, I wasn't aware of the S version. But why even mention a "video centric" version in this discussion???