Of course, "die" and "soon" have a lot of latitude, but here's what I'm thinking. I was at an art show not long ago, and there were a few photographers there selling prints for really high prices (by "high", I mean that even if I liked the photo a lot, there's no way I'd pay that price for it). The vast majority of the photos bordered on digital art. That is, you could tell that they were heavily processed. So much so that my wife was really put off by most of it, whereas I just took it to mean that that's the style that sells now -- at least, it's the style that sells to people who will pay "that much" for a photo.

Anyway, I almost always strive for technical perfection myself, and I do a fair amount of processing on my photos (where by "processing", I mean getting the parameters for the RAW conversion "just right", sometimes trying multiple conversions and merging them with various weights, etc.). But I usually shy away from settings that take my photo into the realm of "digital art". Where that line falls is, of course, subject to the viewer's aesthetics, but my processing is certainly lighter than what I saw at the art show.

However, I got to thinking. What if I just snapped a pic of the scene with my smartphone, and there were software so advanced that it could make it have the same "high IQ" as my 45 MP FF camera? And, using AI, I could also just tell it how to tweak the photo to get the look I want, just like I tweak the parameters in the RAW conversion, but using natural language? And then the resulting photo actually looks like a photo -- not some AI monstrosity or highly polished piece of digital art, but actually looks just like a photo? With [AI interpolated] resolution as high as I want it?

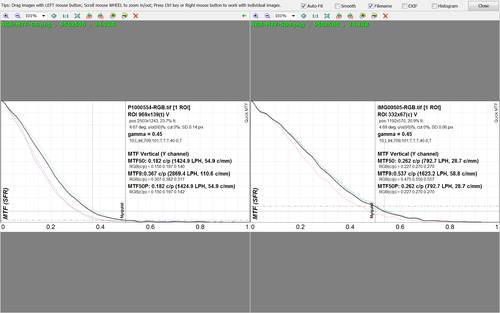

We're not there yet. I don't think there's any software that can take a photo from a smartphone and make it just as good (IQ-wise) as a photo from a high MP FF camera at 100% viewing enlargement. I mean, sure, smartphone photos are often already "as good as" dedicated camera photos when viewed on a phone, and sometimes smartphones stack photos to get an even higher IQ photo than a single exposure from a dedicated camera, but as a general rule, "large" sensor cameras produce photos with "significantly higher IQ".

But I think that might no longer be the case in the very near future. I'm thinking that a smartphone photo combined with AI software will be able to produce results as good as and as "natural looking" as those from even a 45 MP FF digital camera. In fact, they might even be "better", since the AI processing will be far, far, far more capable than what the vast majority of photographers can manage.

This will be possible because the software will be able to create realistic looking fake detail. And it will be able to remove detail better, too. That is, it could create the illusion of shallow DOF with "perfect" bokeh, if you so desired. It could make a still shot look perfectly panned with motion blur. It could do anything I could do, and do it better.

Now, for sure, there will still be individuals out there who will excel, and outdo the software. But the rest of us mere mortals won't be able to. And more than that, the rest of us mortals couldn't tell the difference, anyway, unless the photos were side-by-side, and even then, half the time we'd get wrong which was "real" and which was "AI enhanced".

In short, I've been in a rush to get what I consider my "last camera" and "last lenses", because what's out there now is so good that, even though there will always be better, better won't make any difference to my photography. In that rush, I think I forgot that I might already be carrying my "last camera" with me all the time, while I text and browse Reddit with it.