Just think what AI will be able to do in 5 more years, let alone 10!

-


AI will never be able to "think"

-

@DonaldB has written:

AI will never be able to "think"

This 'never' is disputable: we currently do not know how the human brain thinks. It may be an emergent phenomenon of a similar kind of information processing to what AI currently does, or something completely different.

-

@ArvoJ has written:@DonaldB has written:

AI will never be able to "think"

This 'never' is disputable: we currently do not know how the human brain thinks. It may be an emergent phenomenon of a similar kind of information processing to what AI currently does, or something completely different.

Robotic machines have not taken over industry, and haven't for 40 years; a human can still run rings around robotic machines. In fact, Excel back in the '80s, when I was working for Apple, was better than the current Excel I have on my Windows 11 PC. We have gone backwards; the only difference is that things look pretty today. I used to design MRP systems that could store, process, and recover 1 million transactions from 10 different file systems on a 20 MB hard drive at lightning speed. Are we doing that today? 🤔

-

@ArvoJ has written:@DonaldB has written:

AI will never be able to "think"

This 'never' is disputable: we currently do not know how the human brain thinks. It may be an emergent phenomenon of a similar kind of information processing to what AI currently does, or something completely different.

Given who the Americans voted for, I should think that AI working differently than the human brain would be a plus, to tell the truth. 😟

That aside, the AI-aided reconstruction of the photo of the person's wife I posted above tells me that if "realistic" photography is your goal, then a smartphone will soon be able to do what the best cameras available today can do. If creative photography is your goal, then AI will offer significant tools to allow your creativity to do far more than it could before.

-

@GreatBustard has written:@ArvoJ has written:@DonaldB has written:

AI will never be able to "think"

This 'never' is disputable: we currently do not know how the human brain thinks. It may be an emergent phenomenon of a similar kind of information processing to what AI currently does, or something completely different.

Given who the Americans voted for, I should think that AI working differently than the human brain would be a plus, to tell the truth. 😟

That aside, the AI-aided reconstruction of the photo of the person's wife I posted above tells me that if "realistic" photography is your goal, then a smartphone will soon be able to do what the best cameras available today can do. If creative photography is your goal, then AI will offer significant tools to allow your creativity to do far more than it could before.

What happened to her hand?

AI couldn't guess she was holding a dive mask.

-

@DonaldB has written:

...it all sounds great, but you missed perspective distortion, which AI will never be able to do, especially on faces and people. I also noticed my daughter's iPhone 6 was using ISO 25. I have also noticed that AI is "fake": it's not intelligent, it's copying, not creating. There are no images like my extreme macro anywhere in the world, so AI has nothing to copy.

With proper training, an advanced AI can do anything you can imagine and much more. As has already been demonstrated in various fields, AI finds solutions that people never even realized could exist.

For example, an AI can be asked to simulate any perspective distortion produced by any lens ever put on the market, and it can be asked to adjust any image according to the users' wishes. The only real problems are the cost and usefulness of such investments.😀

-

@ArvoJ has written:@DonaldB has written:

AI will never be able to "think"

This 'never' is disputable: we currently do not know how the human brain thinks. It may be an emergent phenomenon of a similar kind of information processing to what AI currently does, or something completely different.

"Reasoning and Logic:

Advanced (AI) models can perform logical reasoning, solve riddles, or answer questions requiring multi-step reasoning, despite no explicit focus on these tasks during training."

Emergent Behavior in Generative AI -

@DonaldB has written:

What happened to her hand?

AI couldn't guess she was holding a dive mask.

Not yet. Five years, ten on the outside. And it will be capable of a lot more than just that. A good way to think about it is self-driving cars. Once the reliability of the system results in fewer accidents overall than human drivers, it is the better solution. Yes, AI will always fail from time to time, but on the whole, it will do significantly better than humans can do.

So, sure, for this photo or that photo, someone may still be able to do a better job of processing than AI. But AI will do better than even the best of them most of the time, and certainly better than the rest of us pretty much all the time. For example:

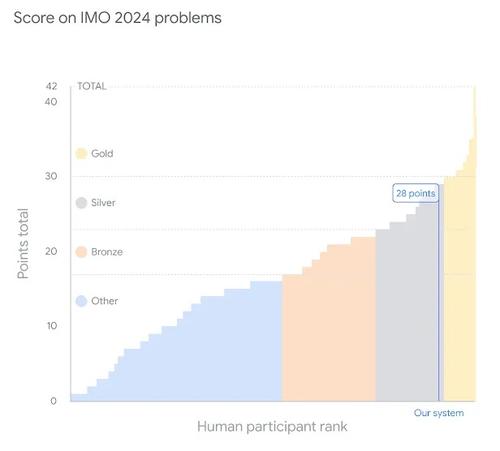

deepmind.google/discover/blog/ai-solves-imo-problems-at-silver-medal-level/

And that's now: AI is outperforming elite mathematicians more often than not. So, whether you like it or not, Don, it's coming. Yes, humans will win every now and then, but only every now and then. What people will do in response is change the definition of "the win". Photography will likely continue as it has been going with regard to photos taken with smartphones (the superior AI will be seen as an advancement rather than a revolution, as current smartphone photos are already more than "good enough" for most people most of the time). For the "purist" crowd, it may be back to film, or at the very least OOC JPEGs for "authenticity", and then we'll be seeing debates galore about which camera has the best OOC JPEG engine, only to find that the "best" one is AI-powered. : )

-

@DonaldB has written:

AI will never be able to "think"

Thinking's over-rated. Just ask Humans.

They are hard-wired to fill gaps, see things that aren't there, and make decisions not based on ALL the facts.

It's got us to where we are. Research proves it, our brains cut corners.

Now those same brains are being asked to make a judgement call on when near enough is good enough, for a technology that will very soon be making decisions based on vastly more data than we use ourselves.

So which will be more desirable; "human" or "better than human" ?

We ARE going to be left behind, and those who currently have "control" WILL lose it, probably in some hilarious twist so beloved by science fiction writers.

Asimov's Three Laws of Robotics are long forgotten; just look at Ukraine.

Point is, our brains can't visualise what's next, so, one game at a time, boys, one game at a time.

Time for a peat drink, methinks.

-

@RonP has written:@DonaldB has written:

AI will never be able to "think"

Thinking's over-rated. Just ask Humans.

They are hard-wired to fill gaps, see things that aren't there, and make decisions not based on ALL the facts.

It's got us to where we are. Research proves it, our brains cut corners.

Now those same brains are being asked to make a judgement call on when near enough is good enough, for a technology that will very soon be making decisions based on vastly more data than we use ourselves.

So which will be more desirable; "human" or "better than human" ?

We ARE going to be left behind, and those who currently have "control" WILL lose it, probably in some hilarious twist so beloved by science fiction writers.

Asimov's Three Laws of Robotics are long forgotten; just look at Ukraine.

Point is, our brains can't visualise what's next, so, one game at a time, boys, one game at a time.

Time for a peat drink, methinks.

My daughter just started her second degree to become a naturopath; she is a science/biology/phys ed teacher at the moment. In her first week back she took on the whole class and the lecturer on the cons of AI in the classroom. The class didn't have a chance. The lecturer told her after the class that he had never seen anyone do anything like that before. She has an amazing mind for a 22-year-old; she said she did it because she can 🫣🤨 You must remember that humans also get better as the generations pass, otherwise we would still be in caves. When we can build a flying, eating, self-fuelled insect 2 mm in size, I will agree with you 😁

-

@GreatBustard has written:@DonaldB has written:

What happened to her hand?

AI couldn't guess she was holding a dive mask.

Not yet. Five years, ten on the outside. And it will be capable of a lot more than just that. A good way to think about it is self-driving cars. Once the reliability of the system results in fewer accidents overall than human drivers, it is the better solution. Yes, AI will always fail from time to time, but on the whole, it will do significantly better than humans can do.

So, sure, for this photo or that photo, someone may still be able to do a better job of processing than AI. But AI will do better than even the best of them most of the time, and certainly better than the rest of us pretty much all the time. For example:

deepmind.google/discover/blog/ai-solves-imo-problems-at-silver-medal-level/

And that's now: AI is outperforming elite mathematicians more often than not. So, whether you like it or not, Don, it's coming. Yes, humans will win every now and then, but only every now and then. What people will do in response is change the definition of "the win". Photography will likely continue as it has been going with regard to photos taken with smartphones (the superior AI will be seen as an advancement rather than a revolution, as current smartphone photos are already more than "good enough" for most people most of the time). For the "purist" crowd, it may be back to film, or at the very least OOC JPEGs for "authenticity", and then we'll be seeing debates galore about which camera has the best OOC JPEG engine, only to find that the "best" one is AI-powered. : )

Oof, camera JPEGs are at their best now. Last year I put up a challenge on the Sony forum to see who could process a better-quality image from a raw file than the camera JPEG. The camera JPEG won, until I posted the JPEG image so everyone could compare their raw edits to it and make critical changes.

-

@DonaldB has written:@ArvoJ has written:@DonaldB has written:

AI will never be able to "think"

This 'never' is disputable: we currently do not know how the human brain thinks. It may be an emergent phenomenon of a similar kind of information processing to what AI currently does, or something completely different.

Robotic machines have not taken over industry, and haven't for 40 years; a human can still run rings around robotic machines. In fact, Excel back in the '80s, when I was working for Apple, was better than the current Excel I have on my Windows 11 PC. We have gone backwards; the only difference is that things look pretty today. I used to design MRP systems that could store, process, and recover 1 million transactions from 10 different file systems on a 20 MB hard drive at lightning speed. Are we doing that today? 🤔

AI is much better than me at searching through loads of sites, reading all the results and trying to sort the wheat from the chaff.

-

@DonaldB has written:@GreatBustard has written:@NCV has written:

This is a pretty amazing reconstruction. Topaz has been doing this sort of thing in a lesser way for some time, but this seems to be much better.

Just think what AI will be able to do in 5 more years, let alone 10!

AI will never be able to "think"

I think it is perfectly reasonable to be suspicious of the AI hype, like any other hype. Current AI has clear areas of weakness, and who knows whether they will become worse, e.g. when AI gets trained on AI-produced datasets ('slop', I think they call it). But there is a long tradition of people saying 'never' about new technologies and ending up with egg on their face...

-

@xpatUSA has written:@DonaldB has written:@ArvoJ has written:@DonaldB has written:

AI will never be able to "think"

This 'never' is disputable: we currently do not know how the human brain thinks. It may be an emergent phenomenon of a similar kind of information processing to what AI currently does, or something completely different.

Robotic machines have not taken over industry, and haven't for 40 years; a human can still run rings around robotic machines. In fact, Excel back in the '80s, when I was working for Apple, was better than the current Excel I have on my Windows 11 PC. We have gone backwards; the only difference is that things look pretty today. I used to design MRP systems that could store, process, and recover 1 million transactions from 10 different file systems on a 20 MB hard drive at lightning speed. Are we doing that today? 🤔

AI is much better than me at searching through loads of sites, reading all the results and trying to sort the wheat from the chaff.

Absolutely, but as my daughter says, there's also a lot of incorrect information in there, and when studying health you can't afford misinformation. She has been given her password for medical professionals to access all the research papers, and she said AI is nowhere near the accuracy of the research papers; for critical decisions regarding health, they have to make judgements for themselves.

-

@AlanSh has written:

Just read this:

Alan

It's worth reading the article to see just how bad AI can be at creating supposedly valid representations. You would think that in the field of law the AI would be more likely to get it right.

If you do read the article, it's worth a quick browse of the comments. A couple that caught my eye:

"People do not understand that LLMs (I refuse to call them AIs) are just tools to help you save time. They're not miracles. You have to think of them as extremely junior assistants who know millions of books by heart but do not understand the knowledge. You, as a human, must do your due diligence to check the work of the LLM as you would with a junior assistant."

And a response to the above:

'Agreed. They’re basically autocomplete apps that guess what the next word will be based on probabilities. Turns out that those can be very good guesses when you use a big chunk of the internet to train them, but there isn’t any “thinking” going on.'
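To make the "autocomplete" description concrete, here is a minimal sketch assuming a toy bigram model: it just counts which word follows which in a tiny made-up corpus and turns those counts into probabilities. Real LLMs use neural networks over subword tokens and far more context, so this only illustrates the "guess the next word from probabilities" idea; the function names and corpus are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def next_word_probabilities(follows, word):
    """Turn the raw follow-counts for `word` into probabilities."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

def autocomplete(follows, word):
    """Guess the single most probable next word, or None if the word was never seen."""
    probs = next_word_probabilities(follows, word)
    return max(probs, key=probs.get) if probs else None

corpus = "the camera takes the photo and the camera saves the file"
model = train_bigrams(corpus)
print(next_word_probabilities(model, "the"))  # {'camera': 0.5, 'photo': 0.25, 'file': 0.25}
print(autocomplete(model, "the"))             # camera
```

The model never "knows" what a camera is; it only reports that "camera" followed "the" most often in what it was fed.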

The important misconception about AI is that it can "think". It can't think. It can only create a supposition based on the material it has at its disposal, and any level of correctness assumes that the algorithms apportion the correct weight to each piece of data. All that can happen is that the system is trained to improve that weight allocation, and even if it has its own feedback loop to correct itself (which assumes it is capable of realising it is wrong), that is still not thinking.
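The "trained to improve that weight allocation" step can be sketched with a single weight nudged by an error signal. This is a minimal sketch assuming plain gradient descent on squared error for a one-weight model y ≈ w·x; real systems adjust billions of weights, but the feedback principle (measure the error, shift the weight to reduce it) is the same. The data, learning rate, and function name are made up for illustration.

```python
def train_weight(samples, learning_rate=0.05, steps=200):
    """Fit y ≈ w * x by repeatedly nudging w against its error (gradient descent)."""
    w = 0.0  # start with no knowledge of the relationship
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w:
        # d/dw mean((w*x - y)^2) = mean(2 * (w*x - y) * x)
        grad = sum(2 * (w * x - y) * x for x, y in samples) / len(samples)
        w -= learning_rate * grad  # the feedback loop: move w to shrink the error
    return w

# Hypothetical data generated by y = 3x, so the ideal weight is 3.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]
w = train_weight(data)
print(round(w, 3))  # 3.0
```

The loop ends up with the "right" weight purely by reducing a number; nothing in it understands what x and y mean.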

And that's why I hate the term AI. It's not really intelligence. It's just adding more complex layers to problem-solving, which in the computer world was always done with hand-coded explicit instructions. So each layer requires ever more complex levels of explicit instructions; that is not thinking as we know it, as we are capable of.

-

@Bryan has written:

And that's why I hate the term AI. It's not really intelligence. It's just adding more complex layers to problem-solving, which in the computer world was always done with hand-coded explicit instructions. So each layer requires ever more complex levels of explicit instructions; that is not thinking as we know it, as we are capable of.

The thing is, we don't really have a good working definition of "intelligence" to start with. Most people work from the proposition that "I don't know what it is, but I know it when I see it." However, the current AIs (LLMs, if you prefer) are unable to change once trained. The next-gen AIs will be able to learn and alter their own programming. That will be a game changer.