I don't really know how that will work or how effective it could / will be.

I compare the massively parallel network of our brains to computer networks. There are up to 100 billion neurons in our brain. Each neuron has an average of 1,000 synapses (connections to other neurons), but there can be up to 200,000. If we consider a neuron as storing a piece (bit / byte, whatever) of data (a poor analogy, but I don't have another), how does that compare to a computer system?

In the computer world we can store an incredible amount of data. But how connected is that data from one storage site to another? In computer storage there is no connection between stored items; all we can do is retrieve the data we want into processor memory and act on it there. Processor memory is quite limited in size compared to storage. We can network many processors together, but what is the physical limit? We can't connect anywhere near as many processors together in a massively parallel sense as neurons are connected. We can build and network groups of processors, then network those groups together, and so on, and get something quite large and distributed. But there always need to be control layers that dictate what all these connections are doing. Those control layers grow exponentially the more we try to expand the network.

Every process occurring in this system (from data retrieval, to processing in local groups, to processing in larger groups, to the layered control that coordinates the whole thing) can only happen via explicit instructions. Each process produces an outcome. Higher-level control can then group lower-level outcomes. Each step requires a branch in the code / explicit instructions. Now it is possible to keep adding layers that branch control, giving higher-level outcomes. At some point we may have the required outcome, or we may need to go back to some prior point and reprocess with other parameters (which requires more branches in the code). Once we have an outcome it is possible to store it so we don't need to recalculate it. That step may be considered by some as learning.

The system could store many outcomes. What happens when we want to retrieve a stored outcome? Another control layer has to decide which of the stored outcomes is the relevant answer or solution to retrieve: once again, explicit instructions. We can grow the system as large as we like (within size and power constraints; note they are already talking about the large amounts of electrical power these "ai" systems are using), but sitting over the top of it there is always a control layer that operates from explicit instructions. The uppermost layer can't learn; to change, it needs some external input.

All I am trying to say is that it is physically impossible to create a computer system that can mimic the thinking / learning capacity of our brains. Contrary to what Hollywood would have us believe (that Skynet became self-aware, which is another whole level of consciousness above a networked system requiring sensory input), it just isn't physically or computationally possible.

The media love it because it fuels their existence. Some academics love it because it gives them cause or relevance. The gullible suck it up and, just like a conspiracy theory, it gathers its own momentum until at some point the wheels fall off when reality finally hits home.

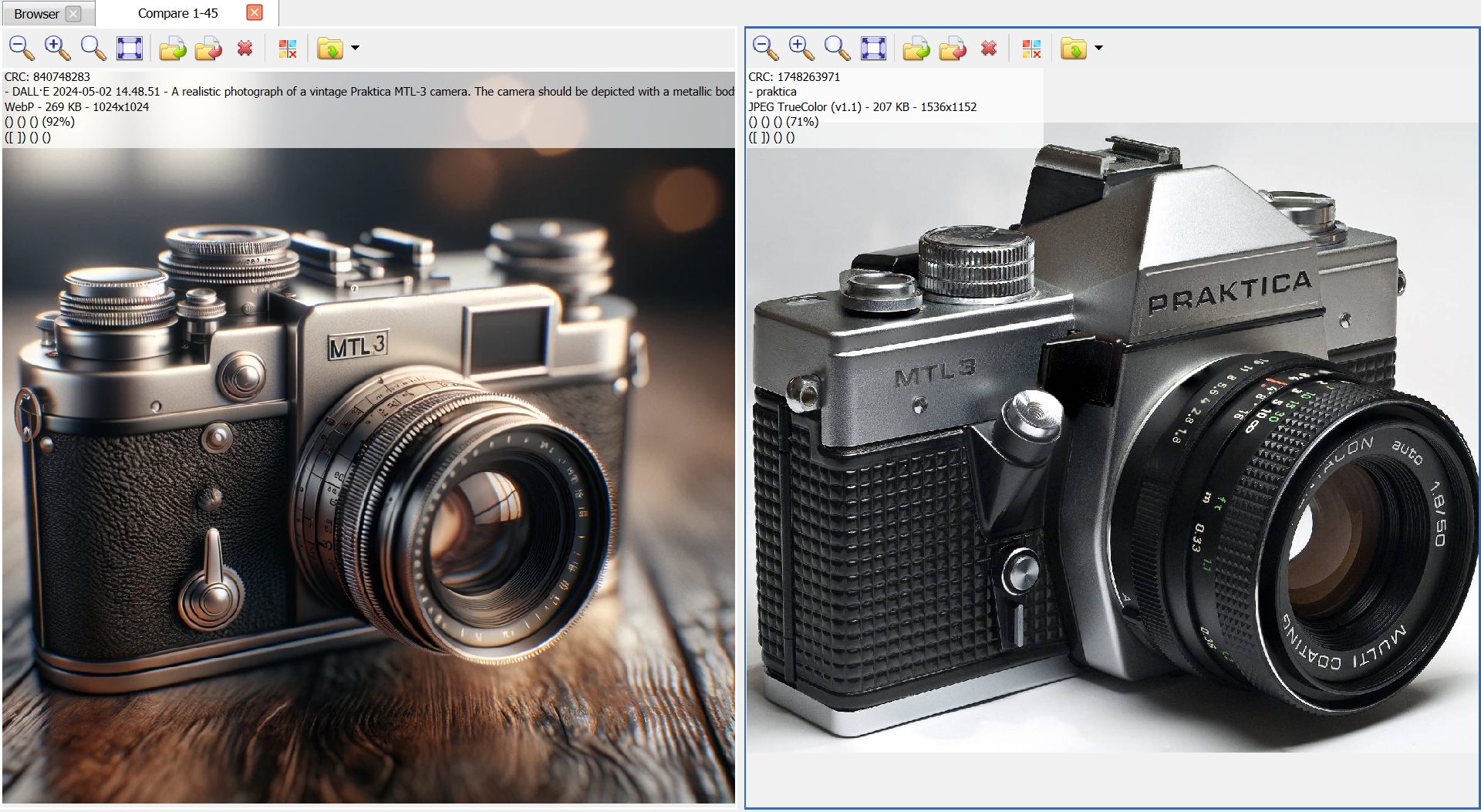

Now that's not to say that a system couldn't generate images on demand, but I am struggling to see how a system could realistically generate the whole gamut of potential images we all capture. It may give me an eagle in flight, but how big would the system need to be to give me many variations on all birds / wildlife / flowers / landscapes / cityscapes etc? It would require a ridiculous amount of stored images / data to draw from, and then it would need the ability to present what it creates in a realistic / plausible way...

Phew...😬