Does anyone know if this method of noise reduction could be added to the A9 III for stills via a firmware update? The A9 III can shoot at 120 frames per second, so what if the camera shot at 40 frames per second with advanced noise reduction? 😉

-

I would guess that only Sony can answer this with any authority.

-

There are a few guys on this forum who could answer this question.

-

Why should they bother to open the can of motion artifacts?

So just shoot a burst at 120fps on the A9.3 and apply temporal denoising in post.
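A minimal sketch of the simplest form of temporal denoising, plain per-pixel averaging of a burst, shows why it works and where it breaks. It assumes the frames are already aligned, the scene is static, and every frame carries independent Gaussian noise; under those assumptions the noise falls roughly as 1/√N. The function names are hypothetical, and real temporal denoisers add alignment and motion rejection on top of this.

```python
import random
import statistics


def simulate_burst(scene, n_frames, noise_sigma, rng):
    """Return n_frames noisy copies of a static 1-D scene (a list of floats).

    Assumption: independent Gaussian noise per pixel per frame, no motion at all.
    """
    return [[v + rng.gauss(0.0, noise_sigma) for v in scene] for _ in range(n_frames)]


def temporal_mean(frames):
    """Average the frames pixel by pixel: the simplest temporal denoiser."""
    n = len(frames)
    return [sum(values) / n for values in zip(*frames)]


def residual_noise(image, scene):
    """Standard deviation of the difference between an image and the true scene."""
    return statistics.pstdev([a - b for a, b in zip(image, scene)])


if __name__ == "__main__":
    rng = random.Random(42)
    scene = [100.0] * 10_000  # a flat grey patch
    burst = simulate_burst(scene, 16, noise_sigma=10.0, rng=rng)
    print("single frame noise:", round(residual_noise(burst[0], scene), 2))
    print("16-frame mean noise:", round(residual_noise(temporal_mean(burst), scene), 2))
```

With 16 frames the second figure should land near 10/√16 = 2.5. Any camera or subject movement between frames breaks the static-scene assumption, which is exactly where the motion artifacts mentioned above come from.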

-

Why not just shoot in single-shot mode, where 3 frames are mashed together in camera for cleaner images? 🙄

-

Recent OM System cameras do something similar in single-shot mode:

2|4|...128 frames squeezed into a single image

It's called "LiveND".

-

Live ND just replicates an ND filter by blurring, say, a waterfall or the ocean. I had that on my E-M1 Mark II.

-

Nope.

The E-M1 Mark II doesn't have the Live ND feature at all; it was introduced with the E-M1X and later high-end Olympus cameras.

learnandsupport.getolympus.com/learn-center/photography-tips/browse-tips-by-camera-feature/live-nd-mode has written:

You can find Live ND Mode on these cameras: OM SYSTEM OM-1, OM-D E-M1 Mark III, OM-5, OM-D E-M1X.

And also the OM-1 Mark II 🙂

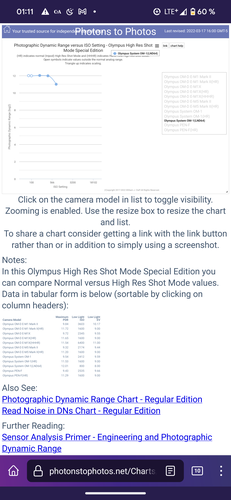

Here you can see what it does to DR (LND64 = 64 exposures combined, giving more DR and less noise), from photonstophotos.net/Charts/PDR_HighResShotMode.htm.

-

@AlanSh has written:

I would guess that only Sony can answer this with any authority.

Edit: I thought Donald switched to video in his second sentence, so I answered for video, and this isn't directly relevant to his query, but I'll leave it here.

It is up to Sony what is done in-camera, but anyone can process the frames in post-processing, including changing the frame rate with temporal resampling. The only problem is that most cameras I am aware of do not record audio above 60fps, because of the assumption that higher frame rates will be used for slow-motion playback. So, 120fps video is typically encoded to play back at 60fps, without audio. That was a very short-sighted standard, IMO. One of my laptops has a 360Hz display and can play 120fps near-flawlessly: each video frame is held for 3 display refreshes on average (mostly 3, with the occasional 2 or 4 when things fall out of sync), without any frames being dropped completely.
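To make the frame-cadence arithmetic concrete, here is a small Python sketch that counts how many display refreshes each video frame is held for. It assumes perfectly locked clocks and a simple sample-and-hold player that, at each refresh, shows the latest frame that has already arrived; the occasional 2 or 4 seen in practice comes from clock drift, which this deliberately ignores. The function name is hypothetical.

```python
from collections import Counter


def refreshes_per_frame(video_fps, display_hz, seconds=1):
    """How many display refreshes each video frame is held for.

    Assumptions: perfectly locked clocks and a sample-and-hold player that shows,
    at each refresh, the latest frame whose timestamp has already passed.
    Integer arithmetic avoids floating-point rounding of the timestamps.
    """
    counts = [0] * (video_fps * seconds)
    for k in range(display_hz * seconds):        # k-th refresh at t = k / display_hz
        frame = (k * video_fps) // display_hz    # latest frame with t_frame <= t
        counts[frame] += 1
    return counts


if __name__ == "__main__":
    print(Counter(refreshes_per_frame(120, 360)))  # 360Hz laptop display
    print(Counter(refreshes_per_frame(120, 60)))   # ordinary 60Hz display
    print(Counter(refreshes_per_frame(120, 30)))   # 4K 30Hz monitor
```

With 120fps on a 360Hz display every frame gets exactly 3 refreshes; at 60Hz every other frame is never shown, and at 30Hz three out of every four frames are dropped.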

So, Donald's video would be fine at 120fps on my laptop; it might benefit a little from further noise reduction (either spatial or temporal). But if it is going to be played on someone's 4K 30Hz monitor, quick-and-dirty media players will drop about 3 out of every 4 frames, making the video choppy with a low effective shutter angle, and apparent noise will increase. For that display, you want a smart, efficient media player that can resample the frame rate in real time if the hardware allows, or you want to do that in editing and create a 30fps version of the video. On a 60Hz display, a 120fps video may drop every other frame, playing odd frames for a while until it gets out of sync, then a run of even frames, and so on. It will be choppy unless the shutter angle was about 360 degrees, and it will be noisier than on a >120Hz display if there is no noise reduction or proper (weighted) frame-rate downsampling.
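The difference between a player that drops frames and one that resamples can be sketched as follows. Here `decimate` keeps every 4th frame (a temporal nearest neighbor), while `box_downsample` averages each group of 4 frames, which is just one simple choice of weighted downsampling; the shutter-angle helper uses the usual definition, 360° × exposure time × frame rate. All names are illustrative, and the clip is synthetic noise on a flat patch.

```python
import random
import statistics


def shutter_angle(exposure_s, fps):
    """Shutter angle in degrees: the exposed fraction of each frame interval, times 360."""
    return 360.0 * exposure_s * fps


def decimate(frames, factor):
    """Temporal 'nearest neighbor': keep every factor-th frame and drop the rest."""
    return frames[::factor]


def box_downsample(frames, factor):
    """Average each group of `factor` consecutive frames into one output frame.

    Frames are lists of pixel values; a trailing partial group is discarded.
    """
    out = []
    for start in range(0, len(frames) - factor + 1, factor):
        group = frames[start:start + factor]
        out.append([sum(values) / factor for values in zip(*group)])
    return out


if __name__ == "__main__":
    rng = random.Random(1)
    # One second of a 120fps clip: a static, flat patch with noise sigma = 8 per frame.
    clip = [[rng.gauss(100.0, 8.0) for _ in range(2_000)] for _ in range(120)]
    for name, out in (("drop 3 of 4", decimate(clip, 4)), ("average 4", box_downsample(clip, 4))):
        noise = statistics.mean([statistics.pstdev(frame) for frame in out])
        print(f"{name}: {len(out)} frames at 30fps, per-frame noise ~{noise:.1f}")
    print(f"1/240 s at 120fps: {shutter_angle(1 / 240, 120):.0f} degrees")
    print(f"same frames decimated to 30fps: {shutter_angle(1 / 240, 30):.0f} degrees")
```

Dropping frames leaves the per-frame noise where it was, while averaging 4 frames should roughly halve it. Likewise, a 120fps clip shot at 1/240 s has a 180° shutter angle, but the same frames decimated to 30fps play back with an effective 45°, hence the choppiness.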

Unfortunately, "the best thing to do" with video does not have a universal answer with current standards; it depends on where the video is going to be played. It's somewhat analogous to handing out your stills to random software: if the software uses nearest neighbor to downsample, then the higher the resolution of the file you give out, the noisier and more distorted nearest neighbor will make it. With software that resamples properly, though, it is best to supply full resolution. A video player that "drops frames" to play at a lower rate for the display is like a temporal "nearest neighbor".

-

@DonaldB has written:

@finnan has written:

Why should they bother to open the can of motion artifacts?

So just shoot a burst at 120fps on the A9.3 and apply temporal denoising in post.

Why not just shoot in single-shot mode, where 3 frames are mashed together in camera for cleaner images? 🙄

If you're talking about bursts of stills, then one of two things could happen if your wish were granted. Either the camera just stacks the 3 frames dumbly, in which case you will get artifacts with 3 ghosts if the camera or a subject is moving; or the camera intelligently aligns the frames, in which case subject motion may still ghost, even if camera motion is corrected. The latter is computation-intensive and may put a damper on the frame rate, making even 40 output frames per second impossible.
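A toy 1-D example shows the two cases described above. A bright edge is captured three times with the camera shifted by 0, 3 and 6 pixels; `naive_stack` averages the frames as captured and produces a three-step ghost, while `aligned_stack` first estimates each frame's integer shift by brute-force search (minimum sum of absolute differences against the first frame) and undoes it. This only models global camera shift, not subject motion, and exhaustive search is just one simple alignment method; its per-frame search cost hints at why in-camera alignment may limit the frame rate. All names are hypothetical.

```python
def shift(signal, s):
    """Shift a 1-D signal right by s samples, repeating the edge values as padding."""
    n = len(signal)
    return [signal[min(max(i - s, 0), n - 1)] for i in range(n)]


def estimate_shift(reference, frame, max_shift):
    """Integer shift s (|s| <= max_shift) that best maps reference onto frame, by minimum SAD."""
    best_s, best_err = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        err = sum(abs(a - b) for a, b in zip(shift(reference, s), frame))
        if err < best_err:
            best_s, best_err = s, err
    return best_s


def naive_stack(frames):
    """Average the frames as captured: anything that moved becomes ghosts."""
    n = len(frames)
    return [sum(values) / n for values in zip(*frames)]


def aligned_stack(frames, max_shift=8):
    """Estimate each frame's shift relative to frames[0], undo it, then average."""
    reference = frames[0]
    aligned = [shift(f, -estimate_shift(reference, f, max_shift)) for f in frames]
    return naive_stack(aligned)


if __name__ == "__main__":
    scene = [0.0] * 20 + [100.0] * 20              # a bright edge
    frames = [shift(scene, s) for s in (0, 3, 6)]  # camera moved between frames
    print("naive:  ", [round(v) for v in naive_stack(frames)[18:28]])
    print("aligned:", [round(v) for v in aligned_stack(frames)[18:28]])
```

The naive result shows a staircase of 33% and 67% steps around the edge (the ghosts), while the aligned result reproduces the clean edge.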

Sure, I'd like to see cameras help us by stacking in-camera, but it isn't the most flexible way to do it, and stacking isn't always very beneficial. Often, you're better off just taking longer exposures to get more exposure. If you're already at base ISO and want to go lower, stacking can do that in-camera. I think an old Kodak did just that and had ISO 6, but of course, it probably did not do anything like "bursting" such images. Again, with short exposures, exposure over time is basically like this:

`___ ___ ___`

and like this with long exposures:

`_________________________ _________________________ _________________________`

So, you will get motion artifacts accordingly: not only longer blurs, but hyphenated ones.
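The timelines above can be generated with a small helper that marks when the sensor is actually collecting light during a burst. It is only a sketch: time is in arbitrary integer steps, the frame interval is fixed, and each gap stands for readout/blackout time. Anything moving during the burst only leaves a trail during the `_` segments, which is why stacked motion blur comes out dashed. The function names are hypothetical.

```python
def exposure_timeline(n_frames, exposure, interval):
    """Render when the sensor collects light: '_' = exposing, ' ' = blackout gap.

    One character per time step; requires 0 < exposure <= interval.
    """
    if not 0 < exposure <= interval:
        raise ValueError("need 0 < exposure <= interval")
    span = (n_frames - 1) * interval + exposure
    return "".join("_" if t % interval < exposure else " " for t in range(span))


def duty_cycle(exposure, interval):
    """Fraction of the burst's wall-clock time during which light is collected."""
    return exposure / interval


if __name__ == "__main__":
    print(repr(exposure_timeline(3, 3, 4)))    # short exposures: '___ ___ ___'
    print(repr(exposure_timeline(3, 25, 26)))  # long exposures, still hyphenated
    print("duty cycle:", duty_cycle(3, 4))
```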

-

Thanks. I can see that even at 1/40 sec there is still going to be movement in the frame, and stacking will have the same artifacts as the Olympus cameras I've had in the past showed in hi-res mode.

-

@finnan has written:

Recent OM System cameras do something similar in single-shot mode:

2|4|...128 frames squeezed into a single image

It's called "LiveND".

It's nice that they offer that, but I would say that it isn't simulating ND, but rather creating lower ISOs that come with some motion and total-duration caveats. If each exposure is ISO 200, then a stack of 128 of them is effectively ISO 200/128 = ISO 1.5625. If 128 frames are each ISO 25,600, then the combined output is ISO 200. The latter seems to make no sense when you could have shot at ISO 200 to begin with, without the potential motion artifacts of hyphenation and the longer overall exposure time due to the blackout time between frames, but it does greatly reduce post-gain read noise, which is a major obstacle to maximum DR with current sensors.
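The ISO arithmetic above, plus the read-noise benefit, can be written out as a short Python sketch. `effective_iso` simply divides the per-frame ISO by the number of frames. `stacked_dr_gain_stops` is an idealized upper bound under stated assumptions: read-noise-limited shadows and read noise independent between frames, so combining N frames raises the clipping point N times but the read-noise floor only √N times, i.e. ½·log2(N) stops. Fixed-pattern noise, which does not average down, is ignored. Both names are hypothetical.

```python
import math


def effective_iso(per_frame_iso, n_frames):
    """ISO equivalent of combining n_frames exposures, each taken at per_frame_iso.

    Normalizing the sum of N frames back to one frame's brightness means the
    output needed N times the exposure, i.e. an ISO N times lower.
    """
    return per_frame_iso / n_frames


def stacked_dr_gain_stops(n_frames):
    """Idealized DR gain, in stops, from combining n_frames equal exposures.

    Assumes read-noise-limited shadows and read noise independent between frames:
    the clipping point scales by N, the read-noise floor by sqrt(N).
    Fixed-pattern noise, which does not average away, is ignored.
    """
    if n_frames < 1:
        raise ValueError("need at least one frame")
    return 0.5 * math.log2(n_frames)


if __name__ == "__main__":
    print(effective_iso(200, 128))     # 1.5625 (ISO 200 frames, stack of 128)
    print(effective_iso(25_600, 128))  # 200.0 (ISO 25,600 frames, stack of 128)
    for n in (2, 8, 64, 128):
        print(f"{n:>3} frames: up to {stacked_dr_gain_stops(n):.1f} stops more DR")
```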

Photon noise is the same. Pre-gain read noise is basically the same, except if there is a dual-conversion-gain difference between the per-frame ISOs. However, any fixed-pattern noise becomes clearer as the other noises drop to very low levels, and fixed-pattern noise is what rots the gold in the pot at the end of the stacked-exposure rainbow. To eliminate it almost completely, you would need to pixel-shift the sensor by offsets as large as the largest bands or blotches of fixed-pattern noise. So, a long pixel-shift session would actually be very beneficial to stacked exposure. One could instead subtract a stack of black frames from the stack of exposures without pixel shift, but that only corrects black-point-related fixed-pattern noise and does not address PRNU (photo-response non-uniformity), which only occurs with signal: it scales with the signal, because every pixel has a slightly different sensitivity.
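A toy simulation can illustrate why fixed-pattern noise survives stacking and why shifting the sensor between frames helps. It models only per-pixel offset FPN (not PRNU, and not wide banding, which would need proportionally larger shifts), plus fresh random noise in each frame. Each frame is re-aligned so the same scene point always lands on the same output pixel, so with a shifted sensor that point is recorded by a different sensor pixel each time. The model and names are hypothetical, not a description of any camera's pixel-shift mode.

```python
import random
import statistics


def stacked_fpn_residual(n_frames, width, fpn_sigma, random_sigma, shifts, rng):
    """Residual noise (std) after averaging n_frames of a flat, black scene.

    Hypothetical model: sensor pixel j has a fixed offset fpn[j]; every frame also
    gets fresh Gaussian noise. Frame k is taken with the sensor shifted by the
    non-negative amount shifts[k], so after re-alignment scene point i was
    recorded by sensor pixel i + shifts[k].
    """
    fpn = [rng.gauss(0.0, fpn_sigma) for _ in range(width + max(shifts))]
    acc = [0.0] * width
    for k in range(n_frames):
        s = shifts[k]
        for i in range(width):
            acc[i] += fpn[i + s] + rng.gauss(0.0, random_sigma)
    return statistics.pstdev([v / n_frames for v in acc])


if __name__ == "__main__":
    rng = random.Random(7)
    n, width = 64, 2_000
    no_shift = stacked_fpn_residual(n, width, 1.0, 4.0, [0] * n, rng)
    shifted = stacked_fpn_residual(n, width, 1.0, 4.0, list(range(n)), rng)
    print(f"no shift: {no_shift:.2f}  (the FPN floor remains)")
    print(f"shifted:  {shifted:.2f}  (FPN averaged down too)")
```

Without shifting, the result cannot drop below the FPN level (about √(1 + 16/64) ≈ 1.12 with these settings); with 64 distinct shifts both noise sources average down, to roughly √(17/64) ≈ 0.52.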

ND filters waste light; stacked bursts do not lose any light except what is always lost to the CFA. This is another case of a false sense of correlation leading to the misnaming of something. The need was to be able to record motion blur in bright daylight at low f-ratios. ND filters are what people have traditionally reached for to do that, so when a manufacturer does something else that gives the same end effect, the term "ND" gets used erroneously. People wouldn't need ND filters for blurred waterfalls on bright days if their cameras could take more exposure by having lower ISOs without loss of effective QE.
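The light-budget point can be put into numbers with shot-noise-limited SNR (photon noise is Poisson, so SNR = √photons). The sketch compares one exposure behind an ND64 filter (6 stops) with a burst covering the same duration without ND; the flux value is arbitrary, blackout gaps are ignored by default, and the function names are hypothetical.

```python
import math


def shot_noise_snr(photons):
    """Photon-noise-limited SNR: Poisson noise is sqrt(photons), so SNR = sqrt(photons)."""
    return math.sqrt(photons)


def photons_with_nd(flux, duration, nd_factor):
    """Photons per pixel in one exposure behind an ND filter passing 1/nd_factor of the light."""
    return flux * duration / nd_factor


def photons_from_burst(flux, duration, duty_cycle=1.0):
    """Photons per pixel summed over a burst spanning `duration` (duty_cycle < 1 models blackout)."""
    return flux * duration * duty_cycle


if __name__ == "__main__":
    flux, duration = 10_000.0, 1.0  # arbitrary photons per pixel per unit time
    nd = photons_with_nd(flux, duration, 64)
    burst = photons_from_burst(flux, duration)
    print("ND64 single exposure SNR:", shot_noise_snr(nd))     # 12.5
    print("burst, no ND, SNR:       ", shot_noise_snr(burst))  # 100.0
    print("SNR ratio:", shot_noise_snr(burst) / shot_noise_snr(nd))  # 8.0 from 64x the light
```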

Of course, the output image from a stack always needs enough bit depth; otherwise, it will posterize, unless you add some dither noise before final quantization to 14 or 16 bits. Added dither noise can be very efficient, though, preventing posterization without creating visible noise, because artificial noise can be designed to have no low-frequency content.
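Here is a small sketch of the dithering idea: a smooth gradient is quantized to integer codes with and without dither, and local (block) averages are compared with the true gradient. The dither is formed as the first difference of uniform noise, which is one simple way to get triangular-PDF noise with no low-frequency content; it is an illustrative choice, not something specified above, and the function names are hypothetical.

```python
import random


def quantize(values):
    """Round to the nearest integer code: a stand-in for final 14/16-bit quantization."""
    return [round(v) for v in values]


def highpass_dither(n, rng):
    """Triangular-PDF dither with no DC content: first difference of uniform noise.

    d[i] = u[i+1] - u[i], u ~ U(-0.5, 0.5). Differencing gives a spectrum that is
    zero at DC and rises with frequency, so it breaks up banding without adding
    low-frequency (blotchy) noise.
    """
    u = [rng.uniform(-0.5, 0.5) for _ in range(n + 1)]
    return [u[i + 1] - u[i] for i in range(n)]


def block_means(values, block):
    """Local averages, a rough proxy for what is visible at normal viewing distance."""
    return [sum(values[i:i + block]) / block for i in range(0, len(values) - block + 1, block)]


if __name__ == "__main__":
    rng = random.Random(3)
    ramp = [10.0 + i / 2000 for i in range(4000)]  # a smooth gradient spanning 2 codes
    truth = block_means(ramp, 200)
    plain = block_means(quantize(ramp), 200)
    dither = highpass_dither(len(ramp), rng)
    dithered = block_means(quantize([x + d for x, d in zip(ramp, dither)]), 200)
    print("max banding error, no dither:", round(max(abs(a - b) for a, b in zip(plain, truth)), 3))
    print("max banding error, dithered: ", round(max(abs(a - b) for a, b in zip(dithered, truth)), 3))
```

Without dither, local averages stick to whole codes and miss the gradient by up to almost half a code; with dither, they follow it closely.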

-

@JohnSheehyRev has written:

Edit: I thought Donald switched to video in his second sentence, so I answered for video, and this isn't directly relevant to his query, but I'll leave it here.

To my credit, I just realized where I got the idea that this was about video; the word "video" was in the Subject line of the thread.