HAND.

-

-

Some people are starting to call him out on what looks like a flawed test.

Just another load of bullshit on that forum, from guys (always guys) who want to prove their system is better than the rest, and make up some dubious test to prove the point.

Yes, you get banned for calling out bullshit on that forum.

-

@DonaldB has written:

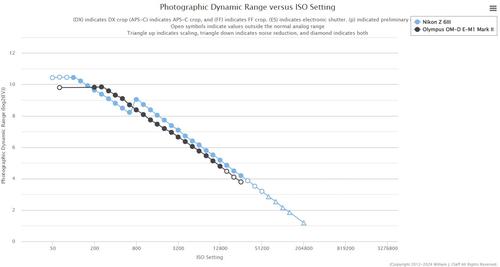

me too, i shoot out door portraits and need the cleanest skin tones in shadows i can get. the z6iii is behind my old em1mk2 for my most common iso, 🤨 its why i switched to FF in the first place.

PDR is not normalized for exposure. As DXOMark shows, Olympus ISO 200 behaves like ISO 100 on most cameras from other brands, including Canon, Nikon and Sony. You should basically move that Olympus graph one stop to the left to get more objective results.
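For anyone who wants to try that shift on exported curve data, here is a minimal Python sketch. The function name and the sample numbers are made up for illustration (they are placeholders, not measurements of any camera), and it assumes the curve is a simple list of (ISO setting, value) points.

```python
def shift_curve_left(curve, stops):
    """Return a copy of an (iso, value) curve with every ISO divided by 2**stops.

    Shifting by one stop plots the ISO 200 point at ISO 100, and so on.
    """
    factor = 2.0 ** stops
    return [(iso / factor, value) for iso, value in curve]


# Placeholder numbers only, NOT real PDR measurements.
example_curve = [(200, 10.0), (400, 9.0), (800, 8.0)]

shifted = shift_curve_left(example_curve, stops=1)
for iso, value in shifted:
    print(f"ISO {iso:g}: {value}")
```

Only the horizontal axis changes; the values themselves are untouched, so this is purely a relabelling of which nominal ISO each point is compared against.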

-

@PeteW has written:

This is a bit of a thread diversion prompted by your screenshot from PTP, but an interesting thread has just started on DPR calling some of Bill Claff's PTP results into question:

Link: www.dpreview.com/forums/thread/4767164

Thread is titled: How reliable are “Photons to Photo” data? G9 vs Canon 6DMII

The problem with DR in general is that people mostly don't know what it means - it's not a direct measure of image quality, but many think that it is, confusing it with signal to noise.

The problem with PDR in particular is that it's not at all clear what it's measuring and why.

-

@NCV has written:@finnan has written:@NCV has written:@finnan has written:@NCV has written:@PeteW has written:@DonaldB has written:

me too, i shoot out door portraits and need the cleanest skin tones in shadows i can get. the z6iii is behind my old em1mk2 for my most common iso, 🤨 its why i switched to FF in the first place.

This is a bit of a thread diversion prompted by your screenshot from PTP, but an interesting thread has just started on DPR calling some of Bill Claff's PTP results into question:

Link: www.dpreview.com/forums/thread/4767164

Thread is titled: How reliable are “Photons to Photo” data? G9 vs Canon 6DMII

An interesting diversion. I cannot ask the question there as Caldwell will instantly ban me, but why was the G9 picture exposed more by 1.73 stops? Surely we want to see the dynamic range with the cameras both set to a certain ISO and exposed at the same shutter speed and aperture, to have the same lightness.

Comparing a 2017 camera with a 2023 camera was a typical DPR forum trick to make a certain system look better.

Maybe somebody with more knowledge of the theoretical side can help out.

You should read Serguei Palto's post, he also explained why he didn't use 2 stops difference like an equivalent photo would require.

Oh, and you apparently also missed that Serguei Palto used a LUMIX G9 Mark I (a January 2018 camera).

Quote from link:

"I have decided to make this post after recent results published at “Photons to Photos” (PTP) on measurements of Dynamic Range (DR) of the latest Lumix G9 Mark II camera, which are in strong disagreement not only with my measurements, but also with real life observations of many G9II users."

He tested the Mk2.

Try again. There he talks about his motivation, not his test.

The G9.2 has 25MP, not 20MP like the original G9

@NCV has written:

His test method is wrong then. Logic tells me that I must start with two pictures of the same lightness at the same ISO to test the dynamic range.

Smells of the usual DPR forum cherry picking.

Not nice.

I understand why you get banned on DPR.

Some people are starting to call him out on what looks like a flawed test.

Just another load of bullshit on that forum, from guys (always guys) who want to prove their system is better than the rest, and make up some dubious test to prove the point.

Yes, you get banned for calling out bullshit on that forum.

It's an interesting pair of protagonists. Prof Palto has, in my experience, a very doctrinaire view of what is 'science', plus a somewhat shameless tendency to bend that 'science' in the interests of his favoured camera format. As a producer of neutral unbiased data, I'd go with Bill Claff over Dr Palto every time. On the other hand, Bill's 'PDR' metric is problematic. It's not at all clear just what it is supposed to measure, because it certainly isn't what is generally considered to be 'dynamic range'. The problem isn't that the results are 'wrong', it's just that one doesn't know exactly what they are supposed to indicate.

Going through the discussion, there's lots of nonsense being talked, for instance, from Palto:

Quoted message: The use of the monitor is enough to not consider PDR seriously, because RGB space is nonlinear (it is gamma-space), while DR assumes the work with the linear data.

So many useless steps which are sources for serious errors....:)

He's made so many silly mistakes here. For a start, the fact that monitors are given so-called 'gamma space' data doesn't mean that the display is non-linear. Gamma coding was originally called 'gamma correction' and was done to counter the non-linear response of a CRT, resulting in a linear output. Modern LCD or OLED monitors should digitally de-gamma to produce the required linear output. Then, even were it true that the monitor is non-linear, it is not necessary to have a linear set of tone patches to measure DR. All you need to know is how bright each patch is, and this can be calculated from the photon count, which in turn can be estimated from the SNR. Frankly, as usual, Palto hasn't a clue what he's talking about. But that still doesn't mean that PDR is a meaningful metric.
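As a rough illustration of that last step, here is a minimal Python sketch. It assumes the patches are bright enough that photon shot noise dominates, so SNR is roughly the square root of the photon count; that assumption breaks down in deep shadows where read noise matters. The function names are made up for this example.

```python
import math


def photons_from_snr(snr):
    """Estimate photon count from a measured SNR.

    Assumes pure shot noise: noise = sqrt(N), so SNR = N / sqrt(N) = sqrt(N).
    """
    return snr ** 2


def stops_between(snr_bright, snr_dark):
    """Brightness difference between two patches, in stops (powers of two)."""
    return math.log2(photons_from_snr(snr_bright) / photons_from_snr(snr_dark))


# A patch measured at SNR 100 versus one measured at SNR 25.
print(stops_between(100.0, 25.0))
```

Nothing here depends on the patches being evenly spaced or on how the monitor maps its input values to light; the brightness of each patch is recovered from the raw data itself.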

-

Thanks for a lucid explanation of this test and the problems of establishing dynamic range indications. I was aware that Claff's graphs are in fact not easy to interpret properly. If I understand it correctly, the camera's interpretation of ISO is not taken into account. I remember my EM5 had a different idea about ISO compared to my D810, producing pictures of differing lightness at the same settings.

Most of the post was beyond my understanding of sensor engineering, but I felt something was off when the two data pictures from the different cameras displayed different lightness.

-

@bobn2 has written:

The problem with DR in general is that people mostly don't know what it means - it's not a direct measure of image quality, but many think that it is, confusing it with signal to noise.

The problem with PDR in particular is that it's not at all clear what it's measuring and why.

he measures DR based on noise and what he considers acceptable, with no account for colour inaccuracy. which is why i like Dustin Abbott's DR tests.

but in all fairness if Bill uses the same test for all cameras then you're comparing them based on the same objectives, irrelevant if the numbers are not accurate.

-

@NCV has written:

Yes, you get banned for calling out bullshit on that forum.

Yes, and the moderator is usually prompted to do this because a number of forum members use the 'complain' button all too frequently.

-

-

@DonaldB has written:@PeteW has written:@NCV has written:

Yes, you get banned for calling out bullshit on that forum.

Yes, and the moderator is usually prompted to do this because a number of forum members use the 'complain' button all too frequently.

not members but sales reps 😁

No. Unpaid sales reps

-

And some members sitting on the sidelines as well 😊

I'll leave you guys to it. Carry on. 😎 👍

-

i wouldnt be trading your z7 for the latest z6iii. just watched another youtube video from a wildlife photographer shooting birds. the z63 doesn't do bird eye af, it actually focuses on the head 🤔 i was there with my a74 with bird eye af shooting the computer monitor: my eye af is a tiny box that fits over the eye itself and was sticky as. thats what you get with 800 focus points compared to 270. lots of porkies advertised with the z63. reviewers are keeping their z7 cameras over it.

-

Most of my subject matter has been standing still for up to a thousand years. I have got all the time in the world to focus.

-

@DonaldB has written:

he measures DR based on noise and what he considers acceptable, with no account for colour inaccuracy. which is why i like Dustin Abbott's DR tests.

It's more systematic than 'what he considers acceptable' but the choice ends up pretty meaningless. His lower limit comes from an old standard for what the SNR of a 'good' image should be, but that's for the highlights of an image and he applies it to the shadows. When you think about it, you're never going to have fine detail in the deepest shadows, so in fact the SNR there can be very low - 2:1 is quite acceptable in my experience. Then he normalises it based on the normal CoC for DOF calculations, which ends up equivalent to about 1MP, which really isn't the kind of resolution people are happy with these days. The high lower bound gives a very low figure, the low resolution cancels that out and makes the figures look reasonable, but purely by accident.

My main issue really is that the first thing to do before inventing a metric is to decide what it will tell you, and Bill hasn't done that. I don't think PDR tells you anything very useful. I haven't looked into Dustin Abbott's but I don't see why you'd want to include colour in the lower bound, the deep shadows are usually desaturated, just because of the nature of black.

@DonaldB has written:

but in all fairness if Bill uses the same test for all cameras then you're comparing them based on the same objectives, irrelevant if the numbers are not accurate.

I think Bill's measurements measure 'PDR' pretty accurately. His method has quite a few advantages, like giving multiple data points from multiple cameras. The real problem is whether or not 'PDR' is something you want to measure.
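To make the 'high lower bound versus low resolution' trade-off concrete, here is a minimal Python sketch. It is not Bill's actual procedure, just a toy model: each pixel has shot noise plus a fixed read noise, and resampling to fewer pixels improves SNR by the square root of the number of pixels combined. The sensor figures and the SNR threshold of 20 are assumptions chosen for illustration; only the 2:1 figure and the roughly 1MP reference come from the discussion above.

```python
import math


def signal_at_snr(target_snr, read_noise_e):
    """Signal in electrons at which S / sqrt(S + read_noise^2) equals target_snr."""
    k2 = target_snr ** 2
    # Positive root of S^2 - k^2*S - k^2*r^2 = 0.
    return (k2 + math.sqrt(k2 * k2 + 4.0 * k2 * read_noise_e ** 2)) / 2.0


def dr_stops(full_well_e, read_noise_e, target_snr, sensor_mp, reference_mp):
    """Stops between saturation and the signal that reaches target_snr
    once the image is resampled to reference_mp."""
    pixels_per_output = sensor_mp / reference_mp
    # Combining n pixels improves SNR by sqrt(n), so each pixel on its own
    # only needs to reach target_snr / sqrt(n).
    per_pixel_target = target_snr / math.sqrt(pixels_per_output)
    floor_e = signal_at_snr(per_pixel_target, read_noise_e)
    return math.log2(full_well_e / floor_e)


# Hypothetical sensor, not a measurement of any real camera.
FULL_WELL_E, READ_NOISE_E, SENSOR_MP = 50_000.0, 3.0, 24.0

strict_full_res = dr_stops(FULL_WELL_E, READ_NOISE_E, 20.0, SENSOR_MP, SENSOR_MP)
strict_1mp = dr_stops(FULL_WELL_E, READ_NOISE_E, 20.0, SENSOR_MP, 1.0)
relaxed_full_res = dr_stops(FULL_WELL_E, READ_NOISE_E, 2.0, SENSOR_MP, SENSOR_MP)

print(f"SNR 20 floor, full resolution: {strict_full_res:.2f} stops")
print(f"SNR 20 floor, 1MP reference:   {strict_1mp:.2f} stops")
print(f"SNR 2 floor, full resolution:  {relaxed_full_res:.2f} stops")
```

In this toy model a strict floor at full resolution gives the lowest figure, while the same strict floor at a 1MP reference lands much closer to the relaxed 2:1 floor at full resolution. The two choices pull in opposite directions, which is the cancellation described above.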

-

Is there anybody who presents meaningful data, that is easy to interpret, concerning how much dynamic range our cameras have?

-

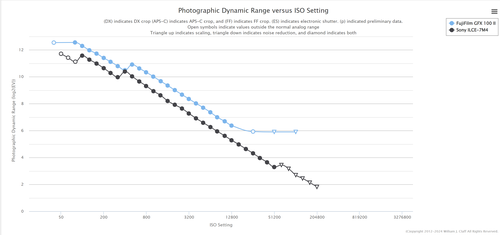

the problem with bill's tests is they are actually wrong in some cases. if you download the raws from dustin abbott's site of the gfx 100 and the a7iv, the a7iv clearly has the highest DR performance, and it should, the pixels are larger. yet bill's tests show the MF has 1 stop higher, which is false.

-

@NCV has written:

Is there anybody who presents meaningful data, that is easy to interpret, concerning how much dynamic range our cameras have?

9 stops i get shooting a piece of paper. 😁 have no idea where 15 comes from 🤨

-

@DonaldB has written:

9 stops i get shooting a piece of paper. 😁 have no idea where 15 comes from 🤨

Probably because the reflectance range of the paper white to the darkest patch of ink (toner? photographic silver?) is no more than that. The best Kodak reflectance gray scale strips I have measured were about 10 stops. A greater range requires trans-illuminated materials and methods.

**Edit:** Oops, sorry. Memory is a terrible thing. The Kodak standard has a maximum usable density range of 1.9 OD (Optical Density). OD is a logarithmic scale, so 0.3 OD is equivalent to halving or doubling of the light value. That gives the Kodak reflectance scale about a 6-1/3 stop range (1.9/0.3). Measuring a 9 stop range off a piece of paper sounds excessive.
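The arithmetic can be checked with a minimal Python sketch (function names are just for illustration). It uses the exact value log10(2) ≈ 0.301 per stop rather than the rounded 0.3, so the result comes out slightly under 6-1/3.

```python
import math

OD_PER_STOP = math.log10(2)  # one stop is a factor of 2, about 0.301 OD


def od_to_stops(optical_density):
    """Convert an optical density range to photographic stops."""
    return optical_density / OD_PER_STOP


def stops_to_od(stops):
    """Convert a range in stops to the optical density it would require."""
    return stops * OD_PER_STOP


print(f"1.9 OD is about {od_to_stops(1.9):.2f} stops")
print(f"9 stops would need about {stops_to_od(9):.2f} OD")
```

Run the other way, a 9 stop range would need a density range of roughly 2.7 OD, well beyond the 1.9 OD of the reflectance scale.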

The natural environment contains a potential range of optical densities far in excess of 15 stops.

Rich