The 90D is embarrassing compared to the D30; I just put the test images side by side. The market is about selling cameras: more is better, so they keep telling us. I'm not going to be brainwashed.

Edit: you're right, the D90 is better than the 6D

-

-

@DonaldB has written:@DanHasLeftForum has written:@DonaldB has written:

Totally irrelevant to the comparison I have always been chasing. It proves my point that larger pixels produce smoother and more defined colour graduations than smaller pixels, regardless of sensor size.

It doesn't prove anything, because the pixel size on the sensor is certainly not the main contributor, if it contributes at all: the quality of the color graduations from my 90D is noticeably better than from my 600D (which has larger pixels than the 90D).

The 90D is embarrassing compared to the D30; I just put the test images side by side. The market is about selling cameras: more is better, so they keep telling us. I'm not going to be brainwashed.

Edit: you're right, the D90 is better than the 6D

You have your cameras mixed up.

I am talking about two Canon cameras, the 90D and the 600D.

In any case, larger sensor pixels are not the main reason for better color graduations in my experience, as described earlier.

-

No one willing to play anymore? 😎 Or did everyone find the golden egg from the D30? 😛

-

@DonaldB has written:

No one willing to play anymore? 😎 Or did everyone find the golden egg from the D30? 😛

The gold-painted plastic egg is that in areas with the same color and intensity over a significant contiguous area, the low pixel density results in less depth modulation from noise in the raw capture. IF you wanted your capture displayed at exactly 100%, then the large pixels are doing some work for you, as you need no further math to get to the displayed size you want. The problem is that if you want to show the image at a display size that needs 72%, 137% or 300%, you now have problems: the pixel-level sharpness the large pixels gave you is aliased and fragile, and it does not resample well to 72% or 137%, because you need a method that either softens or distorts fine detail; at 300%, there will be pixelation. You could use AI upsizing, but if you're going to use AI, you could instead do AI noise reduction from a higher pixel density, which will give more realistic results than AI upsampling from an aliased source image.

The bigger pixels are more wrong about what is actually in the scene than smaller pixels, even if they create a look that you like. Just because it looks smooth does not mean it is correct. "Correct" is actually rough, like the simulation I made in another thread (which you ignored) of what an analog capture of a fine white line against a black background would look like. There is no reason to bake any desired smoothing into the raw capture, other than the side effect of smaller files or a potentially faster rolling shutter with lower pixel density (but the D30 never uses e-shutter alone). There are multiple ways to do that presentation-level smoothing with higher pixel densities, and those higher densities allow greater flexibility in resampling at arbitrary ratios, with minimal resampling artifacts.

So, a low pixel density may be just perfect for a specific displayed size that could not show any more detail, anyway, and if you show it at 100% it may look great to you, but that is only great if that is exactly the size you want to show it at. Even shrinking it to 72% would introduce artifacts in areas with sharp edges.
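The 72% case can be illustrated with a toy 1D sketch (my own illustration, not something posted in the thread). It takes a "pixel-sharp" pattern of one-pixel white lines and resamples it to 72% with two generic methods, nearest-neighbour and box (area-average) filtering; the function names are hypothetical and neither method is claimed to be what Photoshop or any raw converter actually uses.

```python
import numpy as np


def nearest_resample(signal, scale):
    """Nearest-neighbour resampling of a 1D signal by `scale` (e.g. 0.72)."""
    n_out = int(round(len(signal) * scale))
    # Map the centre of each output pixel back to an input index.
    src = np.floor((np.arange(n_out) + 0.5) / scale).astype(int)
    return signal[np.clip(src, 0, len(signal) - 1)]


def area_resample(signal, scale):
    """Box-filter (area-average) resampling: each output pixel is the
    overlap-weighted mean of the input pixels it covers."""
    n_in = len(signal)
    n_out = int(round(n_in * scale))
    width = 1.0 / scale  # input pixels covered by one output pixel
    out = np.zeros(n_out)
    for i in range(n_out):
        lo, hi = i * width, (i + 1) * width
        for j in range(int(np.floor(lo)), min(int(np.ceil(hi)), n_in)):
            overlap = min(hi, j + 1) - max(lo, j)
            if overlap > 0:
                out[i] += signal[j] * overlap
        out[i] /= width
    return out


if __name__ == "__main__":
    # A "pixel-sharp" test pattern: one-pixel white lines every 4 pixels.
    lines = np.zeros(100)
    lines[::4] = 1.0
    nn = nearest_resample(lines, 0.72)
    box = area_resample(lines, 0.72)
    print("lines in:", int(lines.sum()), "lines kept by nearest:", int(nn.sum()))
    print("brightest box-filtered line:", round(float(box.max()), 3))
```

Nearest-neighbour keeps full contrast but silently drops some lines and makes the spacing uneven (distortion), while the box filter keeps every line but turns them into grey values of varying brightness (softening), which is the trade-off described in the post.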

-

@JohnSheehyRev has written:@DonaldB has written:

No one willing to play anymore? 😎 Or did everyone find the golden egg from the D30? 😛

The gold-painted plastic egg is that in areas with the same color and intensity over a significant contiguous area, the low pixel density results in less depth modulation from noise in the raw capture. IF you wanted your capture displayed at exactly 100%, then the large pixels are doing some work for you, as you need no further math to get to the displayed size you want. The problem is that if you want to show the image at a display size that needs 72%, 137% or 300%, you now have problems: the pixel-level sharpness the large pixels gave you is aliased and fragile, and it does not resample well to 72% or 137%, because you need a method that either softens or distorts fine detail; at 300%, there will be pixelation. You could use AI upsizing, but if you're going to use AI, you could instead do AI noise reduction from a higher pixel density, which will give more realistic results than AI upsampling from an aliased source image.

The bigger pixels are more wrong about what is actually in the scene than smaller pixels, even if they create a look that you like. Just because it looks smooth does not mean it is correct. "Correct" is actually rough, like the simulation I made in another thread (which you ignored) of what an analog capture of a fine white line against a black background would look like. There is no reason to bake any desired smoothing into the raw capture, other than the side effect of smaller files or a potentially faster rolling shutter with lower pixel density (but the D30 never uses e-shutter alone). There are multiple ways to do that presentation-level smoothing with higher pixel densities, and those higher densities allow greater flexibility in resampling at arbitrary ratios, with minimal resampling artifacts.

So, a low pixel density may be just perfect for a specific displayed size that could not show any more detail, anyway, and if you show it at 100% it may look great to you, but that is only great if that is exactly the size you want to show it at. Even shrinking it to 72% would introduce artifacts in areas with sharp edges.

Everything is a compromise, but even images from my old 6MP K100D looked great. What today brings is better AF, at least on some cameras; others are still back in the Stone Age.

What was exposed by this thread is that bigger pixels record more accurate voltage steps, period, which I've been saying for years.

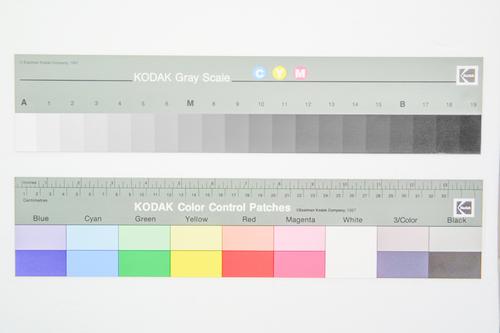

Here are two images, the latest MF and the D30, both pushed to display the separation of tones.

What amazed me was that, using the colour picker in Ps, some modern cameras couldn't even separate the tones 🤨 In fact, the left sample was darker than the right 🤔

-

@DonaldB has written:

No one willing to play anymore? 😎 Or did everyone find the golden egg from the D30? 😛

Anyone interested can do their own testing and come to their own conclusions like you did.

My experience with different-sized pixels on sensors tells me that the size of the sensor pixels is not the main driver of the quality of color graduations, as shown by my 90D and 600D, with the 90D having noticeably better color graduations than the 600D.

-

@DanHasLeftForum has written:@DonaldB has written:

No one willing to play anymore? 😎 Or did everyone find the golden egg from the D30? 😛

Anyone interested can do their own testing and come to their own conclusions like you did.

My experience with different-sized pixels on sensors tells me that the size of the sensor pixels is not the main driver of the quality of color graduations, as shown by my 90D and 600D, with the 90D having noticeably better color graduations than the 600D.

The dodgy thing about this finding is that if you go to the Pentax K100D 6MP APS-C review test images, it's absolutely pathetic. Camera manufacturers have deliberately held back the tech purely to drive sales each year, with little increments in image quality at a time. But they hit a brick wall with cameras like the Z8 and the A7 IV, probably the R5 as well. You see, offbeat threads by morons 😆 always bring new life to discussions. It's called "open thinking".

-

People can do their own testing and come to their own conclusions.

-

-

@DonaldB has written:

I urge anyone to download a D30 image, push the shadows and pull back the highlights with a JPEG, and then upscale 3x; the files blow our raws away 😃

I've never owned a Canon DSLR, but I want one of these. Insane image quality.

-

-

@AlanSh has written:

...Not sure what a D30 is.

Alan

-

@DanHasLeftForum has written:

People can do their own testing and come to their own conclusions.

They can, but the chances of any random individual doing tests that are as equitable and meaningful as possible are low. The two Kodak test images Don posted say nothing about anything, other than the fact that these cameras had lenses and sensors. There is no mention of how these images were exposed, how and why they came to exist at the size they are shown, etc. It looks like he normalized the size of the Kodak test strips, cropped from other people's images, as if that were any kind of useful comparison.

His original assertion that I replied to was that the D30 at 3.1MP had better "gradation" than the 90D/R7/M6-II 32.5MP Canons of the same sensor size. That is true only in photon-limited tonal ranges and ISOs, and even there the camera is only doing something with its big pixels that the newer 32.5MP sensor can do better and with more flexibility. He seems to be focused on the fact that the Z-axis depth of variation between one pixel value and its neighbor, at the original pixel resolution, is lower on the 3.1MP sensor. That is absolutely true, but also mostly irrelevant, because you can filter the outliers away in the 32.5MP version and it will still have far more detail and far fewer artifacts than the 3.1MP version of the same shot. The 90D sensor has about double the quantum efficiency of the D30 sensor, and it has STOPS less visible read noise at a normalized image size, both at higher ISOs and in the shadows at base ISO.

"Make the reality of discrete Poisson statistics go away from my eyes and I will crown you the better sensor" seems to be his game (although he wouldn't word it like that) and he only accepts it "OOC", but "OOC" through large pixels discards and distorts optical information, and is an inferior starting point for a large range of resampling needs. Those large pixels protect his eyes from outlier photon variations, but they also cause severe color aliasing, and modest luminance aliasing, both of which blow up further with arbitrary resampling.
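The per-pixel "smoothness" argued about here is largely Poisson shot noise, and a small simulation can show where it comes from. This is a minimal sketch under loudly stated assumptions (hypothetical electron counts, identical quantum efficiency, no read noise, a perfectly flat grey patch), not a model of any real sensor: it compares small pixels, pixels with 9x the area, and the small pixels after summing 3x3 blocks in software.

```python
import numpy as np


def bin_sum(img, k):
    """Software binning: sum non-overlapping k x k blocks (dims must divide by k)."""
    h, w = img.shape
    return img.reshape(h // k, k, w // k, k).sum(axis=(1, 3))


def snr(x):
    """Mean over standard deviation of a flat patch."""
    return x.mean() / x.std()


def simulate(mean_small=100, k=3, side=300, seed=0):
    """Shot-noise-only flat field: small pixels vs pixels with k*k times the area.

    Hypothetical numbers; assumes equal QE and no read noise, so each pixel's
    electron count is Poisson with a mean proportional to its area.
    """
    rng = np.random.default_rng(seed)
    small = rng.poisson(mean_small, size=(side, side)).astype(float)
    big = rng.poisson(mean_small * k * k, size=(side // k, side // k)).astype(float)
    binned = bin_sum(small, k)
    return snr(small), snr(big), snr(binned)


if __name__ == "__main__":
    s, b, bb = simulate()
    # Theory for pure shot noise: SNR = sqrt(mean electrons),
    # roughly 10 for 100 e- and roughly 30 for 900 e-.
    print(f"small {s:.1f}, big {b:.1f}, 3x3-binned small {bb:.1f}")
```

Because a sum of nine Poisson(100) counts is itself Poisson(900), the binned small pixels match the big pixels' SNR in this idealised case. Modelling the roughly doubled quantum efficiency mentioned above would double every electron count for the same light and raise each SNR by about sqrt(2).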

-

I have already published several comparisons involving the Canon D30 here, in total indifference, as usual in this forum

Canon EOS D30 (2000) / Canon EOS-5D (2005) by Marc Aubry, on Flickr

Kodak DCS 520 (1998) / Canon EOS D30 (2000)

www.flickr.com/photos/maoby/albums/72157678330622048

Kodak/Canon duel

Kodak DCS 330 (1999) and Canon EOS D30 (2000)

www.flickr.com/photos/maoby/albums/72157716160342186

Duel 3MP (1999-2000)

Minolta RD-3000 (1999) / Canon EOS D30 (2000)

www.flickr.com/photos/maoby/albums/72157676052374971

Old-timers' duel

Nikon D1 (1999-2000) / Canon EOS D30 (2000)

www.flickr.com/photos/maoby/albums/72157676643340036

(Year One) The first Nikon/Digital Canon

Canon EOS D30 (2000) / FinePix S1 Pro (2000)

www.flickr.com/photos/maoby/albums/72157666938813693

Duel 2000

Canon EOS D30 (2000) / Canon EOS D60 (2002)

www.flickr.com/photos/maoby/albums/72157674364549453

Duel Canon

Canon EOS D30 (2000) / Canon EOS 1D (2001)

www.flickr.com/photos/maoby/albums/72157667731061793

Duel Canon

Canon EOS D30 (2000) / Canon EOS-5D (2005)

www.flickr.com/photos/maoby/albums/72157718336190221

The big firsts

-

@DonaldB has written:@DonaldB has written:

I urge anyone to download a D30 image, push the shadows and pull back the highlights with a JPEG, and then upscale 3x; the files blow our raws away 😃

I've never owned a Canon DSLR, but I want one of these. Insane image quality.

Do you mean smooth pixel quality? Do you seriously believe that if the same image were taken with a 90D, and both were scaled to fit the height of the same 4K display at about 7MP, the 90D would "be embarrassed"? Do you understand that converter defaults and Canon's DIGIC chips automatically sharpen 90D captures all the time, on the assumption that people want to see pixel-level detail, and that this is part of the reason (and the bad part) why you see deeper outlier values in 100% pixel views? If you need pixel-level sharpness OOC (or out-of-converter) with the 32.5MP, then you may need more sharpening, but any 32.5MP image downsampled to 7MP, or especially 1.75MP, should have ZERO sharpening applied at 32.5MP, and could take HEAVY NR and still have far more detail than a 3.1MP sensor version.
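The "about 7MP" and "1.75MP" figures follow from simple arithmetic. A minimal sketch, assuming output sizes of 2160 x 1440 for the D30 and 6960 x 4640 for the 90D and a 3840 x 2160 4K UHD display (these pixel dimensions are my assumptions, not stated in the thread); `fit_height` is a hypothetical helper name.

```python
# Assumed pixel dimensions (not stated in the thread):
# Canon EOS D30 = 2160 x 1440, Canon EOS 90D = 6960 x 4640,
# 4K UHD display = 3840 x 2160.
CAMERAS = {"D30": (2160, 1440), "90D": (6960, 4640)}


def fit_height(width, height, target_h):
    """Scale factor and output size needed to fit an image to target_h rows."""
    scale = target_h / height
    out_w = round(width * scale)
    megapixels = out_w * target_h / 1e6
    return scale, out_w, target_h, megapixels


if __name__ == "__main__":
    for target_h in (2160, 1080):  # full 4K height, then half of it
        for name, (w, h) in CAMERAS.items():
            scale, ow, oh, mp = fit_height(w, h, target_h)
            print(f"{name} -> {target_h} rows: scale {scale:.3f}, "
                  f"{ow} x {oh} = {mp:.2f} MP")
```

Under these assumptions both cameras end up at 3240 x 2160 (about 7MP) on the 4K display, but the D30 file gets there by a non-integer 1.5x enlargement while the 90D file is reduced to roughly 47% of its linear size; halving the height gives 1620 x 1080, about 1.75MP.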

If someone stuck a Canon 32.5MP sensor into the body of a D30, the firmware ran a quick-and-dirty median filter on the data and binned the conversion 3x3 to spoof a 3.61MP JPEG-only Foveon, and a "mark II" was added under the "D30" on the front of the body, I guess you would consider this the crown jewel of APS-C cameras, even though it has only DISCARDED information.
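The hypothetical "D30 mark II" pipeline can be sketched in a few lines (32.5MP / 9 ≈ 3.61MP, which is where the figure comes from). This is an illustration only, assuming a single already-converted channel plane rather than Bayer raw data; `median3x3`, `bin3x3` and `spoof_low_res` are made-up names, and `sliding_window_view` needs NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median3x3(img):
    """Quick-and-dirty 3x3 median filter, replicating edge pixels."""
    padded = np.pad(img, 1, mode="edge")
    windows = sliding_window_view(padded, (3, 3))  # shape (H, W, 3, 3)
    return np.median(windows, axis=(-2, -1))


def bin3x3(img):
    """Average non-overlapping 3x3 blocks, cropping any leftover rows/columns."""
    h, w = (img.shape[0] // 3) * 3, (img.shape[1] // 3) * 3
    return img[:h, :w].reshape(h // 3, 3, w // 3, 3).mean(axis=(1, 3))


def spoof_low_res(img):
    """Hypothetical 'D30 mark II' pipeline: median filter, then 3x3 binning."""
    return bin3x3(median3x3(img))


if __name__ == "__main__":
    plane = np.zeros((6, 6))
    plane[2, 2] = 100.0  # an isolated outlier ("hot" pixel)
    # The outlier is removed, and the 6x6 grid becomes a 2x2 grid.
    print(spoof_low_res(plane))
```

Every step here only removes information: the median filter discards outliers (along with any genuine one-pixel detail), and the binning throws away eight of every nine sample positions.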

-

@Maoby has written:

involving the Canon D30 here, in total indifference, as usual in this forum

Not total - I look at them - I just don't comment very often.

-

@DonaldB has written:@JohnSheehyRev has written:@DonaldB has written:@JohnSheehyRev has written:@DonaldB has written:

That BIGGER pixels rule 😁

www.dpreview.com/news/3829200267/world-s-largest-camera-3-1-gigapixels-for-epic-timelapse-panos-of-the-universe

My Canon D30 has about 20% bigger pixels, so it should do even better, unless pupil size combined with sensor size is what is going to get the quality.

20% bigger? 🤔 I'm not sure how 10 vs 10.5 is 20%.

Download a D30 3MP image, push the shadows, and watch it blow the latest Panasonic G9 II away.

With the same area times exposure, per square inch of monitor real estate, or at totally irrelevant 100% pixel views, with the G9-II gratuitously oversharpened for the purpose of comparison to larger pixels?

Totally irrelevant to the comparison I have always been chasing. It proves my point that larger pixels produce smoother and more defined colour graduations than smaller pixels, regardless of sensor size.

Does it bother you that math, physics and the person who invented CMOS sensors all disagree with you?
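How much "bigger" a pixel is depends on whether you compare pitch (linear size) or area. A minimal sketch of the arithmetic, using the 10 µm and 10.5 µm figures from the exchange above and, as my own assumption, a D30 sensor width of 22.7 mm across 2160 pixels; the helper names are hypothetical.

```python
def pixel_pitch_um(sensor_width_mm, pixels_across):
    """Pixel pitch in micrometres from sensor width and horizontal pixel count."""
    return sensor_width_mm * 1000.0 / pixels_across


def percent_bigger(pitch_a_um, pitch_b_um):
    """How much bigger pixel A is than pixel B, by pitch (linear) and by area."""
    ratio = pitch_a_um / pitch_b_um
    return (ratio - 1) * 100, (ratio ** 2 - 1) * 100


if __name__ == "__main__":
    # Assumed D30 figures (not from the thread): 22.7 mm wide, 2160 pixels across.
    print(f"D30 pitch ~ {pixel_pitch_um(22.7, 2160):.2f} um")
    linear, area = percent_bigger(10.5, 10.0)  # the 10.5 vs 10 um figures
    print(f"10.5 vs 10 um: {linear:.1f}% bigger by pitch, {area:.1f}% by area")
```

On those two figures the difference works out to about 5% by pitch and about 10% by area, since area scales with the square of the pitch ratio.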

-