You can also make 16 shots with an intervalometer and average them in Photoshop.
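As a rough illustration of why averaging helps, here is a minimal sketch that simulates 16 frames of a flat grey patch with random noise and averages them. The signal level, noise level, and pixel count are made-up values, and the noise is assumed to be independent from frame to frame (fixed pattern noise would not average away like this).

```python
import random
import statistics

def simulate_frame(signal, noise_sigma, n_pixels, rng):
    """One frame of a uniform patch with independent Gaussian noise per pixel."""
    return [signal + rng.gauss(0.0, noise_sigma) for _ in range(n_pixels)]

def average_frames(frames):
    """Pixel-wise mean across frames (what a mean stack does per pixel)."""
    n = len(frames)
    return [sum(values) / n for values in zip(*frames)]

rng = random.Random(0)  # fixed seed so the run is repeatable
# Hypothetical numbers: signal 100, noise sigma 8, 10,000 pixels, 16 frames.
frames = [simulate_frame(100.0, 8.0, 10_000, rng) for _ in range(16)]

single_noise = statistics.pstdev(frames[0])
stacked_noise = statistics.pstdev(average_frames(frames))
noise_ratio = single_noise / stacked_noise

print(f"single frame noise: {single_noise:.2f}")
print(f"16-frame average noise: {stacked_noise:.2f}")
print(f"improvement: {noise_ratio:.2f}x (theory: sqrt(16) = 4x)")
```

Under these assumptions the averaged patch should come out roughly four times cleaner than a single frame, which is the same benefit as collecting 16 times the light in one shot.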

-

The real reason for less noise is the larger sensor area (assuming the same exposure).

-

@DonaldB has written:

I also remember when I compared a straight-out-of-camera shot from my a7r2 and an 8-shot hi-res 50 meg image from my em1mk2; again the a7r2 won easily.

So you're saying that with about twice the total light, the m43-derived image was still noisier? I am not sold on the idea that you knew what you were looking at, unless there was some large-scale fixed pattern noise in the m43 that did not cancel with the pixel shifts, or the m43 50MP image was gratuitously oversharpened at the pixel level.

-

@nzmacro has written:@SrMi has written:@nzmacro has written:@SrMi has written:@nzmacro has written:

For less than the cost of popcorn you could always just go

www.photopills.com/articles/exposure-photography-guide

And be done with it near enough.

Danny.

That article contains nonsense. It says that ISO represents sensor sensitivity and explains how exposure triangle works.

Well you could probably get in touch with them and let them know.

Why did you recommend it?

I can recommend a lot more if you like. Problem is we would go back and forth like the rest of this thread and that's not going to happen. If you want to correct them, contact them and let them know. It's very easy to do.

I recommend the DPR Studio Comparison Tool because it shows not just stats, but stats in visible action, including differences in read noise character.

Put any 20MP m43 up against any 20MP FF at 4x the ISO, and the m43 holds its own, especially the new Olympii. Put the Canon GX7-III (2.7x crop) against 20MP FF sensors at 8x the ISO, and the 1" sensor competes well even with the Nikon D5:

The granularity of ISOs, pixel sizes, and sensor sizes is too coarse for us to simulate the "same total light" in the 4 windows in the tool for all possible camera comparisons, but we do have the same ratios available in some cases, and when you compare same total light for the 4 windows, the visible noise is virtually independent of sensor size or pixel size, with the same amount of light. The pixel area ratios for the Canon R6, R5, and R7 are almost exactly 4:2:1, so if we use those numbers to scale the ISOs to receive the same total light per pixel, and throw in an older 20MP FF (6D), we get:

-

@DonaldB has written:

Did you ever see my 1/4-sensor 10 meg a7r2 studio test shots against my em1mk2 20 meg images? That theory was totally a myth in detail and noise. The 1/4-sensor shot had a slight advantage on both counts.

Based on your history, and what I could expect as a range of possibilities, I would bet that you forgot to normalize something.

I just took a look at the two cameras in the DPR studio comparison tool, and the Olympus gives much less visible read noise at high ISO with the same total light.

-

@JohnSheehyRev has written:@DonaldB has written:

Did you ever see my 1/4-sensor 10 meg a7r2 studio test shots against my em1mk2 20 meg images? That theory was totally a myth in detail and noise. The 1/4-sensor shot had a slight advantage on both counts.

Based on your history, and what I could expect as a range of possibilities, I would bet that you forgot to normalize something.

I just took a look at the two cameras in the DPR studio comparison tool, and the Olympus gives much less visible read noise at high ISO with the same total light.

It's not rocket science to take a quarter-crop image of a detailed toy doll with FF; there's no normalising anything.

Shot at base ISO.

-

@JohnMoyer has written:

This is assuming the same technology level. For the same number of pixels, the coarse pitch does better in low light.

Both coarse and fine pitches have advantages. A coarse pitch shows less noise per pixel at the same low illumination level. A fine pitch shows more noise per pixel and also more detail. A fine pitch on a smaller sensor costs less than a coarse pitch on a larger sensor, and the larger sensor might require a more costly and heavier lens.

Now you're talking about different size sensors. The pixel pitch is not coupled to the sensor size.

-

@DonaldB has written:@JohnSheehyRev has written:@DonaldB has written:

I also remember when I compared a straight-out-of-camera shot from my a7r2 and an 8-shot hi-res 50 meg image from my em1mk2; again the a7r2 won easily.

So you're saying that with about twice the total light, the m43-derived image was still noisier? I am not sold on the idea that you knew what you were looking at, unless there was some large-scale fixed pattern noise in the m43 that did not cancel with the pixel shifts, or the m43 50MP image was gratuitously oversharpened at the pixel level.

In this example, the m43 image (EM...) received half the light of the FF image (DSC...).

-

@JimKasson has written:@bobn2 has written:

By and large smaller pixels have a higher voltage because they tend to have higher conversion gain.

They have higher voltage per unit charge for that reason, but I don't know of a reason why they should have higher saturation voltage, all else equal.

In a CMOS sensor the saturation is determined by the SF output swing, that in turn is determined by the supply voltage of the output circuitry. The CG is determined by floating diffusion capacitance, and will be chosen to give the design voltage swing at the highest design exposure ('base ISO') - which in the end makes it all linked - the saturation voltage in the pixel itself will be inversely proportional to the CG. Still, Don was talking about small-signal performance, as I understood it - and small pixel/high conversion gain has an 'advantage' in the voltage domain so far as that goes. I think it's the kind of 'advantage' that is purely theoretical.

-

@bobn2 has written:Quoted message:

This article contains quite a few major errors, unfortunately. Let's start with the first, try to settle that, then move on. At the start you say:

What's exposure? (1)

It's the amount of light that reaches a photosensitive material (i.e. the film or your camera's sensor) to create an image.

...

What affects exposure? (3)

Three variables affect exposure: aperture, shutter speed and sensitivity (or ISO). That's what called the exposure triangle.

(1) is nearly true (it's actually the amount of light per unit area, or 'density of the light', but that's more of a quibble), but if (1) is true then (3) cannot be, because ISO has no effect on the amount of light reaching the photosensitive material. Aperture and shutter speed do, as does scene luminance. So, wrong from the start. Sorry.

It's awaiting approval, let's see if they do.

Rejected. Colour me unsurprised. These purveyors of nonsense rarely enjoy having their pearls of wisdom questioned.
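To make the point concrete, here is a minimal sketch of the usual approximation for focal-plane exposure, H = q·L·t/N². The function name is made up for illustration, and the factor q = 0.65 is an assumed typical value for lens transmission and vignetting, not a measured one. Note that there is simply no ISO parameter to pass in.

```python
def focal_plane_exposure(scene_luminance, f_number, shutter_time_s, q=0.65):
    """Approximate exposure at the sensor in lux-seconds.

    scene_luminance: cd/m^2
    f_number:        e.g. 2.8
    shutter_time_s:  seconds
    q:               assumed lens/vignetting factor (hypothetical default)
    """
    return q * scene_luminance * shutter_time_s / f_number ** 2

base = focal_plane_exposure(1000.0, 4.0, 1 / 100)
longer_shutter = focal_plane_exposure(1000.0, 4.0, 1 / 50)   # one stop more time
wider_aperture = focal_plane_exposure(1000.0, 2.0, 1 / 100)  # two stops more aperture
brighter_scene = focal_plane_exposure(2000.0, 4.0, 1 / 100)  # one stop more light

print(base, longer_shutter, wider_aperture, brighter_scene)
```

Changing the ISO setting alters how the captured signal is processed and how the camera meters, but in this model it cannot change the returned value unless it causes one of the three inputs to change.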

-

@bobn2 has written:@bobn2 has written:Quoted message:

This article contains quite a few major errors, unfortunately. Let's start with the first, try to settle that, then move on. At the start you say:

What's exposure? (1)

It's the amount of light that reaches a photosensitive material (i.e. the film or your camera's sensor) to create an image.

...

What affects exposure? (3)

Three variables affect exposure: aperture, shutter speed and sensitivity (or ISO). That's what called the exposure triangle.

(1) is nearly true (it's actually the amount of light per unit area, or 'density of the light', but that's more of a quibble), but if (1) is true then (3) cannot be, because ISO has no effect on the amount of light reaching the photosensitive material. Aperture and shutter speed do, as does scene luminance. So, wrong from the start. Sorry.

It's awaiting approval, let's see if they do.

Rejected. Colour me unsurprised. These purveyors of nonsense rarely enjoy having their pearls of wisdom questioned.

As seen in this forum, the less people know, the more confident they are that they know everything.

-

@SrMi has written:@bobn2 has written:@bobn2 has written:Quoted message:

This article contains quite a few major errors, unfortunately. Let's start with the first, try to settle that, then move on. At the start you say:

What's exposure? (1)

It's the amount of light that reaches a photosensitive material (i.e. the film or your camera's sensor) to create an image.

...

What affects exposure? (3)

Three variables affect exposure: aperture, shutter speed and sensitivity (or ISO). That's what called the exposure triangle.

(1) is nearly true (it's actually the amount of light per unit area, or 'density of the light', but that's more of a quibble), but if (1) is true then (3) cannot be, because ISO has no effect on the amount of light reaching the photosensitive material. Aperture and shutter speed do, as does scene luminance. So, wrong from the start. Sorry.

It's awaiting approval, let's see if they do.

Rejected. Colour me unsurprised. These purveyors of nonsense rarely enjoy having their pearls of wisdom questioned.

As seen in this forum, the less people know, the more confident they are that they know everything.

What gets me is that there is a very obvious logical fallacy right there at the beginning - yet they wrote it and people seem to read it uncritically.

This bit, at the end, seems particularly relevant to this thread:

Quoted message:

How do you know if the dynamic range of the scene exceeds that of the camera?

I guess after this section you'll be asking yourself:"And how can I know if the dynamic range of the scene "fits" in my camera?"

It's super easy, just check out the histogram. :P

Edit: Just added another comment - posting it here so I don't lose it when he rejects it.

Quoted message:

You seem to have rejected my previous comment. If this is just your SW taking its time, then forgive me for jumping to assumptions. However, as I pointed out in that comment, you have an obvious logical fallacy right there in the opening section. Exposure cannot both have the definition that you give and be affected by ISO.

I know you try to square this triangle later when you say 'Therefore, when you read on the internet that "the higher the ISO the more light the sensor captures", it's not referring to the amount of light it captures, but to the amplification of the signal from the light itself. Therefore, when the signal is amplified too much (high ISOs), noise appears in the photo.' But actually you are simply amplifying the error, pointing out that ISO does not affect the amount of light. (The definition of ISO is erroneous also, by the way).

I think it's important that information sites such as yours do not spread disinformation. The result of your site and many others doing similar is that a generation of photographers does not know what exposure is or how it works. I'm very happy to discuss in detail so you can improve your site, but you have to be willing to discuss at all, in the first place.

-

@bobn2 has written:

Don was talking about small-signal performance,

Graduation accuracy of the voltage determines the accurate graduation of colour.

-

@SrMi has written:@DonaldB has written:@JohnSheehyRev has written:@DonaldB has written:

I also remember when I compared a straight-out-of-camera shot from my a7r2 and an 8-shot hi-res 50 meg image from my em1mk2; again the a7r2 won easily.

So you're saying that with about twice the total light, the m43-derived image was still noisier? I am not sold on the idea that you knew what you were looking at, unless there was some large-scale fixed pattern noise in the m43 that did not cancel with the pixel shifts, or the m43 50MP image was gratuitously oversharpened at the pixel level.

In this example, the m43 image (EM...) received half the light of the FF image (DSC...).

I think he is referring to the 8 images used to make the 50 meg file, which is 2x the light.
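The "2x the light" figure can be checked with simple arithmetic. This is a minimal sketch assuming every frame gets the same exposure (same scene, f-number, and shutter time), which may not match the actual shots discussed above; the sensor dimensions are the nominal 36 x 24 mm and 17.3 x 13 mm, and the helper name is made up for illustration.

```python
FF_AREA_MM2 = 36.0 * 24.0    # nominal full-frame sensor area
M43_AREA_MM2 = 17.3 * 13.0   # nominal Four Thirds sensor area

def relative_total_light(sensor_area_mm2, frames, exposure=1.0):
    """Total light collected, in arbitrary units: exposure x area x frame count."""
    return exposure * sensor_area_mm2 * frames

m43_hi_res = relative_total_light(M43_AREA_MM2, frames=8)
ff_single = relative_total_light(FF_AREA_MM2, frames=1)
ratio = m43_hi_res / ff_single

print(f"m43 8-shot / FF single-shot total light: {ratio:.2f}x")
```

If the per-frame exposures differ, as in the example where the m43 image received half the light, pass a different `exposure` value for each camera and the ratio changes accordingly.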

-

@DonaldB has written:@bobn2 has written:

Don was talking about small-signal performance,

Accuracy in the measurement of the voltage determines the accuracy of colour.

So yes, small signal performance. But what you said is not quite right on several levels, but let's take as a given that accuracy of the measurement determines accuracy of the colour. In that case what would be important is accuracy of measurement of the charge, not the voltage - the voltage is just an intermediary - its absolute value is unimportant. Plus, accuracy of its measurement does not depend on its magnitude. And smaller pixels can provide a more accurate measurement.

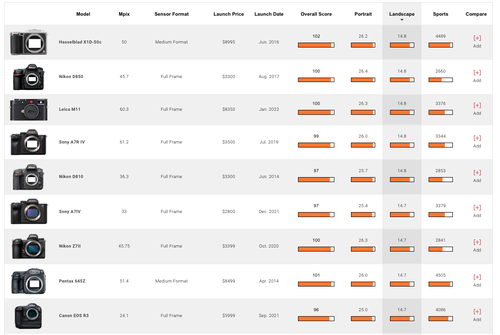

Suppose we have a pixel with a saturation capacity S and read noise r. The DR (i.e. how many separate values of that pixel output can be measured) is S/r. Now we make another sensor with pixels half the linear dimension, 1/4 the area. We're going to make these new pixels simply by taking the reticles for the old pixel and scaling to 1/2 the linear dimension. (This never happens in practice, where a completely new design would likely be adopted for that large a change in scale, but for the purposes of thinking about the intrinsics it's possibly a sensible simplification.)

The scaling means that the capacitance of the pixel is reduced by a factor of four, which in turn means 1/4 of the saturation level and four times the conversion gain, which controls the input-referred read noise. Now the saturation level is S/4 and the read noise is r/4. If we combine the signal from four of these pixels, the saturation is correlated, so it adds; the read noise is uncorrelated, so it adds in quadrature. So the combined saturation is 4S/4 = S, whilst the combined read noise is √(4(r/4)²) = 2(r/4) = r/2. The DR at the resolution of the original sensor is S/(r/2) = 2S/r. So by halving the linear size of the pixel we've doubled the DR.

Of course, as said, that's not in practice how sensors are designed, so we don't see the full advantage of reducing pixel size, but nonetheless the trend is there. If your thesis were correct, the highest-DR cameras would tend to be the low pixel count ones of a given sensor size, but results tend to show the opposite. For instance, ranking DxOMark's tests by DR we find:

Of course DR is affected by quite a few things as well as pure pixel performance, such as - but we certainly are not seeing a clustering of large pixel cameras at the top.

-

@bobn2 has written:

The DR (i.e. how many separate values of that pixel output can be measured) is S/r.

Bob,

Are you sure that is correct? Or have you simplified it? Something doesn't feel right to me.

Alan

-

@AlanSh has written:@bobn2 has written:

The DR (i.e. how many separate values of that pixel output can be measured) is S/r.

Bob,

Are you sure that is correct? Or have you simplified it? Something doesn't feel right to me.

Alan

It's a bit simplified, in that I have bundled a load of noises into 'read noise' - but it's essentially correct. DR is generally 'maximum signal/noise floor', and in this case 'noise floor' is dominated by read noise. Or maybe you're not sure about DR being how many separate values can be measured - that's essentially what DR tells you. The size of the noise floor tells you how big a range a 'distinct' value takes and the maximum divided by that tells you how many distinct values there are. It's a bit more complex in photography due to shot noise, which means that most of the 'distinct values' that would be available in a classic DR become indistinct.
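For anyone who wants to check the arithmetic, here is a minimal sketch of the simplified DR = S/r model and the pixel-scaling argument made earlier in the thread. The numbers for S and r are hypothetical, the helper names are made up, and the model assumes ideal scaling (saturation and read noise both drop to 1/4 for a pixel of 1/4 the area), which, as noted, real sensor designs do not follow exactly.

```python
import math

def dynamic_range(saturation, read_noise):
    """Simplified DR: maximum signal divided by the noise floor."""
    return saturation / read_noise

def combine_pixels(saturation, read_noise, n):
    """Sum n identical pixels: signal adds linearly, read noise in quadrature."""
    return saturation * n, read_noise * math.sqrt(n)

# Hypothetical large pixel, both values in electrons.
S, r = 60_000.0, 4.0

# Ideal scaling to half the linear size: 1/4 the saturation, 1/4 the read noise.
small_S, small_r = S / 4, r / 4

# Combine four small pixels back to the resolution of the large pixel.
combined_S, combined_r = combine_pixels(small_S, small_r, 4)

dr_large = dynamic_range(S, r)
dr_combined = dynamic_range(combined_S, combined_r)

print(f"large pixel DR:    {dr_large:.0f} ({math.log2(dr_large):.2f} stops)")
print(f"4 small pixels DR: {dr_combined:.0f} ({math.log2(dr_combined):.2f} stops)")
```

Under these assumptions the combined small pixels reach the same saturation S with read noise r/2, so the DR doubles, which is one stop.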