Sometimes there are no other options, because the manufacturer does not make the body you want, with all the other bells & whistles and within your price range, with fewer MP.

-

[quote="@davidwien"]

If you have a 40 Mp sensor, you need lenses that are good enough to take advantage of it. Otherwise why have it?

😀

David

Good point. What lenses are "good enough to take advantage of [40 MP]"?

I have a Panasonic m4/3 DC-G9, which can be set to 80 MP in pixel-shift mode. But the MTF curve for pixel-shift captures is pretty poor, and the popular MTF50 occurs at a low spatial frequency relative to Nyquist. That means I don't need an Otus 55mm for that camera, or for any of mine for that matter.

On the other hand, I can stick almost any old lens on the SD9 with its 9.12 um pixel spacing ... 😀

-

On axis at optimal aperture, most decent lenses will be able to lay down detail a 3 um sensor can’t properly resolve.
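For reference, the Nyquist frequencies implied by the pixel pitches mentioned in this thread can be worked out in a few lines. This is only a sketch: the function name is made up for illustration, and it assumes one sample per pixel pitch on a plain monochrome grid, ignoring the Bayer CFA and any AA filter.

```python
def nyquist_cycles_per_mm(pixel_pitch_um: float) -> float:
    """Nyquist frequency in cycles/mm for a grid sampled once per pixel pitch.

    Hypothetical helper for illustration; assumes a monochrome sensor.
    """
    pitch_mm = pixel_pitch_um / 1000.0   # micrometers -> millimeters
    sampling_frequency = 1.0 / pitch_mm  # samples per mm
    return sampling_frequency / 2.0      # Nyquist is half the sampling frequency


# Pitches mentioned in the thread: 3 um, 3.76 um and 9.12 um.
for pitch_um in (3.0, 3.76, 9.12):
    nyq = nyquist_cycles_per_mm(pitch_um)
    print(f"{pitch_um:5.2f} um pitch -> Nyquist of about {nyq:6.1f} cycles/mm")
```

A lens that still delivers meaningful contrast above these frequencies is laying down detail the sensor cannot sample without aliasing.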

-

LOL. With respect, that is a very vague statement! “On axis” says nothing about the majority of the area of a photo, “optimal aperture” is also rather restrictive, and “most decent lenses” needs definition: how many is “most”, and what is the criterion for “decent”?

I think it more appropriate to compare the results of using a given lens with 40MP and 20MP sensors of “agreed” quality. 😀

Another audio analogy, though inverted: what is the point of spending thousands of £/$/¥/€ on microphones, amplifiers, etc., if the result is to be played on a boom box? One expects to match the quality of a lens to the recording abilities of the camera: the glass bottoms of Coca Cola bottles are not appropriate as lenses for Alan's camera. 😀

Unfortunately, reviews seem not to make recommendations of suitable lens/camera combinations.

David

-

@JimKasson has written:

On axis at optimal aperture, most decent lenses will be able to lay down detail a 3 um sensor can’t properly resolve.

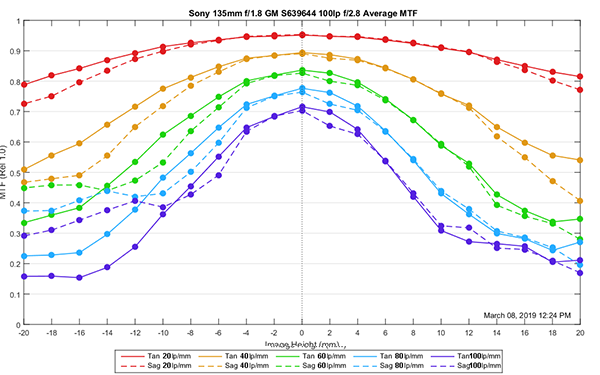

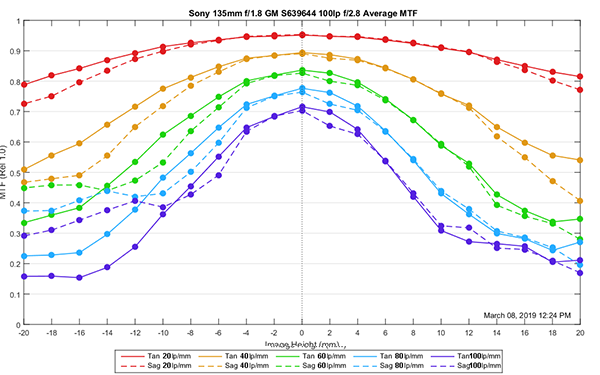

Indeed, look at this Sony at f/2.8 giving well over MTF50 on-axis!

Don't know if that's "optimum aperture" for that f/1.4 lens and don't know the MTF curve for "a 3 um sensor" ...

Image by Roger Cicala www.lensrentals.com/blog/2019/03/sony-fe-135mm-f1-8-gm-early-mtf-results/

-

@davidwien has written:

LOL. With respect, that is a very vague statement! “On axis” says nothing about the majority of the area of a photo, “optimal aperture” is also rather restrictive, and “most decent lenses” needs definition: how many is “most”, and what is the criterion for “decent”?

I think it more appropriate to compare the results of using a given lens with 40MP and 20MP sensors of “agreed” quality. 😀

Another audio analogy, though inverted: what is the point of spending thousands of £/$/¥/€ on microphones, amplifiers, etc., if the result is to be played on a boom box? One expects to match the quality of a lens to the recording abilities of the camera: the glass bottoms of Coca Cola bottles are not appropriate as lenses for Alan's camera. 😀

Unfortunately, reviews seem not to make recommendations of suitable lens/camera combinations.

David

It is quite a specific statement. Ideally, sensors have a sampling frequency high enough that there is no aliasing. We are a long way from that.

-

@xpatUSA has written:

Indeed, look at this Sony at f/2.8 giving well over MTF50 on-axis!

Don't know if that's "optimum aperture" for that f/1.4 lens and don't know the MTF curve for "a 3 um sensor" ...

Image by Roger Cicala www.lensrentals.com/blog/2019/03/sony-fe-135mm-f1-8-gm-early-mtf-results/

I would like to see the MTF below 0.1 at the sampling frequency for a monochrome sensor, and lower still for a Bayer CFA.

-

@JimKasson has written:

It is quite a specific statement. Ideally, sensors have a sampling frequency high enough that there is no aliasing. We are a long way from that.

This is part of a photo taken with an a7R mk4, which has 3.76 micrometer pixels. The aperture was between f/8 and f/11, so diffraction was in play too.

What I see in the picture is aliasing, if I understand how aliasing looks in pictures.

I'll bet aliasing would also be visible in a picture taken with a 3.1 micrometer pixel pitch sensor.

Does that mean the lens outresolves the sensor?

-

@JimKasson has written:

It is quite a specific statement. Ideally, sensors have a sampling frequency high enough that there is no aliasing. We are a long way from that.

Well, let me put it another way: it is a very limited statement in terms of actually taking a photo outside of the lab.

David

-

@davidwien has written:@JimKasson has written:

It is quite a specific statement. Ideally, sensors have a sampling frequency high enough that there is no aliasing. We are a long way from that.

Well, let me put it another way: it is a very limited statement in terms of actually taking a photo outside of the lab.

David

The aliasing improvement in real-world photography when switching from the 50 MP to the 100 MP 33x44mm sensor was something I found important in my photography. But I still see aliasing with the GFX 100x and the Hasselblad X2D.

-

@davidwien has written:@JimKasson has written:

It is quite a specific statement. Ideally, sensors have a sampling frequency high enough that there is no aliasing. We are a long way from that.

Well, let me put it another way: it is a very limited statement in terms of actually taking a photo outside of the lab.

That's right. All test photos should be of like shrubbery and rolling hills, hand-held and JPEG with no EXIF to muddy matters.

-

@IliahBorg has written:@DonaldB has written:

stand back from the subject 10 or 20 feet and do the spin the camera upside down.

This has been known for ages to be a very crude and unreliable way of testing, and it doesn't allow one to select the best lens sample among three.

agree if you are blind or have no commonsense or practical nous. 🤔😜

-

@davidwien has written:

Another audio analogy, though inverted: what is the point of spending thousands of £/$/¥/€ on microphones, amplifiers, etc., if the result is to be played on a boom box? One expects to match the quality of a lens to the recording abilities of the camera: the glass bottoms of Coca Cola bottles are not appropriate as lenses for Alan's camera. 😀

Unfortunately, reviews seem not to make recommendations of suitable lens/camera combinations.

David

Agree totally. I went through this process setting up a recording studio for my daughter. High-quality studio monitors and headphones are paramount.

-

@DonaldB has written:@IliahBorg has written:@DonaldB has written:

stand back from the subject 10 or 20 feet and do the spin the camera upside down.

This has been known for ages to be a very crude and unreliable way of testing, and it doesn't allow one to select the best lens sample among three.

agree if you are blind or have no commonsense or practical nous. 🤔😜

Look, Aristotle!

-

@davidwien has written:@JimKasson has written:

It is quite a specific statement. Ideally, sensors have a sampling frequency high enough that there is no aliasing. We are a long way from that.

Well, let me put it another way: it is a very limited statement in terms of actually taking a photo outside of the lab.

David

It is easier to produce aliasing in a lab, but you don't need a lab to produce aliasing. As long as you have sufficient optical sharpness and camera/subject stability relative to pixel density, aliasing is always possible. What's so annoying about aliasing is that for any given sensor, the sharper the lens that you use and the more stable things are, which should be good things, the more likely you are to get visible aliasing.

Every Bayer capture that is moderately pixel-sharp has aliasing in the red and blue channels if the subject matter has aliasing potential; the raws are generally converted to "play dumb" with pixel-level color detail and hide the wild chromatic artifacts of color aliasing. This is true even of sensors with AA filters, because the point spread of the AA filter is small relative to the pixel spacing in the red and blue channels. If it were big enough to greatly reduce aliasing in the red and blue channels, individual points of light could get ghosted into 4 distinct visible points in the implied luminance channel.
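To put a number on the red/blue point, here is a minimal sketch of where a fine pattern ends up after sampling. It assumes the standard Bayer layout, in which the red and blue photosites each sit on a grid with twice the pixel pitch; it treats the scene as a single sinusoid and ignores demosaicing, the AA filter and lens blur. The function names and the 100 cycles/mm example are made up for illustration.

```python
def sampling_frequency(pitch_um: float) -> float:
    """Samples per mm for a grid with the given spacing in micrometers."""
    return 1000.0 / pitch_um


def alias_frequency(f: float, fs: float) -> float:
    """Apparent frequency of a sinusoid at f after sampling at fs.

    Frequencies above fs/2 fold back into the range 0..fs/2.
    """
    return abs(f - round(f / fs) * fs)


pixel_pitch_um = 3.76       # pitch mentioned in the thread
detail = 100.0              # hypothetical scene detail, cycles/mm on the sensor

fs_full = sampling_frequency(pixel_pitch_um)        # every photosite
fs_red = sampling_frequency(2.0 * pixel_pitch_um)   # red (or blue) sites only

print(f"full grid : Nyquist {fs_full / 2:6.1f}, detail appears at "
      f"{alias_frequency(detail, fs_full):6.1f} cycles/mm")
print(f"red sites : Nyquist {fs_red / 2:6.1f}, detail appears at "
      f"{alias_frequency(detail, fs_red):6.1f} cycles/mm")
```

In this example the detail sits below the Nyquist frequency of the full grid but well above that of the red sites, so it is recorded faithfully in one sense and folded to a much lower, false frequency in the red channel.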

I think it is more productive to think in terms of the frequency of artifacts for any given usage and equipment, rather than "real world" vs "lab". How much can a lab improve over real world for stability, using a relatively wide lens and f/4 in sunlight at 1/1000? Why talk "real world vs lab" when one can easily tell the full story of "hand-held after too much caffeine vs tripod"?

-

@TimoK has written:

This is part of a photo taken with an a7R mk4, which has 3.76 micrometer pixels. The aperture was between f/8 and f/11, so diffraction was in play too.

What I see in the picture is aliasing, if I understand how aliasing looks in pictures.

I'll bet aliasing would also be visible in a picture taken with a 3.1 micrometer pixel pitch sensor.

Does that mean the lens outresolves the sensor?

I see no aliasing here.

I would expect aliasing to show up most clearly in a photo of a city scene, with plenty of slanted straight edges on buildings.

Don

-

@DonCox has written:@TimoK has written:

This is part of a photo taken with an a7R mk4, which has 3.76 micrometer pixels. The aperture was between f/8 and f/11, so diffraction was in play too.

What I see in the picture is aliasing, if I understand how aliasing looks in pictures.

I'll bet aliasing would also be visible in a picture taken with a 3.1 micrometer pixel pitch sensor.

Does that mean the lens outresolves the sensor?

I see no aliasing here.

I would expect aliasing to show up most clearly in a photo of a city scene, with plenty of slanted straight edges on buildings.

Don

I see aliasing, even though there is a bit of softness in the image. In this case, it would be pretty easy to deal with in post.

-

@JimKasson has written:

I see aliasing, even though there is a bit of softness in the image. In this case, it would be pretty easy to deal with in post.

f/11 is enough to suppress aliasing effectively, but not completely, with a 3.76 um sensor.
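A rough check of that claim, under stated assumptions: the sketch below uses the textbook MTF of an ideal, aberration-free lens with a circular aperture and evaluates it at the sensor's Nyquist frequency. The 0.55 um wavelength (green light) and the function name are assumptions for illustration, and the pixel aperture, AA filter and lens aberrations are all ignored, so real contrast would be lower.

```python
import math


def diffraction_mtf(freq_cyc_mm: float, f_number: float,
                    wavelength_um: float = 0.55) -> float:
    """MTF of an ideal diffraction-limited lens with a circular aperture.

    wavelength_um defaults to 0.55 um, an assumed value for green light.
    """
    cutoff = 1000.0 / (wavelength_um * f_number)  # cutoff frequency, cycles/mm
    x = freq_cyc_mm / cutoff
    if x >= 1.0:
        return 0.0  # nothing gets through above the diffraction cutoff
    return (2.0 / math.pi) * (math.acos(x) - x * math.sqrt(1.0 - x * x))


pixel_pitch_um = 3.76
nyquist = 1000.0 / (2.0 * pixel_pitch_um)  # cycles/mm

for f_number in (4.0, 8.0, 11.0, 16.0):
    mtf = diffraction_mtf(nyquist, f_number)
    print(f"f/{f_number:g}: diffraction MTF at Nyquist ({nyquist:.0f} cycles/mm) "
          f"is about {mtf:.2f}")
```

With these assumptions the diffraction-only MTF at Nyquist comes out at roughly 0.3 at f/8 and roughly 0.1 at f/11, which fits "effectively, but not completely": there is still a little contrast above Nyquist left to alias.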