You guys... I haven't seen such a scattered mess for a long time. How about a coherent sample and a walkthrough in one post? Anyone? Would it help solve anything if I did it?

-

It's already been done earlier in this thread.

-

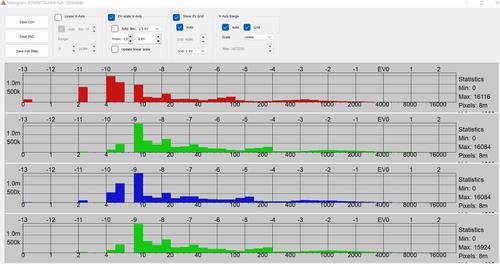

The raw histogram shows that the reds in the raw data are a bit more than 2 stops underexposed, the greens are about 1 stop underexposed, and the blues are about 2 stops underexposed.

This shows you could potentially have added 1 stop of exposure*, thus increasing the SNR, and then lowered the image brightness in post by 1 stop. This would have resulted in an image with the same lightness as the image you posted but with less visible noise, as per the example explanation in my previous post.
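To make the stop arithmetic concrete, here is a minimal Python sketch. The black level, white level and per-channel maxima are hypothetical values chosen for illustration, not numbers read from the posted file. Each stop is a doubling of light, so the headroom in stops is the base-2 logarithm of the usable range (white level minus black level) divided by the channel's brightest signal above black. The channel closest to clipping limits how much exposure can be added.

```python
import math

# Hypothetical 14-bit sensor values (assumptions, not read from the posted file).
BLACK_LEVEL = 512     # raw value the sensor records with no light
WHITE_LEVEL = 16383   # raw value at which the sensor clips

def stops_below_clipping(channel_max, black=BLACK_LEVEL, white=WHITE_LEVEL):
    """Stops of exposure that could be added before the channel's
    brightest raw value reaches the clipping point."""
    signal = channel_max - black      # light-generated part of the value
    if signal <= 0:
        raise ValueError("channel maximum must be above the black level")
    headroom = white - black          # largest signal the sensor can record
    return math.log2(headroom / signal)

# Hypothetical brightest raw value per channel, for illustration only.
channel_max = {"red": 3300, "green": 8200, "blue": 3900}

stops = {ch: stops_below_clipping(v) for ch, v in channel_max.items()}
for ch, s in stops.items():
    print(f"{ch}: {s:.2f} stops below clipping")

# The channel closest to clipping limits how much exposure can be added.
extra = min(stops.values())
print(f"exposure that could be added: {extra:.2f} stops")

# Photon shot noise grows as the square root of the signal, so in the
# shot-noise-limited case each extra stop (2x light) improves SNR by ~1.41x.
print(f"SNR gain for +{extra:.2f} stops: {math.sqrt(2 ** extra):.2f}x")
```

With these made-up numbers green is the limiting channel at roughly 1 stop of headroom, which is the same kind of reasoning as the "add 1 stop, then pull it back in post" suggestion above.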

* exposure - amount of light striking the sensor per unit area while the shutter is open

** optimal exposure - the maximum exposure* within DOF and motion blur requirements without clipping important highlights.

*** underexposed - more exposure* could have been added while still meeting the DOF and blur constraints and without clipping important highlights.

-

Please be my guest 😁 Let's see if you can get another 200 conflicting responses to a very basic question I asked, which, BTW, still isn't answered.

-

So there is still stuff unsolved, not agreed upon, and confusing? That's rough.

Okay, let me make sure which question you mean as the original one. I do not want to make a mistake here. Is it the one in the topic name?

-

Warned where? Not in our mediation with Admin. In the mediation, you complained about being stalked, and I agreed to stop referring to you as a fool etc. in posts, which I have done.

I also said in the mediation that if you continue to post erroneous information I will continue to correct it just like everyone else is free to do.

You are claiming that you have been given incorrect answers, even after Iliah Borg posted that you were given correct information.

There is nothing wrong with pointing out that your opinions are not correct.

-

Good luck 🙂, but if you look through this entire thread, you will see that all of DonaldB's questions, including the OP, have been answered correctly. For some reason, he either still doesn't understand the answers or doesn't want to accept them.

-

Quite frankly, as a dev engineer and geek in electronics and cameras, with a C1/C2 command of the English language, I have a very hard time chewing through such a sentence. Without proper comma divisions, it is not at all obvious what the hierarchy of the clauses is, so one can derive multiple meanings from it. I did chomp through it, but it took me five rounds to be sure.

You are right, but it is not an easy subject. People might need a more basic approach to understand such a complex subject, especially all at once. Don't take this as saying you are not clever and quick with it. Maybe too quick. Expecting someone to just get it might not be the right course of action. I also felt like losing patience with the OP (i.e., leaving the thread), but now I see something different.

//Edit: The questions were answered correctly, but even for someone who understands the subject, it gave me a headache to read through, and especially to understand and, let's say, "agree" with. I cannot see anyone with a lesser degree of already internalised understanding making sense of this communication.

The OP is a little slow or a tough nut, maybe, but I don't see you coming forward to meet that.

-

Yes, the topic name. I'm still working, so I will watch from the sideline and hope I don't screw up my factory drawings 😁

-

Great.

For the topic name alone:

A raw histogram is a graph representing the numerical data captured by the camera sensor.

What data? The saturation levels of the individual pixels of the camera sensor, or, put the other way round, the number of pixels at each possible saturation level. The horizontal axis of the histogram shows the saturation levels, and the vertical axis shows how many pixels are at each particular level. Therefore the left part of the histogram represents the dark-toned parts of the image, and the right side shows the bright tones. The taller the graph is at a given point, the more pixels occupy that saturation level.
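The counting described above can be sketched in a few lines of Python. This is a minimal illustration with hypothetical pixel values; `raw_histogram` and its `bin_width` parameter are made-up names. A real raw histogram tool would read the values from the raw file and usually plot each color channel of the sensor's filter array separately.

```python
from collections import Counter

def raw_histogram(raw_values, bin_width=1):
    """Count how many pixels fall at each raw level, or in each bin of
    `bin_width` levels. Keys are the lower edge of each bin (x axis),
    values are pixel counts (y axis)."""
    counts = Counter((v // bin_width) * bin_width for v in raw_values)
    return dict(sorted(counts.items()))

# Hypothetical raw values from a handful of pixels of one channel.
pixels = [130, 131, 131, 400, 402, 1020, 4095, 4095, 4095]
print(raw_histogram(pixels, bin_width=256))
# {0: 3, 256: 2, 768: 1, 3840: 3}
```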

If a pixel gets more light than it can capture, it stays fully saturated at its highest value, and you will see it as the rightmost value on the histogram, no matter how much more light than its full capacity it received. So if you see a lot of pixels at the far right, there is a high probability that the sensor was oversaturated and the real image information is lost, because the sensor could not record that much light. A raw histogram gives you the means to avoid that next time, if you take the appropriate action.
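A rough clipping check along the same lines, again only a sketch with hypothetical values and an assumed 12-bit white level of 4095: any pixel at the white level is counted as clipped, because its value no longer tells you how much light it actually received.

```python
def clipped_fraction(raw_values, white_level):
    """Fraction of pixels sitting at (or above) the clipping value.
    Such pixels only say 'at least this much light', not how much."""
    if not raw_values:
        return 0.0
    clipped = sum(1 for v in raw_values if v >= white_level)
    return clipped / len(raw_values)

# Hypothetical raw values; 4095 is an assumed 12-bit white level.
pixels = [130, 131, 131, 400, 402, 1020, 4095, 4095, 4095]
print(f"{clipped_fraction(pixels, white_level=4095):.1%} of pixels clipped")
# 33.3% of pixels clipped
```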

It also lets you saturate the sensor more if it is less than ideally saturated. Most image-processing software does not show you a raw histogram; it shows you either a pre-processed image histogram or an output histogram.
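One reason a processed histogram (including the one most cameras show, which is typically based on the processed JPEG preview) can mislead is that it is computed after white balance gains and a tone curve have been applied. The sketch below assumes made-up white balance gains and uses a simple 1/2.2 power curve as a stand-in for the camera's real tone curve. Raw channels sitting 2, 1 and about 1.7 stops below clipping all come out at the same 8-bit level, and a raw red value that is not clipped can show up as clipped after the gain.

```python
# Hypothetical white balance gains; real values depend on camera and light.
WB_GAINS = {"red": 2.0, "green": 1.0, "blue": 1.6}
GAMMA = 1 / 2.2   # simple power curve standing in for the camera's tone curve

def jpeg_level(raw_fraction, gain):
    """Approximate 8-bit output value for a raw value given as a
    fraction of the raw clipping point (1.0 = clipped in the raw)."""
    linear = min(raw_fraction * gain, 1.0)   # apply WB gain, then clip at white
    return round(255 * linear ** GAMMA)

# Hypothetical raw levels: 2, 1 and ~1.7 stops below raw clipping.
raw = {"red": 0.25, "green": 0.5, "blue": 0.3125}
for ch, frac in raw.items():
    print(f"{ch}: raw {frac:.1%} of clipping -> JPEG {jpeg_level(frac, WB_GAINS[ch])}")

# Red at 60% of raw clipping is not clipped in the raw file,
# but after a 2x gain it reads as fully clipped (255) in the output.
print("red at 60% raw ->", jpeg_level(0.6, WB_GAINS["red"]))
```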

Why does it matter?

It matters because it allows you to best evaluate and use the captured data, and possibly to capture more data next time. In practice, it lets you make the best use of the camera sensor's capabilities, giving you the most image quality to work with. Using anything other than the raw data makes misinterpretation or misuse much more likely, leaving data and image quality unused or ruined.

For the image posted:

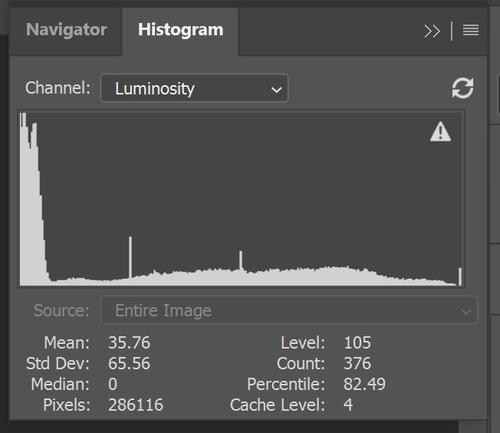

We have no way of knowing which one is raw, or if any of these are raw. There is a high probability that none of these are raw histograms, given the program used: it is not a tool for showing raw histograms, but a tool for processing raw images, and it shows you the histogram of the processed output.

The graph with the peak cut off significantly does not present all the data to you, because the vertical range of the graph is limited. That is a display issue, not an issue with the captured data.
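The cut-off peak can be reproduced with a toy drawing routine. The hypothetical `bar_heights` function below maps pixel counts to on-screen bar heights with a fixed scale and caps every bar at the plot height, so the tallest bar is clipped in the drawing while the counts themselves are untouched. A display can avoid this by scaling to the tallest bar or by using a logarithmic vertical axis.

```python
def bar_heights(counts, plot_height, scale):
    """Map histogram counts to on-screen bar heights in pixels.
    `scale` is pixels per count; bars taller than the plot are capped."""
    return [min(round(c * scale), plot_height) for c in counts]

counts = [120, 900, 15000, 700, 60]   # hypothetical pixel counts per bin
shown = bar_heights(counts, plot_height=100, scale=0.05)
print(shown)    # [6, 45, 100, 35, 3]: the 15000 bin is drawn at the ceiling
print(counts)   # the underlying counts are unchanged
```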

If anything is unclear, feel free to point that out for further explanation.

-

Here is a raw file. Have a look and tell me how close I am to the perfect exposure.

-

@CrashpcCZ has written:

Most image-processing software does not show you a raw histogram; it shows you either a pre-processed image histogram or an output histogram.

I totally understand this statement, but the image I just linked was exposed using the in-camera histogram information, and I would like to know if a raw histogram has any benefit over my in-camera histogram, and if so (I'm skeptical), by how much.

-

@DonaldB has written:

Here is a raw file. Have a look and tell me how close I am to the perfect exposure.

You have been shown many times in this thread, using your own posted raw histograms, how to see how underexposed the raw data is.

You can do this by yourself now, unless your intention is to troll.

How much underexposure do you see in the actual raw data of the file you attached?

-

@DonaldB has written:@IanSForsyth has written:@DonaldB has written:

So answer my question: why is the raw histogram important compared to the in-camera histogram?

The raw histogram should be telling you why. Looking at the raw histogram, it shows that for that raw file you are underexposing by 1 stop (half the amount of light your camera can store), while your camera's histogram does not show this, and it suggests that your red and blue channels captured the same amount of light when they did not.

I'm shooting myself in front of a pure white background in my studio, wearing a white/blue striped shirt and blue shorts.

Did it hurt?

-

@DannoB has written:@DonaldB has written:

Here is a raw file. Have a look and tell me how close I am to the perfect exposure.

You have been shown many times in this thread, using your own posted raw histograms, how to see how underexposed the raw data is.

You can do this by yourself now.

How much underexposure do you see in the actual raw data of the file you attached?

Please let the guy draw his own conclusions.

If you want to enter this discussion, then post your own perfectly exposed raw images with a RawDigger and a Photoshop histogram.

-

@Robert1955 has written:@DonaldB has written:@IanSForsyth has written:@DonaldB has written:

So answer my question: why is the raw histogram important compared to the in-camera histogram?

The raw histogram should be telling you why. Looking at the raw histogram, it shows that for that raw file you are underexposing by 1 stop (half the amount of light your camera can store), while your camera's histogram does not show this, and it suggests that your red and blue channels captured the same amount of light when they did not.

I'm shooting myself in front of a pure white background in my studio, wearing a white/blue striped shirt and blue shorts.

Did it hurt?

😂🤣😂🤣 After starting this thread, that might be a good outcome 😁😂🤣

-

@Robert1955 has written:@DonaldB has written:@IanSForsyth has written:@DonaldB has written:

So answer my question: why is the raw histogram important compared to the in-camera histogram?

The raw histogram should be telling you why. Looking at the raw histogram, it shows that for that raw file you are underexposing by 1 stop (half the amount of light your camera can store), while your camera's histogram does not show this, and it suggests that your red and blue channels captured the same amount of light when they did not.

I'm shooting myself in front of a pure white background in my studio, wearing a white/blue striped shirt and blue shorts.

Did it hurt?

Well... he's still posting, so I guess he missed 😉