OK, last try at explaining RAW histograms (in post-processing) and why they matter, as simply as possible.

This time with pictures and as little techno-speak as possible. Hopefully this will help make some sense of the previous discussion. I walked outside and shot this not so exciting flower for this purpose - no great art here, just an example that will have some relevance to the discussion.

I exposed this with the most important highlight detail recorded all the way "to the right" (ETTR), just below clipping. How do we know it's just below clipping? Read on.

Here are the initially poor results...

The RAW file with default Lightroom import processing is on the left and the SOOC JPEG is on the right, with their respective Lightroom histograms below them. Hmm, the Lightroom histograms do not show any highlight clipping for either image, yet there clearly appears to be a significant loss of highlight detail in both, though the camera did better.

What's going on here? After all the preceding discussion, it should be clear by now that the histograms we see in our editors (most, but not all, editors) represent only the image we are looking at on the screen after processing; they do not show what information is present in the RAW file. "Hey, wait a minute, I didn't apply any processing, that's just how it opened." While it's true that no sliders were moved in developing that image, if you're looking at an image from a RAW file in your editor, significant processing has already been applied to the RAW data "under the hood" before you ever get to see it and, in this case, it messed some stuff up.
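For anyone who finds code easier than words, here is a toy sketch of that idea. It is not Lightroom's actual pipeline (I don't know what that does internally); the `render` function, the `gain` parameter, the sample values and the round 16000 clip level are all made up for illustration. It only shows that the same unclipped RAW numbers can come out blown or intact depending on the processing applied before you see them.

```python
def render(raw_value, clip_level, gain):
    """Toy rendering (hypothetical): scale to 0..1, apply gain, clamp, gamma-encode to 8 bits."""
    linear = min(1.0, gain * raw_value / clip_level)
    return round(255 * linear ** (1 / 2.2))

# Made-up RAW highlight samples, all below the assumed clip level of 16000.
raw_highlights = [12000, 13000, 14000, 15000, 15900]

# Same RAW data, two different "under the hood" settings.
default_like = [render(v, 16000, gain=1.5) for v in raw_highlights]
pulled_back = [render(v, 16000, gain=1.0) for v in raw_highlights]

print("default-like:", default_like)  # highlights collapse to a single value
print("pulled back: ", pulled_back)   # highlights stay separated
```

The RAW samples never change between the two renderings; only the processing does, and a histogram built from the rendered values changes right along with it.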

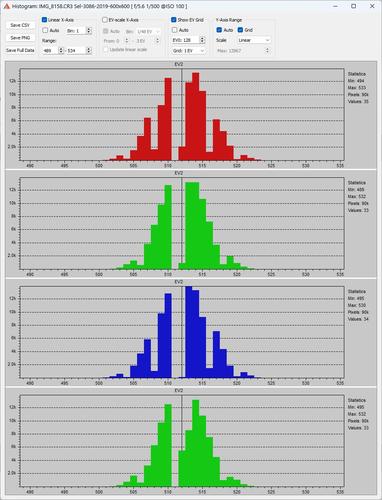

Let's look at the RAW Histogram for this file (via RawDigger, thanks Iliah) that is representative of the information present in the RAW file...

![2023-04-15 16_15_06-Histogram_ XT2S6465.RAF Full-6032x4028 [ Fujifilm X-T2 f_4.0 1_180 @ISO 200 ].jpg](/a/thumb/XP86vq6cHybeXUX2us9bq5JnqrkthtILnPWpN2kej7fW5enqpUogcNkvpC0tSqLX/3554/?shva=1)

As can be gleaned from this, all three channels are intact. The green channel is maxed out and extends all the way up to, but not significantly into, clipping. It's generally considered wise to leave yourself something of a safety buffer, but here we have pretty well maxed out the exposure for this image. If there were significant highlight clipping present, you would see a spike at the clipping threshold at the right side of the histogram (just below 16000). Also, note where the EV 0 line is here (Donald B): for clarity, I moved it from the usual -3 EV to where the clipping threshold actually is (it's really just a handy scale to use as you see fit).
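If it helps, the two things I'm reading off that histogram (is there a spike at the clipping threshold, and how far below it does the channel peak) can be sketched in a few lines. This is only my illustration, not how RawDigger computes anything: `clipping_report` is a hypothetical helper, the sample values are invented, and 16000 is a round stand-in for the real threshold.

```python
import math

def clipping_report(samples, clip_level):
    """Summarize how close one channel's raw samples come to clipping."""
    peak = max(samples)
    clipped = sum(1 for s in samples if s >= clip_level)
    return {
        "peak": peak,
        # Share of samples sitting at or above the threshold (the "spike").
        "clipped_fraction": clipped / len(samples),
        # EV relative to clipping: 0 is at the threshold, -1 is one stop below.
        "peak_ev": math.log2(peak / clip_level) if peak > 0 else float("-inf"),
    }

# Invented green-channel samples and an assumed clip level, for illustration only.
CLIP_LEVEL = 16000
green = [900, 4200, 11800, 15200, 15900]

report = clipping_report(green, CLIP_LEVEL)
print(report)
```

With the EV 0 line placed at the clipping threshold, a peak that sits a hair below zero EV with a clipped fraction of zero is the "up to, but not into, clipping" situation described above.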

So, if the RAW highlight detail wasn't clipped and the exposure was OK, why does the flower look like crap? Because the in-camera processing and Lightroom's default import processing both got it wrong. Usually Lightroom's (and other editors') default import processing is easy enough to work with, but sometimes it really makes a mess of things and you have to work around it (a subject for another thread). A RAW histogram won't fix any of this for you, but it can be a great diagnostic tool for troubleshooting potential exposure problems (as we did here), and can (with some trial and error) be invaluable for dialing in a reliable methodology for making precisely controlled RAW exposures (this example took me exactly one try).

My exposures are almost always as expected now, so RawDigger isn't something I use regularly, but it was a great tool for getting me to that point and, of course, it's especially useful for making sense of any sort of exposure issue.

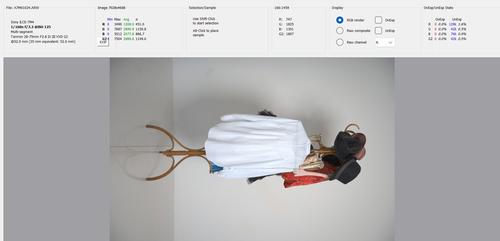

Here's a before and after with the same RAW file, again with the Lightroom histograms below each one. Note that despite the very significant difference in highlight detail between the two images, the two Lightroom histograms don't look all that dissimilar...

On the left is the same inexplicably blown RAW default import processing result. On the right is the same file but with different Lightroom development settings ...and how about that, the RAW Histogram was correct, there was viable highlight detail after all (but it's still not an especially good photo).

I hope some of that made sense.
