There's nothing complicated here; if you separate a 3-channel raw image into 3 greyscale images, you are seeing simply that; nothing is interpreted, color-wise, in the creation of the raw file; the file simply also has metadata that tells a converter how to derive realistic color from the differences between those 3 greyscale images.

-

@JohnSheehyRev has written:@DonaldB has written:@GreatBustard has written:@MarshallG has written:

So for the most part, Canon, Sony and Nikon “colors” are present in the JPEG files produced by the cameras but only to a minor extent present in the RAW files.

RAW files don't have color. Color is created in the conversion process, and different RAW converters will create different colors.

That's not true 🤪 The voltages are measured from the coloured filter/diode, and I believe every sensor maker has their own colour mixtures.

There's nothing complicated here; if you separate a 3-channel raw image into 3 greyscale images, you are seeing simply that; nothing is interpreted, color-wise, in the creation of the raw file; the file simply also has metadata that tells a converter how to derive realistic color from the differences between those 3 greyscale images.

Some might find that a bit puzzling - especially "the differences between". I think it has more to do with ratios between levels extracted by matrices or LUTs.

Example matrix, sensor outputs to XYZ:

|   | camRed | camGreen | camBlue |
|---|---|---|---|
| X | 0.14759 | 0.65183 | -0.13036 |
| Y | -0.83358 | 2.43696 | -0.93664 |
| Z | 0.60299 | -2.61102 | 2.7869 |

This is from a Foveon-based camera; for a CFA-based camera, the coefficients would be much less extreme.
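To make the "ratios between levels" point concrete, here is a minimal Python sketch that applies the Foveon matrix above to one pixel. It assumes the camera values are linear and black-level subtracted (the post doesn't say what normalisation the matrix expects), and the pixel values are made up. Because the mapping is linear, scaling the input scales X, Y and Z by the same factor, so the xy chromaticity depends only on the ratios between the three channels.

```python
# Camera-to-XYZ matrix quoted above (rows X, Y, Z; columns camRed, camGreen, camBlue).
CAM_TO_XYZ = [
    [0.14759, 0.65183, -0.13036],
    [-0.83358, 2.43696, -0.93664],
    [0.60299, -2.61102, 2.7869],
]


def cam_to_xyz(cam, matrix=CAM_TO_XYZ):
    """Multiply a (camRed, camGreen, camBlue) triplet by a 3x3 matrix."""
    return tuple(sum(row[i] * cam[i] for i in range(3)) for row in matrix)


def xy_chromaticity(xyz):
    """CIE xy chromaticity: X and Y each divided by X + Y + Z."""
    X, Y, Z = xyz
    total = X + Y + Z
    return X / total, Y / total


# Hypothetical linear raw values for one pixel, and the same pixel one stop brighter.
pixel = (0.2, 0.5, 0.6)
brighter = tuple(2.0 * v for v in pixel)

print(cam_to_xyz(pixel))
print(xy_chromaticity(cam_to_xyz(pixel)))
print(xy_chromaticity(cam_to_xyz(brighter)))  # same xy: only the ratios matter
```

A real converter would also apply white balance and possibly LUTs on top of this; the sketch only shows the matrix step.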

-

[deleted]

-

@GreatBustard has written:@xpatUSA has written:@GreatBustard has written:@MarshallG has written:

So for the most part, Canon, Sony and Nikon “colors” are present in the JPEG files produced by the cameras but only to a minor extent present in the RAW files.

RAW files don't have color. Color is created in the conversion process, and different RAW converters will create different colors.

Are you sure?

This is a raw composite (no conversion) image exported from a Lumix G9 raw file:

Perhaps we are talking at cross-purposes. It is obvious that without a CFA over the photocells the sensor outputs would have no color ... but the raw file does include the effect of the CFA as can be seen above.

But what you posted was a jpg, so, naturally, it has color.

The posted image was converted from a TIFF which looked no different on my computer. The TIFF was a raw composite with no conversion whatsoever. Therefore, I fail to see your point, sorry.

Quoted message: But, assuming we could view a RAW file, wouldn't it be a bunch of pixels that are all entirely red, green, or blue?

No. That is overly colloquial.

Quoted message: And wouldn't the hue of the red, green, and blue necessarily correlate to the dyes in the CFA?

Sorry, I don't understand the question.

-

@JACS has written:

Sure. My point was that this is the starting point: to understand the data. We can’t just say that as long as you shoot RAW and process to taste, you get any colors you like. This is kind of true, but it doesn’t mean that you get anything close to the actual ones or that you can always get the same output from different cameras. The next step is to understand what the goal is and how it can be achieved. The way I see it, the data is a projection of the infinite-dimensional space of spectra to a 3D space determined by the camera+lens, a priori dependent on the latter, and not quite the same as the 3D space of our eyes.

I would say that there is a step before understanding the data, which is understanding the information - that is, what the data represents. I would argue with the concept of 'actual colours'. Colours are something that doesn't exist in the physical world; there are only photon energies (wavelengths of light). Colours are entirely part of human perception. This is an instance of an often misunderstood concept, about which I bang on frequently. The misunderstanding ultimately underpins many of the fallacies surrounding photography. The misunderstanding is that the input and output of the photographic process are both about light. This is wrong. The input is about light, and for colour photography, light separated into different energy bands. The output is about human perception - it is a recipe that specifies how a human being should perceive the scene. It's on this point that one can argue that a raw file doesn't encode 'colour' - rather it encodes light energy in different frequency bands. We generally use three frequency bands (though that's not a necessity), but they don't contain all of the information necessary to create the output image. That is because human perception is adaptive to lighting conditions, so we can't translate to an XYZ (perceptual) space without some information about the lighting conditions. To some extent that information will be in the metadata, in terms of the 'colour temperature' - but this is a very rough and ready indicator. Essentially, it assumes that the lighting is provided by a black-body radiator at a particular temperature. Many actual illuminants don't follow those rules, and thus we get metamerism errors in many translations.
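The 'colour temperature' tag refers to the spectrum of an ideal black-body radiator, which is given by Planck's law. A short Python sketch (the temperatures and the 50 nm sampling are arbitrary choices for illustration) shows how that single number fixes the whole shape of the assumed spectrum, which is why a spiky LED or fluorescent spectrum is only loosely described by it.

```python
import math

# Physical constants (SI units).
H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck_radiance(wavelength_nm, temperature_k):
    """Spectral radiance of a black body (W * sr^-1 * m^-3) from Planck's law."""
    lam = wavelength_nm * 1e-9
    return (2 * H * C**2 / lam**5) / math.expm1(H * C / (lam * K_B * temperature_k))


def relative_spd(temperature_k, wavelengths_nm=range(400, 701, 50)):
    """Black-body spectrum sampled at 400, 450, ..., 700 nm, normalised to 1.0 at 550 nm."""
    ref = planck_radiance(550, temperature_k)
    return {w: planck_radiance(w, temperature_k) / ref for w in wavelengths_nm}


for t in (3000, 6500):
    spd = relative_spd(t)
    print(t, {w: round(v, 3) for w, v in spd.items()})
```

At 3000 K the curve rises towards the red end; at 6500 K it is much flatter with relatively more blue. Any real illuminant whose spectrum departs from these smooth curves is where the metamerism errors come in.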

@JACS has written: The goal is to create another 3D output which, presented to our eyes, would look like the actual spectrum more or less without being it. This cannot be done exactly, so it is a kind of an optimization problem with additional constraints, and tweaks of the cost function, like the DR of our monitors, what might look nicer rather than more accurate, etc.

Not quite, because the gamut of perceptual hues is larger than the set of spectral hues. Magenta, for instance, is a hue that doesn't occur in the spectrum. The reason for this is that human vision is not measuring the wavelength of light. It's just looking at the magnitude of a stimulus in three different (and overlapping) wavebands. It's made somewhat worse because mammalian tri-stimulus vision is a later adaptation of bi-stimulus vision, whereby the lower frequency band split into two, leaving us with an 'S' band well separated from the largely overlapped 'M' and 'L' bands. Birds (and presumably dinosaurs before them) have quadri-stimulus vision separated into nice, evenly spaced bands. That's one reason dinosaurs never invented colour photography.

-

[deleted]

-

@bobn2 has written:

Magenta, for instance, is a hue that doesn't occur in the spectrum. The reason for this is that human vision is not measuring the wavelength of light. It's just looking at the magnitude of a stimulus in three different (and overlapping) wavebands.

Isn't it also because the S band is also sensitive to some red? So in effect even as you move to the ends of the spectrum you are still creating a signal across two types of cone.

@bobn2 has written: It's made somewhat worse because mammalian tri-stimulus vision is a later adaptation of bi-stimulus vision, whereby the lower frequency band split into two, leaving us with an 'S' band well separated from the largely overlapped 'M' and 'L' bands.

That was in the days before the eye had even developed a lens. The question to bear in mind is how colour vision developed: it's this way because it gives us a greater chance of survival, not because light does so and so.

@bobn2 has written: That's one reason dinosaurs never invented colour photography.

Imagine the forum arguments, bloodbath...

-

@JACS has written:

First, I did not even mention "color." Next, when I talk about spectra, I mean spectral densities. I often forget that some people have this naive idea that we see spectral colors or individual wavelengths. The 3D projection I mentioned consists of 3 weighted averages of the spectral density being imaged.

OK
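The projection JACS describes (three weighted sums of a sampled spectral density) and the metamerism bobn2 mentions can both be sketched in a few lines of Python. The Gaussian sensitivity curves are invented for illustration and are not any real camera's or the eye's. Removing from an arbitrary perturbation its component in the span of the three curves (a Gram-Schmidt projection) gives a "metameric black": adding it changes the spectrum but leaves all three channel values unchanged.

```python
import math

WAVELENGTHS = list(range(400, 701, 10))  # 31 samples, 400-700 nm


def gaussian(peak_nm, width_nm):
    """Hypothetical sensitivity curve sampled at WAVELENGTHS."""
    return [math.exp(-0.5 * ((w - peak_nm) / width_nm) ** 2) for w in WAVELENGTHS]


# Invented channel sensitivities; real CFA or cone curves look different.
SENSITIVITIES = [gaussian(600, 40), gaussian(540, 40), gaussian(460, 30)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def project(spectrum):
    """The 3D 'projection': one weighted sum of the spectrum per channel."""
    return [dot(s, spectrum) for s in SENSITIVITIES]


def orthonormal_basis(vectors):
    """Gram-Schmidt orthonormalisation of the sensitivity curves."""
    basis = []
    for v in vectors:
        for q in basis:
            c = dot(v, q)
            v = [vi - c * qi for vi, qi in zip(v, q)]
        norm = math.sqrt(dot(v, v))
        basis.append([vi / norm for vi in v])
    return basis


def metameric_black(perturbation):
    """Remove the part of `perturbation` that the three channels can 'see'."""
    p = list(perturbation)
    for q in orthonormal_basis(SENSITIVITIES):
        c = dot(p, q)
        p = [pi - c * qi for pi, qi in zip(p, q)]
    return p


flat = [1.0] * len(WAVELENGTHS)                     # equal-energy spectrum
wiggle = [math.sin(w / 15.0) for w in WAVELENGTHS]  # arbitrary perturbation
metamer = [f + 0.1 * b for f, b in zip(flat, metameric_black(wiggle))]

print(project(flat))
print(project(metamer))  # same three numbers, different (still positive) spectrum
```

Whatever happens downstream of the three numbers, the converter cannot tell these two spectra apart.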

-

@Andrew564 has written:@bobn2 has written:

Magenta, for instance, is a hue that doesn't occur in the spectrum. The reason for this is that human vision is not measuring the wavelength of light. It's just looking at the magnitude of a stimulus in three different (and overlapping) wavebands.

Isn't it also because the S band is also sensitive to some red? So in effect even as you move to the ends of the spectrum you are still creating a signal across two types of cone.

The S cone's response is essentially 0 at 550nm and above, so no, it has no significant response in 'red'. The 'L' cone's response peters out at 400nm at the short end, well into the 'violets'. Magenta is caused by essentially equal stimulation of S and L cones, with minor stimulation of M cones, which is impossible with a single wavelength.
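The argument can be checked with a toy model. The Gaussian "cone" curves below are crude stand-ins invented for illustration, not the CIE cone fundamentals (a real L cone, for instance, is not Gaussian at the short end). Even so, in this model no single wavelength between 380 and 700 nm stimulates both S and L more than M, while a mixture of short- and long-wavelength light does, which is the magenta-like pattern described above.

```python
import math

# Crude Gaussian stand-ins for cone sensitivities: (peak nm, width nm).
# These are illustrative assumptions, not measured cone fundamentals.
CONES = {"S": (440, 30), "M": (535, 40), "L": (565, 45)}


def cone_response(spectrum):
    """spectrum: dict of wavelength (nm) -> power. Returns S, M, L responses."""
    return {
        name: sum(p * math.exp(-0.5 * ((w - peak) / width) ** 2)
                  for w, p in spectrum.items())
        for name, (peak, width) in CONES.items()
    }


def magenta_like(resp):
    """True if both S and L exceed M."""
    return resp["S"] > resp["M"] and resp["L"] > resp["M"]


single = [w for w in range(380, 701) if magenta_like(cone_response({w: 1.0}))]
mixture = cone_response({440: 0.5, 620: 1.0})

print(single)  # [] : no single wavelength qualifies in this model
print(mixture, magenta_like(mixture))
```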

@Andrew564 has written: That was in the days before the eye had even developed a lens. The question to bear in mind is how colour vision developed: it's this way because it gives us a greater chance of survival, not because light does so and so.

I'm not sure that without the lens (single or compound) it's 'vision' as we'd understand it. There's an interesting discussion here.

-

@bobn2 has written:

The S cone's response is essentially 0 at 550nm and above, so no, it has no significant response in 'red'. The 'L' cone's response peters out at 400nm at the short end, well into the 'violets'. Magenta is caused by essentially equal stimulation of S and L cones, with minor stimulation of M cones, which is impossible with a single wavelength.

Yep, other way round, but the ends overlapping without much from the middle.

-

@JACS has written:

BTW, here is some empirical evidence of color differences. I am not going to speculate about the reasons. It is something I observed with Panasonic, but not with other brands (I observed different things for them).

The reds jump on you even if the images are processed with ACR. This is something I see right away, and cannot unsee it. BTW, if direct linking is not desired, please let me know.

========================

The red jacket, the red sign at the top right, the orange bag on the left. The green traffic lights and the blues are not that saturated:

I actually do not find this an extreme example, though I have had trouble with reds from certain raw files when processed in a particular program, and am therefore sensitised to the red/orange problem. Actually, I have looked carefully at traffic lights and the reds and greens are actually quite strident in real life, probably due to the LED illumination used for them these days. I find the same with the rear lights of modern cars. In the picture above, the red jacket stands out anyway, because everyone else is dressed in rather drab colours.

In fixing my problem, referred to above, it was not a need to reduce saturation, but luminance, for the strident red/orange issue.

David

-

@davidwien has written:@JACS has written:

BTW, here is some empirical evidence of color differences. I am not going to speculate about the reasons. It is something I observed with Panasonic, but not with other brands (I observed different things for them).

The reds jump on you even if the images are processed with ACR. This is something I see right away, and cannot unsee it. BTW, if direct linking is not desired, please let me know.

========================

The red jacket, the red sign at the top right, the orange bag on the left. The green traffic lights and the blues are not that saturated:

I actually do not find this an extreme example, though I have had trouble with reds from certain raw files when processed in a particular program, and am therefore sensitised to the red/orange problem. Actually, I have looked carefully at traffic lights and the reds and greens are actually quite strident in real life, probably due to the LED illumination used for them these days. I find the same with the rear lights of modern cars. In the picture above, the red jacket stands out anyway, because everyone else is dressed in rather drab colours.

In fixing my problem, referred to above, it was not a need to reduce saturation, but intensity, for the strident red/orange issue.

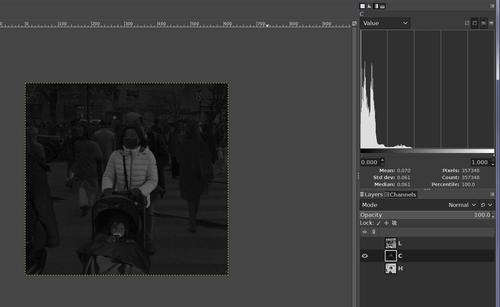

For what it's worth, here is the chroma level extracted from the above:

Certainly the red jacket got rendered with much more saturation than its surroundings, whether true to the scene or not ...
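The post doesn't say how the chroma map was made. One common definition is CIELAB chroma, C*ab = sqrt(a*^2 + b*^2). The sketch below computes it for an 8-bit sRGB pixel, assuming the sRGB transfer curve, the standard sRGB-to-XYZ matrix and a D65 white derived from that matrix. The pixel values are made up, not sampled from the posted image.

```python
import math

# Standard sRGB (D65) linear-RGB -> XYZ matrix.
SRGB_TO_XYZ = [
    [0.4124, 0.3576, 0.1805],
    [0.2126, 0.7152, 0.0722],
    [0.0193, 0.1192, 0.9505],
]
# Reference white = XYZ of linear RGB (1, 1, 1), so neutrals get zero chroma.
WHITE = [sum(row) for row in SRGB_TO_XYZ]


def srgb_to_linear(c8):
    """Undo the sRGB transfer curve for one 8-bit channel value."""
    c = c8 / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def lab_f(t):
    """CIELAB companding function."""
    delta = 6 / 29
    return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29


def srgb_to_lab(rgb8):
    lin = [srgb_to_linear(v) for v in rgb8]
    xyz = [sum(m * v for m, v in zip(row, lin)) for row in SRGB_TO_XYZ]
    fx, fy, fz = (lab_f(v / w) for v, w in zip(xyz, WHITE))
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def chroma(rgb8):
    """CIELAB chroma C*ab = sqrt(a*^2 + b*^2)."""
    _, a, b = srgb_to_lab(rgb8)
    return math.hypot(a, b)


# Hypothetical pixel values: a saturated red, a drab brown, a mid grey.
samples = {"red jacket": (200, 30, 40), "drab coat": (110, 100, 90), "grey": (128, 128, 128)}
for name, px in samples.items():
    print(name, round(chroma(px), 1))
```

Lightness (L*) and chroma are separate axes here, which fits the later remark that lowering luminance, rather than saturation, was what tamed the reds.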

-

@xpatUSA has written:@davidwien has written:@JACS has written:

BTW, here is some empirical evidence of color differences. I am not going to speculate about the reasons. It is something I observed with Panasonic, but not with other brands (I observed different things for them).

The reds jump on you even if the images are processed with ACR. This is something I see right away, and cannot unsee it. BTW, if direct linking is not desired, please let me know.

========================

The red jacket, the red sign at the top right, the orange bag on the left. The green traffic lights and the blues are not that saturated:

I actually do not find this an extreme example, though I have had trouble with reds from certain raw files when processed in a particular program, and am therefore sensitised to the red/orange problem. Actually, I have looked carefully at traffic lights and the reds and greens are actually quite strident in real life, probably due to the LED illumination used for them these days. I find the same with the rear lights of modern cars. In the picture above, the red jacket stands out anyway, because everyone else is dressed in rather drab colours.

In fixing my problem, referred to above, it was not a need to reduce saturation, but intensity, for the strident red/orange issue.

For what it's worth, here is the chroma level extracted from the above:

Certainly the red jacket got rendered with lots of saturation, whether true to the scene or not ...

Quite so, all I am saying is that the raw file of my camera needed different correction than yours!😀

David

-

@davidwien has written:@xpatUSA has written:@davidwien has written:@JACS has written:

BTW, here is some empirical evidence of color differences. I am not going to speculate about the reasons. It is something I observed with Panasonic, but not with other brands (I observed different things for them).

The reds jump on you even if the images are processed with ACR. This is something I see right away, and cannot unsee it. BTW, if direct linking is not desired, please let me know.

========================

The red jacket, the red sign at the top right, the orange bag on the left. The green traffic lights and the blues are not that saturated:

I actually do not find this an extreme example, though I have had trouble with reds from certain raw files when processed in a particular program, and am therefore sensitised to the red/orange problem. Actually, I have looked carefully at traffic lights and the reds and greens are actually quite strident in real life, probably due to the LED illumination used for them these days. I find the same with the rear lights of modern cars. In the picture above, the red jacket stands out anyway, because everyone else is dressed in rather drab colours.

In fixing my problem, referred to above, it was not a need to reduce saturation, but intensity, for the strident red/orange issue.

For what it's worth, here is the chroma level extracted from the above:

Certainly the red jacket got rendered with lots of saturation, whether true to the scene or not ...

Quite so, all I am saying is that the raw file of my camera needed different correction than yours!😀

David

I understand that your raw files need different correction than the image posted by @JACS.

-

@bobn2 has written:

I would say that there is a step before understanding the data, which is understanding the information - that is, what the data represents. I would argue with the concept of 'actual colours'. Colours are something that doesn't exist in the physical world; there are only photon energies (wavelengths of light). Colours are entirely part of human perception. This is an instance of an often misunderstood concept, about which I bang on frequently. The misunderstanding ultimately underpins many of the fallacies surrounding photography. The misunderstanding is that the input and output of the photographic process are both about light. This is wrong. The input is about light, and for colour photography, light separated into different energy bands. The output is about human perception - it is a recipe that specifies how a human being should perceive the scene. It's on this point that one can argue that a raw file doesn't encode 'colour' - rather it encodes light energy in different frequency bands. We generally use three frequency bands (though that's not a necessity), but they don't contain all of the information necessary to create the output image. That is because human perception is adaptive to lighting conditions, so we can't translate to an XYZ (perceptual) space without some information about the lighting conditions. To some extent that information will be in the metadata, in terms of the 'colour temperature' - but this is a very rough and ready indicator. Essentially, it assumes that the lighting is provided by a black-body radiator at a particular temperature. Many actual illuminants don't follow those rules, and thus we get metamerism errors in many translations.

@JACS has written: The goal is to create another 3D output which, presented to our eyes, would look like the actual spectrum more or less without being it. This cannot be done exactly, so it is a kind of an optimization problem with additional constraints, and tweaks of the cost function, like the DR of our monitors, what might look nicer rather than more accurate, etc.

Not quite, because the gamut of perceptual hues is larger than the set of spectral hues. Magenta, for instance, is a hue that doesn't occur in the spectrum. The reason for this is that human vision is not measuring the wavelength of light. It's just looking at the magnitude of a stimulus in three different (and overlapping) wavebands. It's made somewhat worse because mammalian tri-stimulus vision is a later adaptation of bi-stimulus vision, whereby the lower frequency band split into two, leaving us with an 'S' band well separated from the largely overlapped 'M' and 'L' bands. Birds (and presumably dinosaurs before them) have quadri-stimulus vision separated into nice, evenly spaced bands. That's one reason dinosaurs never invented colour photography.

Hi Bob,

Very interesting read.

Thanks.

Kindest regards,

Stany

www.fotografie.cafe

-

[deleted]

-

@JACS has written:

Two remarks about this:

From a purist point of view, all we need is to recreate the original scene (to be more precise, an output that our eyes would not distinguish from it). If it is lit by mostly orange light, so be it, etc. Our eyes adapt to the real scene; let them adapt to the reproduced one as well. The problem is that, for whatever reason, this is not happening. WB is a crutch to compensate for the deficiencies of our media, or just to make the image more likable.

Next, RAW converters typically have two sliders, not just the WB one, and these are enough to replicate all the possible per-channel linear scalings (with the brightness slider being the third degree of freedom). If there is metamerism at capture, then there will be metamerism in the output, no matter what.
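A sketch of that second remark, under an assumed model: white balance as three per-channel gains (a von Kries-style diagonal scaling). How any particular converter maps its temperature, tint and exposure sliders onto such gains is not specified here and varies. The gains can be fitted so that a chosen patch comes out neutral, but two surfaces that recorded identical raw values stay identical whatever gains are applied.

```python
def wb_gains(neutral_patch):
    """Per-channel gains that make `neutral_patch` come out with R = G = B.

    Green is left at gain 1.0, a common (but not universal) convention.
    """
    r, g, b = neutral_patch
    return (g / r, 1.0, g / b)


def apply_gains(pixel, gains):
    """Diagonal (per-channel) scaling, i.e. the white-balance model assumed here."""
    return tuple(v * k for v, k in zip(pixel, gains))


# Hypothetical linear raw values of a grey card under warm light.
grey_card = (0.62, 0.50, 0.21)
gains = wb_gains(grey_card)
print(apply_gains(grey_card, gains))  # each channel about 0.5

# Two different surfaces that happened to record the same raw triplet (metamers).
surface_a = (0.30, 0.25, 0.12)
surface_b = (0.30, 0.25, 0.12)

# Whatever gains are chosen, identical inputs give identical outputs.
for trial_gains in (gains, (1.3, 1.0, 0.7), (0.9, 1.0, 1.6)):
    print(apply_gains(surface_a, trial_gains) == apply_gains(surface_b, trial_gains))
```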

Not really sure what you're trying to say here so excuse me if I get it wrong.

There is no absolute truth about an image, and photography is never about the simple equation of capturing that absolute truth, then displaying it so that the eye sees the same thing. It may give you a symmetry and a framework that allows a comparison of the real scene in terms of absolute physics, but it has little to do with what actually happens.

How do you recreate a 3D scene and its illumination exactly? By transferring it to a piece of paper and holding it under a balanced LED light? How does this work with the additive system of your computer screen?

There is no truth in the data captured by the camera; the truth about colour only exists in the nature of human perception. Even the computer colour model is based on this, not on the physics of light. Even in a fully calibrated system, the baseline is only maintaining the perception of colour under standard illumination, as calibrated by the standard human eye. Do you really think that equal quantities of R, G and B actually produce grey? Of course they don't, just as colour is not made up of R, G and B components. It's just a perceptual model that's easy to work with. Real grey is the lack of a dominant hue; in RGB colour space it is represented by equal values, and the monitor profile in the monitor driver converts that into the actual voltages that produce the same sensation of grey for the standard human eye. There is no preservation of actual data, only the preservation of perceived colour under controlled lighting.
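The point about equal R, G and B can be illustrated with the sRGB definitions (IEC 61966-2-1). In this sketch, equal encoded values decode to equal linear values, which the standard matrix maps to the chromaticity of the D65 white point, roughly x = 0.3127, y = 0.3290. So "grey" in sRGB is defined relative to a chosen reference white, and code value 128 is only about a fifth of full linear intensity. A monitor profile would then map these values to the device's own signals, which this sketch does not model.

```python
# Standard sRGB (D65) linear-RGB -> XYZ matrix.
SRGB_TO_XYZ = [
    [0.4124, 0.3576, 0.1805],
    [0.2126, 0.7152, 0.0722],
    [0.0193, 0.1192, 0.9505],
]


def srgb_decode(c8):
    """8-bit sRGB code value -> linear value in [0, 1]."""
    c = c8 / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def xy_of_srgb(rgb8):
    """CIE xy chromaticity of a (non-black) 8-bit sRGB triplet."""
    lin = [srgb_decode(v) for v in rgb8]
    X, Y, Z = (sum(m * v for m, v in zip(row, lin)) for row in SRGB_TO_XYZ)
    return X / (X + Y + Z), Y / (X + Y + Z)


print(xy_of_srgb((128, 128, 128)))  # about (0.3127, 0.3290): the D65 white point
print(srgb_decode(128))             # about 0.216 of full linear intensity
```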

-

[deleted]